
* [[Media:Network_Management_and_High_Availability-Resume_for_the_exam.pdf]]
::* There is some virtualization and cloud material in this resume that could be added to the wiki.

* [[Media:Interacting_with_the_GCP-CA2_Cloud.pdf]]

* [[Media:Designing and Implementing an AWS Cloud Solution.pdf]]

* [[Media:PowerShell_and_GoogleCloud.pdf]]

<br />


"Cloud computing" is a term broadly used to define the on-demand delivery of IT resources and applications via the Internet, with pay-as-you-go pricing. [Definition from AWS Academy]

Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models. [This is another common definition, the one published by NIST. It is a little more complete and complex]

<br />

==Case study - Migration of an on-premise network onto a public Cloud provider==
CA1 - Physical Server Hardware Research / Case study of one company that successfully migrated their on-premise network onto a public Cloud provider

<br />

==[[AWS]]==

<br />

==Containers==
https://www.cio.com/article/2924995/software/what-are-containers-and-why-do-you-need-them.html

https://cloud.google.com/containers/

Containers are a solution to the problem of how to get software to run reliably when moved from one computing environment to another. This could be from a developer's laptop to a test environment, from a staging environment into production, or from a physical machine in a data center to a virtual machine in a private or public cloud.

Problems arise when the supporting software environment is not identical. For example: "You're going to test using Python 2.7, and then it's going to run on Python 3 in production and something weird will happen. Or you'll rely on the behavior of a certain version of an SSL library and another one will be installed. You'll run your tests on Debian and production is on Red Hat and all sorts of weird things happen."

And it is not only different software that can cause problems: "The network topology might be different, or the security policies and storage might be different but the software has to run on it."

'''How do containers solve this problem?'''
Put simply, a container consists of an entire runtime environment: an application, plus all its dependencies, libraries and other binaries, and the configuration files needed to run it, bundled into one package. By containerizing the application platform and its dependencies, differences in OS distributions and underlying infrastructure are abstracted away.

Containers offer a logical packaging mechanism in which applications can be abstracted from the environment in which they actually run.
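
The Python 2.7 / Python 3 example above can be reproduced with containers. The sketch below runs the same command in containers built from two different official Python images, so the interpreter version comes from the image rather than from the host. It assumes Docker is installed; the tags «python:2.7-slim» and «python:3.11-slim» are illustrative choices (the 2.7 images are no longer maintained). By default the script only prints the commands; set DRY_RUN=0 to run them.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Run the same one-liner inside a throwaway container (--rm deletes it afterwards).
# The Python version reported depends only on the image, not on the host.
check_python() {
  run docker run --rm "$1" python --version
}

check_python python:2.7-slim    # illustrative image tag
check_python python:3.11-slim   # illustrative image tag
</syntaxhighlight>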


<br />

==[[Google Cloud|Google Cloud Platform]]==
https://console.cloud.google.com

'''To see the amount remaining of the promotional credit (243.35):'''
Main Menu (hamburger) > Billing > Overview

The first thing we have to do is create a project. There is usually a default project called «My First Project». To create a new project, use the project selector located right next to the Main Menu (hamburger).

<br />

===Pricing===
* 1 shared vCPU, 0.6 GB RAM, 50 GB storage: 70.56 $/year
* 1 shared vCPU, 1.7 GB RAM, 50 GB storage: 189.6 $/year
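
As a quick sanity check on these figures, the sketch below converts the yearly prices listed above into approximate monthly costs (price / 12). It only does arithmetic on the numbers in the list; actual GCP prices vary by zone and change over time.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Convert a yearly price (in $) into an approximate monthly price, rounded to cents.
monthly_cost() {
  awk -v yearly="$1" 'BEGIN { printf "%.2f\n", yearly / 12 }'
}

monthly_cost 70.56   # 1 shared vCPU, 0.6 GB RAM, 50 GB storage
monthly_cost 189.6   # 1 shared vCPU, 1.7 GB RAM, 50 GB storage
</syntaxhighlight>

This prints 5.88 and 15.80, i.e. roughly 5.88 $ and 15.80 $ per month.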


<br />

===CA2-Interacting with the GCP===
[[Media:Interacting_with_the_GCP-CA2_Cloud.pdf]]

# Create a storage bucket and upload items from a host computer into it, using the command line and the GUI
## Using the command line
## Using the GUI
# Upload items from the bucket to a Linux virtual machine using the Google CLI
# Create a Linux VM, install Apache and upload the web page to the website
# Create a Linux VM, install NGINX and upload the web page to the website
# Create a Windows VM, install IIS and upload the web page to the website
# Live migration of a VirtualBox VM to the GCP
# Explain what live migration is and identify situations where DigiTech could benefit from it
# Research topic: other services available from Google’s Cloud Launcher
## Python tutorial
# Challenging research topic - GCSFUSE: it allows you to mount a bucket on a Debian Linux virtual machine
## Installing Cloud Storage FUSE and its dependencies
## Mounting a Google Cloud Storage bucket as a local disk
## Changing the root directory of an Apache server

<br />

===Cloud SDK: Command-line interface for Google Cloud Platform===
https://cloud.google.com/sdk/

The Cloud SDK is a set of tools for Cloud Platform. It contains [https://cloud.google.com/sdk/gcloud/reference/ '''gcloud'''], [https://cloud.google.com/storage/docs/gsutil '''gsutil'''], and [https://cloud.google.com/bigquery/docs/bq-command-line-tool '''bq'''], which you can use to access Google Compute Engine, Google Cloud Storage, Google BigQuery, and other products and services from the command line. You can run these tools interactively or in your automated scripts. A comprehensive guide to gcloud can be found in [https://cloud.google.com/sdk/gcloud/ '''gcloud Overview'''].


<br />

====Installation====
The installation process is described in detail at https://cloud.google.com/sdk/

I managed to install it successfully using the "Install for Debian / Ubuntu" option: https://cloud.google.com/sdk/docs/quickstart-debian-ubuntu

The general installation procedure for Linux (https://cloud.google.com/sdk/docs/quickstart-linux) produced errors on my system, and the installation could not be completed.

<br />

====Initialize the SDK====
https://cloud.google.com/sdk/docs/quickstart-linux

After installing the SDK, we must use the '''gcloud init''' command to perform several common SDK setup tasks. These include:
* Specifying the user account (adeloaleman@gmail.com): authorizing the SDK tools to access Google Cloud Platform using your user account credentials
* Setting up the default SDK configuration:
** The project (created in the GCP) that we will access by default
** ...

<syntaxhighlight lang="bash">
gcloud init
</syntaxhighlight>
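
«gcloud init» is interactive. The same defaults can also be set one at a time with «gcloud config set», which is handy in scripts (authorizing the account itself is still done with «gcloud auth login»). The sketch below assumes the project ID «my-project-id» (hypothetical; use your own) and the «europe-west4-a» zone chosen later on this page. By default it only prints the commands; set DRY_RUN=0 to run them.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Set the default project, zone and region used by later gcloud commands.
configure_gcloud() {
  local project="$1" zone="$2"
  run gcloud config set project "$project"
  run gcloud config set compute/zone "$zone"
  run gcloud config set compute/region "${zone%-*}"   # europe-west4-a -> europe-west4
}

configure_gcloud my-project-id europe-west4-a   # hypothetical project ID
</syntaxhighlight>

«gcloud config list» then shows the active configuration.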


<br />

===Creating a VM instance===
<span id="Crear_una_VM_Instance"></span>
Main Menu (hamburger) > Compute Engine > VM instances:
* Create Instance

<br />

====Linux VM Instance====
* '''Name:''' debian9-1
* '''Zone:''' The logical choice is the zone closest to where you are, although choosing another one will not make much difference (the price varies depending on the zone).
: We will choose the first West Europe zone offered: '''europe-west4-a'''
* '''Machine type:''' Customizable. The default configuration is:
: 1 vCPU / 3.75 GB memory
: The most basic configuration is:
:: micro (1 shared vCPU, 0.6 GB memory) (the price drops considerably if we choose this one) (it could be enough for Linux)
* '''Container:''' Deploy a container image to this VM instance.
: See [[Cloud Computing#Containers|Containers]]
: For now, it is not necessary to enable this option for our testing purposes.
* '''Boot disk:'''
: OS: The default option is Debian GNU/Linux 9 (stretch). This is the one we will be working with.
: Disk size: 10 GB, which is the default option for Linux.
* '''Identity and API access:'''
: Service account: This option is set to "Compute Engine default service account".
: Access scopes:
:* Allow default access: enabled by default.
:* Allow full access to all Cloud APIs
::* <span style="background:#00FF00">It is advisable to select this option.</span> If it is not selected, it will not be possible, for example, to copy files from a VM instance to a bucket like this:
<blockquote>
<blockquote>
<syntaxhighlight lang="bash">
gsutil cp file.txt gs://adelostorage/
</syntaxhighlight>
</blockquote>
</blockquote>
::* <span style="background:#00FF00">Another way to allow copying files from the VM to a bucket is to configure the "Service account": we must choose a service account that we have previously configured to allow this kind of action. See [[Cloud Computing#Service Accounts|Service Accounts]] for more details.</span>
:* Set access for each API
* '''Firewall:'''
: Add tags and firewall rules to allow specific network traffic from the Internet
:* Allow HTTP traffic: We will enable this option so that the web server on the VM can be reached from the Internet over HTTP (port 80).
:* Allow HTTPS traffic: We will enable this option so that the web server can also be reached over HTTPS (port 443).
* '''Management, disks, networking, SSH keys:''' This link lets us make many other settings. We will leave everything at the defaults for now.
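
The console settings above can also be expressed as a single «gcloud compute instances create» command. This is a sketch under assumptions: «f1-micro» is taken as the "micro (1 shared vCPU, 0.6 GB)" type, «--scopes=cloud-platform» corresponds to "Allow full access to all Cloud APIs", and the «http-server» / «https-server» tags are the ones the console's HTTP/HTTPS checkboxes add. The «debian-9» image family is the Debian 9 option mentioned above and may no longer be offered; «gcloud compute images list» shows what is currently available. By default the script only prints the command.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Create a small Debian VM roughly matching the console choices described above.
create_vm() {
  local name="$1" zone="$2"
  run gcloud compute instances create "$name" \
    --zone="$zone" \
    --machine-type=f1-micro \
    --image-family=debian-9 \
    --image-project=debian-cloud \
    --boot-disk-size=10GB \
    --scopes=cloud-platform \
    --tags=http-server,https-server
}

create_vm debian9-1 europe-west4-a
</syntaxhighlight>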


<br />

===Creating a storage bucket===
Create a storage bucket:

Main Menu > Storage > Browser:
* Create bucket:
** '''Name:''' mi_storage-1
** '''Default storage class:''' We will keep the default option: Multi-Regional
** '''Location:''' Europe
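
The same bucket can be created from the command line with «gsutil mb». A sketch, assuming the Cloud SDK is installed and initialized as described above: «-c» sets the storage class («multi_regional», matching the console choice, is a legacy class name; newer buckets typically use «standard») and «-l EU» selects the European multi-region. Bucket names are globally unique, so «mi_storage-1» may already be taken. By default the script only prints the command.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Create a bucket in the EU multi-region with the given storage class.
create_bucket() {
  local bucket="$1" class="${2:-multi_regional}"
  run gsutil mb -c "$class" -l EU "gs://$bucket"
}

create_bucket mi_storage-1
</syntaxhighlight>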


<br />

===Connecting to Instances===
https://cloud.google.com/compute/docs/instances/connecting-to-instance

Compute Engine provides tools to manage your SSH keys and help you connect to both Linux and Windows Server instances.

<br />

====Connecting to Linux instances====
You can connect to Linux instances through any of the following:
* The Google Cloud Platform Console
* The gcloud command-line tool
* Third-party tools

Compute Engine generates an SSH key for you and stores it in one of the following locations:
* By default, Compute Engine adds the generated key to project or instance metadata.
* If your account is configured to use OS Login, Compute Engine stores the generated key with your user account.

<br />

=====The Google Cloud Platform Console=====
This is a console that belongs to the GCP and is accessed through a browser window. To open it:
* In the GCP (Google Cloud Platform) Console, go to the VM Instances page.
* In the list of virtual machine instances, click SSH in the row of the instance that you want to connect to.

<br />

=====The gcloud command-line tool=====
With the '''gcloud''' command (included in the Cloud SDK) we can access the VM instance from the terminal of our own computer:
<syntaxhighlight lang="bash">
gcloud compute ssh [INSTANCE_NAME]
</syntaxhighlight>

<br />

=====Connecting using third-party tools=====
https://cloud.google.com/compute/docs/instances/connecting-advanced#thirdpartytools

See [[Linux#SSH]]

We can also access a VM instance without using GCP tools such as the Google Cloud Platform Console or gcloud, by using plain '''SSH''' instead.

Right after a VM instance is created, we cannot access it through SSH because no authentication method is available yet. As explained in [[Linux#SSH]], there are two approaches to SSH authentication:

* '''Using the password of the «Linux account»:'''
:* Google Cloud VM instances are created without a password by default. In addition, the default configuration of Google VM instances disallows password authentication: the file «/etc/ssh/sshd_config» contains «PasswordAuthentication no».
:* So we first need another way to access the GC VM in order to:
::# Set «PasswordAuthentication yes»
::# Set a password for user@VMInstance

* '''SSH keys:''' To authenticate the connection with this method, we first need to copy the «public key» of our computer to the Google Cloud VM instance.
:* As explained in [[Linux#SSH]], if we want to do this with the «ssh-copy-id» command, we first need to set a password for user@VMInstance and enable password authentication as explained above.
:* So, here too, we first need another way to access the GC VM in order to copy the «public key».
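
Once connected by another route (for example with «gcloud compute ssh», as described next), the first of the two password steps above can be done from the VM's shell. The sketch below edits whichever sshd_config file it is given, so it can be tried on a scratch copy first; on the VM you would run it from a root shell («sudo -i») against /etc/ssh/sshd_config, then set the password with «passwd USERNAME» and restart the daemon with «systemctl restart ssh» (the service is named «ssh» on Debian). It assumes GNU sed, as on Debian. Password logins are weaker than key-based ones, so treat this as a test-only setup.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Switch "PasswordAuthentication no" to "yes" in an sshd_config file.
# Also handles a commented-out "#PasswordAuthentication ..." line,
# and appends the setting if it is missing altogether.
enable_password_auth() {
  local config="$1"
  if grep -Eq '^#?PasswordAuthentication[[:space:]]' "$config"; then
    sed -i -E 's/^#?PasswordAuthentication[[:space:]].*/PasswordAuthentication yes/' "$config"
  else
    echo "PasswordAuthentication yes" >> "$config"
  fi
}

# Demonstration on a scratch file instead of the real /etc/ssh/sshd_config.
tmp="$(mktemp)"
printf 'Port 22\nPasswordAuthentication no\n' > "$tmp"
enable_password_auth "$tmp"
cat "$tmp"
rm -f "$tmp"
</syntaxhighlight>

The demonstration prints «Port 22» followed by «PasswordAuthentication yes».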


So, to connect to the GC VM and make the configuration needed to allow SSH connections, we can use the «gcloud» command as we have already seen:
<syntaxhighlight lang="bash">
gcloud compute ssh [INSTANCE_NAME]
</syntaxhighlight>

The first time we connect through «gcloud», the command generates the keys needed for the connection:

[[File:SSH_google_keys.png|900px|thumb|center|]]

<span style="background:#D8BFD8">What I have observed is that, after creating the GC VM, if I try to log in with «ssh» before having logged in with «gcloud», access is not possible because there is no authentication method. However, if I first connect with «gcloud», I can then log in with «ssh» without any other configuration and without copying the «public key». I assume this is because, as shown in the image above, «gcloud» has created the keys and copied the «public key» to the destination machine, and these same keys apparently also work with a normal ssh connection (without going through «gcloud»). I verified this by connecting from my computer to the GC VMs.</span> <span style="color:#FFF;background:#FF0000">However, following the same procedure, I tried to connect between two GC VM instances and could not establish the SSH connection. Following advice from a forum, I solved the problem by deleting all the files in «~/.ssh/» except «known_hosts», generating new keys (with «ssh-keygen») and copying the new «public key» to the corresponding GC VM, following the procedures in [[Linux#SSH]]</span>.
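
The observation above matches how «gcloud compute ssh» works: on first use it creates a key pair at ~/.ssh/google_compute_engine (private) and ~/.ssh/google_compute_engine.pub (public) and adds the public key to the project or instance metadata, so a plain «ssh» client can reuse it. A sketch, assuming the instance has an external IP and that the remote user name is the one you pass in (both assumptions); 203.0.113.10 is a documentation placeholder address. By default it only prints the commands.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Look up the external (NAT) IP address of an instance.
external_ip() {
  local name="$1" zone="$2"
  run gcloud compute instances describe "$name" --zone="$zone" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)'
}

# Connect with plain ssh, reusing the key pair that gcloud generated on first use.
plain_ssh() {
  local user="$1" ip="$2"
  run ssh -i "$HOME/.ssh/google_compute_engine" "$user@$ip"
}

external_ip debian9-1 europe-west4-a
plain_ssh "$USER" 203.0.113.10   # placeholder IP address
</syntaxhighlight>

In real use the two can be combined, e.g. «plain_ssh "$USER" "$(DRY_RUN=0 external_ip debian9-1 europe-west4-a)"».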


<br />

===Copy files===

<br />

====From the host computer to a bucket on Google Cloud====
After creating the bucket, we can copy files to it. There are different ways to do this:
* Simple drag and drop
* Using the Google Cloud terminal
* From the computer's terminal after installing the Cloud SDK

<br />

=====From the computer's terminal after installing the Cloud SDK=====
The following command copies a file from my computer to a bucket on GC:
<syntaxhighlight lang="bash">
gsutil cp probando gs://adelostorage
</syntaxhighlight>
where adelostorage is the name of the bucket.
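
A few related «gsutil» forms, sketched under the same setup (bucket «adelostorage»; the local directory «site» is hypothetical): «cp -r» copies a directory recursively, the top-level «-m» flag runs many-file transfers in parallel, and «gsutil ls» lists what ended up in the bucket. By default the script only prints the commands.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Upload a whole local directory (recursively, in parallel) and list the bucket.
upload_dir() {
  local dir="$1" bucket="$2"
  run gsutil -m cp -r "$dir" "gs://$bucket/"
  run gsutil ls "gs://$bucket/"
}

upload_dir site adelostorage   # "site" is a hypothetical local directory
</syntaxhighlight>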


<br />

====From the host computer to a Linux VM Instance or between VMs====
https://cloud.google.com/sdk/gcloud/reference/compute/scp

To copy a remote directory, ~/narnia, from example-instance to the ~/wardrobe directory of your local host, run:
<syntaxhighlight lang="bash">
gcloud compute scp --recurse example-instance:~/narnia ~/wardrobe
</syntaxhighlight>
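
The example above copies from the instance to the local host; swapping the arguments copies in the other direction, which is what the section title describes. A sketch, assuming the instance «debian9-1» in «europe-west4-a» from earlier and a hypothetical local file «index.html»; passing «--zone» avoids the interactive zone prompt. By default the script only prints the command.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Copy a local file into the home directory of a VM instance.
# The "~/" is quoted so it is expanded on the VM, not locally.
push_to_vm() {
  local file="$1" instance="$2" zone="$3"
  run gcloud compute scp --zone="$zone" "$file" "$instance:~/"
}

push_to_vm index.html debian9-1 europe-west4-a   # index.html is a hypothetical file
</syntaxhighlight>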


<br />

====From a bucket to a VM Instance====
After logging in to a VM instance, I can copy a file from a bucket to that VM instance with the following command:
<syntaxhighlight lang="bash">
gsutil cp gs://adelostorage/probando.txt .
</syntaxhighlight>
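
To pull everything from the bucket instead of a single file, «gsutil rsync» mirrors a bucket into a local directory and only copies what has changed. A sketch to run on the VM; the target directory (here /tmp/site in the test, or any directory you choose) is hypothetical. By default the script only prints the commands.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Mirror a bucket into a local directory (-r: recurse into "subdirectories").
sync_bucket_to_dir() {
  local bucket="$1" dir="$2"
  run mkdir -p "$dir"
  run gsutil -m rsync -r "gs://$bucket" "$dir"
}

sync_bucket_to_dir adelostorage "$HOME/site"   # hypothetical target directory
</syntaxhighlight>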


<br />

==Azure==
https://portal.azure.com/#home

<br />

==Google Cloud Platform (continued)==

===Accessing Cloud Storage buckets===
I have been looking for a way to access my buckets so that they can be shared with other people:
* Through a URL
* Through an IP address

The following links explain how to make a bucket public and how to access it through a URL:
* https://cloud.google.com/storage/docs/access-control/making-data-public
* https://cloud.google.com/storage/docs/access-public-data

I thought that what these links explain was the solution. However, I believe it only makes the bucket accessible to the users of a project, because the URL I understood them to refer to is:
* https://console.cloud.google.com/storage/browser/BUCKET_NAME
* In my case: https://console.cloud.google.com/storage/browser/sinfronterasbucket1

and that link only gives access to my bucket while logged in to my account.
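
The links above describe making objects readable by «allUsers». Once that is done, objects are served without signing in from https://storage.googleapis.com/BUCKET_NAME/OBJECT_NAME, which is a different URL from the console one (the console URL always requires a Google login). A sketch: one function grants public read access with «gsutil iam ch» (printed, not executed, by default), the other just builds the public URL. Making a bucket public exposes every object in it, so the bucket name below is only an example; object names containing spaces or other special characters would also need to be percent-encoded, which this sketch does not do.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Grant read access on every object in the bucket to anyone on the Internet.
make_bucket_public() {
  run gsutil iam ch allUsers:objectViewer "gs://$1"
}

# Build the public (no-login) URL of an object.
public_url() {
  local bucket="$1" object="$2"
  echo "https://storage.googleapis.com/$bucket/$object"
}

make_bucket_public sinfronterasbucket1
public_url sinfronterasbucket1 index.html
</syntaxhighlight>

This prints «gsutil iam ch allUsers:objectViewer gs://sinfronterasbucket1» followed by «https://storage.googleapis.com/sinfronterasbucket1/index.html».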


<br />

====Connecting your domain to a Cloud Storage Bucket====
What I did find is that there is a way to associate a bucket with a domain that we own. For example, I am going to associate the following subdomain with a bucket:
* www.es.sinfronteras.ws
* https://console.cloud.google.com/storage/browser/www.es.sinfronteras.ws

The following link explains how to connect your domain to Cloud Storage and host a static website in the bucket: https://cloud.google.com/storage/docs/hosting-static-website

<br />

==Alibaba Cloud==
https://www.alibabacloud.com/

An account has been created using my Gmail account.

* '''Elastic Compute Service''':
: Pricing: https://www.alibabacloud.com/en/product/ecs?_p_lc=1&spm=5176.ecsnewbuy.0.0.45d11594Q1r4Au&tabIndex=2#pricingFrame

<br />

==Google Cloud Platform (continued)==

===Mounting Cloud Storage buckets as file systems===

Cloud Storage FUSE: Mounting Cloud Storage buckets as file systems on Linux or OS X systems:
https://cloud.google.com/storage/docs/gcs-fuse

Cloud Storage FUSE is an open source FUSE adapter that allows you to mount Cloud Storage buckets as file systems on Linux or OS X systems.

<br />

====Installing Cloud Storage FUSE and its dependencies====
https://github.com/GoogleCloudPlatform/gcsfuse/blob/master/docs/installing.md

* Add the gcsfuse distribution URL as a package source and import its public key:
<blockquote>
<syntaxhighlight lang="bash">
export GCSFUSE_REPO=gcsfuse-`lsb_release -c -s`
echo "deb http://packages.cloud.google.com/apt $GCSFUSE_REPO main" | sudo tee /etc/apt/sources.list.d/gcsfuse.list
curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
</syntaxhighlight>
</blockquote>

* Update the list of available packages and install gcsfuse:
<blockquote>
<syntaxhighlight lang="bash">
sudo apt-get update
sudo apt-get install gcsfuse
</syntaxhighlight>
</blockquote>

* (Ubuntu before Wily only) Add yourself to the fuse group, then log out and back in:
<blockquote>
<syntaxhighlight lang="bash">
sudo usermod -a -G fuse $USER
exit
</syntaxhighlight>
</blockquote>

* Future updates to gcsfuse can be installed in the usual way:
<blockquote>
<syntaxhighlight lang="bash">
sudo apt-get update && sudo apt-get upgrade
</syntaxhighlight>
</blockquote>

<br />


====Set up credentials for Cloud Storage FUSE====
Cloud Storage FUSE auto-discovers credentials based on application default credentials:
* If you are running on a Google Compute Engine instance with scope storage-full configured, then Cloud Storage FUSE can use the Compute Engine built-in service account. For more information, see Using Service Accounts with Applications.
** <span style="background:#D8BFD8">If you are using a Google Compute Engine instance, you do not have to do anything to mount the bucket. However, if the access scopes of that instance were not configured correctly, you will not be able to copy files from the instance to the mounted bucket. As explained in the [[Cloud Computing#Crear una VM Instance|creation of the VM instance]], it is advisable to choose "Allow full access to all Cloud APIs" or to choose a service account that has previously been configured to allow this kind of action. See [[Cloud Computing#Service Accounts|Service Accounts]] for more details.</span>

* If you installed the Google Cloud SDK and ran gcloud auth application-default login, then Cloud Storage FUSE can use these credentials.

* If you set the environment variable GOOGLE_APPLICATION_CREDENTIALS to the path of a service account's JSON key file, then Cloud Storage FUSE will use this credential. For more information about creating a service account using the Google Cloud Platform Console, see Service Account Authentication.

<br />


====Mounting a Google Cloud Storage Bucket as a local disk====
* Create a directory:
<syntaxhighlight lang="bash">
mkdir /path/to/mount
</syntaxhighlight>

* Create the bucket you wish to mount, if it doesn't already exist, using the Google Cloud Platform Console.

* Use Cloud Storage FUSE to mount the bucket (e.g. example-bucket):
<syntaxhighlight lang="bash">
gcsfuse example-bucket /path/to/mount
</syntaxhighlight>
<blockquote>
<span style="background:#D8BFD8">It is very important to note that, if we mount the bucket as shown above, we will not be able to access it through the web browser: the web server will not have permission to access this directory.</span>

<span style="background:#D8BFD8">This is a problem if we want to put a website in the bucket and serve it through the VM instance.</span>

<span style="background:#D8BFD8">In this case, we must mount it as follows:</span>
https://www.thedotproduct.org/posts/mounting-a-google-cloud-storage-bucket-as-a-local-disk.html
<syntaxhighlight lang="bash">
sudo gcsfuse -o noatime -o noexec --gid 33 --implicit-dirs -o ro -o nosuid -o nodev --uid 33 -o allow_other mi_storage1 /path/directory/
</syntaxhighlight>

'''Explaining the mount options:'''
* ro - mount the volume in read-only mode
* uid=33,gid=33 - grant access to user ID and group ID 33 (usually the web server user, e.g. www-data):
** <span style="background:#00FF00">This assumes your OS has a web server user whose user ID (UID) and group ID (GID) are 33</span>
<blockquote>
To display the web server user ID (UID) and group ID (GID): http://www.digimantra.com/linux/find-users-uid-gid-linux-unix/
<syntaxhighlight lang="bash">
id -u www-data
id -g www-data
</syntaxhighlight>
</blockquote>
* noatime - don't update the access times of files
* _netdev - mark this as a network volume so the OS knows that the network must be available before it tries to mount it (an /etc/fstab option; not used in the command above)
* noexec - don't allow execution of files in the mount
* nosuid - ignore set-user-ID and set-group-ID bits on files in the mount
* nodev - don't interpret device files in the mount
* user - allow non-root users to mount the volume (an /etc/fstab option; not used in the command above)
* implicit_dirs - treat object paths as if they were directories; this is critical and specific to Cloud Storage FUSE (passed as --implicit-dirs in the command above)
* allow_other - allow users other than the one who mounted the volume (for example, the web server user) to access it; this is a FUSE option
</blockquote>

* To unmount the bucket:
<syntaxhighlight lang="bash">
fusermount -u /home/shared/local_folder/
</syntaxhighlight>
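
The «_netdev» and «user» options in the list above come from the /etc/fstab form of this mount, which remounts the bucket automatically at boot. A sketch that builds such a line, assuming the bucket «mi_storage1», the mount point /path/directory and UID/GID 33 from the command above; gcsfuse accepts its own flags as fstab options with underscores instead of dashes (e.g. «implicit_dirs»). The line is only printed, so it can be reviewed before it is appended to /etc/fstab.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Build a read-only /etc/fstab entry for a gcsfuse mount owned by a given UID/GID.
# Fields: device (bucket), mount point, filesystem type, options, dump, pass.
gcsfuse_fstab_line() {
  local bucket="$1" mountpoint="$2" uid="$3" gid="$4"
  local opts="ro,_netdev,allow_other,implicit_dirs,noatime,noexec,nosuid,nodev"
  printf '%s %s gcsfuse %s,uid=%s,gid=%s 0 0\n' \
    "$bucket" "$mountpoint" "$opts" "$uid" "$gid"
}

gcsfuse_fstab_line mi_storage1 /path/directory 33 33
</syntaxhighlight>

To use the web server's actual IDs instead of the hard-coded 33, pass «"$(id -u www-data)"» and «"$(id -g www-data)"», pipe the result to «sudo tee -a /etc/fstab», and test the entry with «sudo mount /path/directory».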


<br />

===Firewall Rules===
'''How to open a specific port, such as 9090, in Google Compute Engine:'''

https://cloud.google.com/vpc/docs/using-firewalls

https://stackoverflow.com/questions/21065922/how-to-open-a-specific-port-such-as-9090-in-google-compute-engine

By default, incoming traffic on most ports is blocked by the firewall; for web traffic, only port tcp:80 is open (when "Allow HTTP traffic" is enabled for the instance). If we configure, for example, a web server (Apache, NGINX) on a port other than 80, we must modify the firewall rules so that the web server can serve pages through that port.

'''To open a port:'''
* Choose your project: next to the Main Menu (hamburger)
* Then go to: hamburger > VPC network > Firewall rules:
** Create a firewall rule

Then follow the steps described in https://cloud.google.com/vpc/docs/using-firewalls.

With the following settings (everything else left at the defaults) I was able to do it:
* Targets:
** All instances in the network
* Source IP ranges:
** If you want it to apply to all ranges, specify 0.0.0.0/0
* Specified protocols and ports:
** tcp:8080 (to open port 8080)
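
The same rule can be created with «gcloud compute firewall-rules create». A sketch matching the settings above: with no «--target-tags», the rule applies to all instances in the network, «--source-ranges=0.0.0.0/0» allows any source, and «--allow=tcp:8080» opens the port. The rule name «allow-tcp-8080» and the «default» network are assumptions. By default the script only prints the command.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Open one TCP port to the whole Internet for every instance in the network.
open_tcp_port() {
  local port="$1" network="${2:-default}"
  run gcloud compute firewall-rules create "allow-tcp-$port" \
    --network="$network" \
    --direction=INGRESS \
    --allow="tcp:$port" \
    --source-ranges=0.0.0.0/0
}

open_tcp_port 8080
</syntaxhighlight>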


<br />

===Service Accounts===
A service account is a special account whose credentials you can use in your application code to access other Google Cloud Platform services.

Creating and Managing Service Accounts:
https://cloud.google.com/iam/docs/creating-managing-service-accounts#creating_a_service_account

Creating and Enabling Service Accounts for Instances:
https://cloud.google.com/compute/docs/access/create-enable-service-accounts-for-instances#createanewserviceaccount

Granting Roles to Service Accounts:
https://cloud.google.com/iam/docs/granting-roles-to-service-accounts

For example, to be able to copy files from a VM instance to a bucket, you need to set up the instance to run as a service account. That service account must have been granted the roles that allow this copy operation.

I could not grant the roles through the command line because it produced an error. I was, however, able to do it from the GCP web console. For the copy operation described above, the following storage-related roles must be added to the service account the VM runs as:
* Storage Admin
* Storage Object Admin
* Storage Object Creator
* Storage Object Viewer

After creating the service account, when creating the VM instance we can set the new instance to run as this service account (see ).
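
For reference, a command-line version of these steps might look like the sketch below; the command-line route produced an error in the attempt described above, so treat this as untested. It creates the account, grants Storage Object Admin («roles/storage.objectAdmin») at project level as a narrower alternative to the four roles listed, and creates a VM that runs as the account. The project ID «my-project-id», the account name «vm-storage-writer» and the VM name «debian9-2» are hypothetical. By default the script only prints the commands.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

setup_storage_sa() {
  local project="$1" name="$2" vm="$3" zone="$4"
  local email="$name@$project.iam.gserviceaccount.com"
  # 1. Create the service account.
  run gcloud iam service-accounts create "$name" --project="$project" \
    --display-name="$name"
  # 2. Let it create, read and delete objects in the project's buckets.
  run gcloud projects add-iam-policy-binding "$project" \
    --member="serviceAccount:$email" --role=roles/storage.objectAdmin
  # 3. Create a VM that runs as this service account.
  run gcloud compute instances create "$vm" --project="$project" --zone="$zone" \
    --service-account="$email" --scopes=cloud-platform
}

setup_storage_sa my-project-id vm-storage-writer debian9-2 europe-west4-a   # hypothetical names
</syntaxhighlight>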


<br />

===Live Migration of a Virtual Machine===
* The VirtualBox machine that you migrate should be using a VMDK (Virtual Machine Disk), not a VDI (VirtualBox Disk Image) or VHD (Virtual Hard Disk).
* Make sure the NIC (Network Interface Controller) (Network > Adapter 1 > Attached to) is set to NAT so that you have Internet access.
* <span style="background:#00FF00">It is very important to keep in mind that, to be able to connect to the VM instance after migrating it (with gcloud compute ssh VMmigrada), the '''openssh-server''' package must have been installed on the VM beforehand.</span>
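
If the VirtualBox machine currently uses a VDI disk, one way to get a VMDK is to clone the disk with «VBoxManage clonemedium» («clonehd» in older VirtualBox releases) and then attach the new file to the VM in place of the old one; the VM should be powered off first. The second function covers the openssh-server requirement inside a Debian/Ubuntu guest. The disk file name «debian.vdi» is hypothetical. By default the script only prints the commands.

<syntaxhighlight lang="bash">
#!/usr/bin/env bash
# Print each command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# On the host: make a VMDK copy of a VDI disk (debian.vdi -> debian.vmdk).
vdi_to_vmdk() {
  local src="$1" dst="${1%.vdi}.vmdk"
  run VBoxManage clonemedium disk "$src" "$dst" --format VMDK
}

# Inside the guest (Debian/Ubuntu): install the SSH server before migrating.
prepare_guest_ssh() {
  run sudo apt-get install -y openssh-server
}

vdi_to_vmdk debian.vdi   # hypothetical disk file
</syntaxhighlight>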

| |

| − | | |

| − | '''Para realizar la migración:'''

| |

| − | * '''In the GCP'''

| |

| − | ** Main Menu > Compute engine > VM Instances: Import VM

| |

| − | ** There you will be redirected to the Cloud Endure Platform (VM Migration Service)

| |

| − | | |

| − | | |

| − | * '''In the Cloud Endure Platform (VM Migration Service)'''

| |

| − | : https://gcp.cloudendure.com

:* <span style="background:#D8BFD8">'''GCP credentials'''</span>
:: '''We need to create GCP credentials (a Google Cloud Platform JSON private key file).''' To generate the JSON private key file (the PKCS12 format is also possible). I believe these credentials would only change if we wanted to migrate to a different project:
::* Click "Find out where to get these credentials".
::* This opens the documentation page, which has a link to "Open the link of Credentials".
::* Choose the project: the target project your VM will be migrated to.
::* Copy the Project ID, because it will be needed later in the CloudEndure platform.
::* Click "Create credentials" and select the "Service Account Key" option.
::* Select JSON as the key type.
::* The service account should be "Compute Engine default service account".
::* Click Create: the JSON file will be downloaded to your computer.
::* Go back to the CloudEndure platform (VM Migration Service).
::* Enter the Google Cloud Platform project ID.
::* Upload the JSON file (Google Cloud Platform JSON private key), then click "Save".

::* <span style="background:#00FF00">This is the JSON file I created in my GC account when I migrated my VM:</span> [[Media:ProyectoAdelo2017279-78811cb38326.json]]

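The Project ID that the CloudEndure form asks for is also stored inside the downloaded key file, because service-account JSON keys carry a `"project_id"` field. The following is a minimal sketch for reading it back out. It assumes a console-generated key in which the field sits on a single line. The helper name `key_project_id` is hypothetical. It uses `sed` rather than a JSON parser to avoid extra dependencies. If `jq` is installed, `jq -r .project_id key.json` is the more robust option.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "project_id" value of a GCP service-account
# JSON key, so it can be pasted into the CloudEndure project settings.
# Assumption: the field is on one line, as in console-generated keys.
key_project_id() {
  local key_file="$1"
  if [ ! -r "$key_file" ]; then
    echo "cannot read key file: $key_file" >&2
    return 1
  fi
  # Capture the text between the quotes that follow "project_id":
  sed -n 's/.*"project_id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$key_file" | head -n 1
}
```

For example, `key_project_id ~/Downloads/<your-key>.json` prints the ID to copy into the form. The file name here is a placeholder.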

:* <span style="background:#D8BFD8">'''Replication settings'''</span>
::* Live migration target: Google EU West 2 (London)
::* Click "Save replication settings".
::* After this, the message "Project setup complete. Congratulations!" appears:
:::* Your Live Migration Project is set up, so the next step is to install the CloudEndure Agent on your source machine.


:* <span style="background:#D8BFD8">'''Install the CloudEndure Agent on your Source machine'''</span>
:: How To Add Machines: https://gcp.cloudendure.com/#/project/2be028cc-40ae-4215-ad2c-52e3ce4e325d/machines
:: To add a machine to GCP, install the CloudEndure Agent on it (your VirtualBox VM, for example). Data replication (the process of transferring the VM) begins automatically once the CloudEndure Agent is installed.


<blockquote>
Your Agent installation token (this code is generated automatically by the CloudEndure platform (VM Migration Service). I am not sure whether it is different for each migration; I think it may depend on the project, in which case it would change if we wanted to migrate a VM to another project):
<syntaxhighlight lang="bash">
0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6
</syntaxhighlight>


'''For Linux machines:'''

Download the installer:

<syntaxhighlight lang="bash">
wget -O ./installer_linux.py https://gcp.cloudendure.com/installer_linux.py
</syntaxhighlight>


<span style="background:#00FF00">Before running the next step, we had to install:</span>
<syntaxhighlight lang="sh">
# Refresh the package index, then install the packages the installer needed
sudo apt-get update
sudo apt-get install -y python2.7 gcc
</syntaxhighlight>


Then run the installer and follow the instructions:
<syntaxhighlight lang="bash">
# Use the interpreter installed above; a plain "python" command may not exist on newer Ubuntu releases
sudo python2.7 ./installer_linux.py -t 0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6 --no-prompt
</syntaxhighlight>


'''For Windows machines:'''

Download the Windows installer from https://gcp.cloudendure.com/installer_win.exe, then launch it as follows:

<syntaxhighlight lang="bash">
installer_win.exe -t 0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6 --no-prompt
</syntaxhighlight>

</blockquote>
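A failed installer run can be avoided by first checking that the tools installed above (python2.7, gcc) are present. The same check can cover the SSH server, which is needed later for `gcloud compute ssh`. The following is a minimal sketch of that check. The helper name `missing_commands` is hypothetical. It also assumes that `sshd` is on the PATH; on Ubuntu it lives in /usr/sbin, which is on root's PATH.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print each given command name that is NOT found on
# the PATH (one per line). Returns 1 if anything is missing, 0 otherwise.
missing_commands() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      status=1
    fi
  done
  return "$status"
}
```

On the source VM, `sudo bash -c '...; missing_commands python2.7 gcc sshd'` (or the same call from a root shell) lists whatever still has to be installed. An empty result means the installer's prerequisites and the SSH server are in place.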



:* <span style="background:#D8BFD8">'''Data replication'''</span>
::* Data replication begins automatically once the CloudEndure Agent installation is complete. You can follow its progress on the CloudEndure platform (VM Migration Service).
::* Data replication is, in fact, the process in which the VM is copied to GCP.



:* <span style="background:#D8BFD8">'''Launch target Machine'''</span>
::* Once data replication has completed, an instance will have been created on the VM Instances page of the corresponding GCP project. This instance, whose name contains "replicator", is not the final VM instance. We must then click "Launch target Machine" (still in the CloudEndure platform (VM Migration Service)), which creates the VM instance on the corresponding GCP page. There will then be two instances on the GCP page. In my case:
:::* ce-replicator-goo16-20eb5f29
:::* ubuntu-18932dd6

::* <span style="background:#00FF00">If we make changes to our local VirtualBox VM, we can easily update the corresponding Google VM instance by clicking "Launch target Machine" again.</span> I believe the ce-replicator-goo16-20eb5f29 instance is what performs this update.
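The two instances can be told apart from the command line by their names. The following is a minimal sketch that labels each instance name read from standard input. The helper `instance_role` is hypothetical. It also assumes that replication servers always have names starting with `ce-replicator` and that every other instance is a migrated target. Both assumptions are based only on the two names in this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: label instance names (one per line on stdin) as the
# CloudEndure replication server or a presumed migrated target machine.
# Assumption: replicator names start with "ce-replicator", as in this example.
instance_role() {
  local name
  while IFS= read -r name; do
    [ -z "$name" ] && continue
    case "$name" in
      ce-replicator*) printf '%s\treplicator\n' "$name" ;;
      *)              printf '%s\ttarget\n' "$name" ;;
    esac
  done
}
```

It can be fed from `gcloud compute instances list --format="value(name)" | instance_role`. Other, unrelated instances in the same project would also be reported as "target".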



<br />
===Live migration of a desktop computer===
Migrating your computer to Google Cloud using CloudEndure


<br />
===Create Bitnami WordPress Site on Google Cloud===
[[Media:Create_Bitnami_WordPress_Site_on_Google_Cloud-Michael2018.pdf]]


<br />
===Setting up IIS web site on Google cloud===
[[Media:Setting_up_IIS_web_site_on_Google_cloud-Michael2018.pdf]]


<br />

==[[AWS]]==

| |


==OVH==

<br />
===My current plan===
* To access your customer area (the Manager), log in with your customer identifier (NIC) and your password:
* https://www.ovh.com/managerv3/index.pl
* https://www.ovh.com/manager/web/#/configuration
: Your identifier: va266899-ovh
: Password: eptpi...

* VPS IPv4 address: 37.59.121.119
* VPS IPv6 address: 2001:41d0:0051:0001:0000:0000:0000:18ae
* VPS name: vps109855.ovh.net


I have the VPS Classic 2 option:
* 3 vCPU, 4 GB RAM, 50 GB storage


<br />
===Plans===
https://www.ovh.ie/vps/vps-ssd.xml

* 1 vCPU, 2 GB RAM, 20 GB storage: 35.88€/year
* 1 vCPU, 4 GB RAM, 40 GB storage: 71.88€/year
* 2 vCPU, 8 GB RAM, 80 GB storage: 143.88€/year
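The plan prices above are yearly. Dividing them by 12 gives the equivalent monthly cost, which makes comparison with providers that quote monthly prices easier. The following is a small sketch of that conversion. The helper name `monthly_price` is hypothetical. It calls `awk` because bash arithmetic is integer-only.

```bash
#!/usr/bin/env bash
# Hypothetical helper: convert a yearly price (e.g. 35.88) into the
# equivalent monthly price, rounded to two decimals.
monthly_price() {
  awk -v yearly="$1" 'BEGIN { printf "%.2f\n", yearly / 12 }'
}
```

For the three plans listed, `for p in 35.88 71.88 143.88; do monthly_price "$p"; done` gives 2.99, 5.99 and 11.99 €/month.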


"Cloud computing" is a term broadly used to define the on-demand delivery of IT resources and applications via the Internet, with pay-as-you-go pricing. [Definition from AWS Academy]

Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models. [Another common definition, more complete and more complex; it is the NIST definition from SP 800-145]

Case study - Migration of an on-premise network onto a public Cloud provider

CA1 - Physical Server Hardware Research / Case study of one company that successfully migrated their on-premise network onto a public Cloud provider

Media:Physical_Server_Hardware_Research-CA1_Cloud.pdf

Essential Characteristics

On-demand self-service: A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider.

Broad network access: Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations).

Resource pooling: The provider's computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. There is a sense of location independence in that the customer generally has no control or knowledge over the exact location of the provided resources but may be able to specify location at a higher level of abstraction (e.g., country, state, or datacenter). Examples of resources include storage, processing, memory, and network bandwidth.

Rapid elasticity: Capabilities can be elastically provisioned and released, in some cases automatically, to scale rapidly outward and inward commensurate with demand. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be appropriated in any quantity at any time.

Measured service: Cloud systems automatically control and optimize resource use by leveraging a metering capability at some level of abstraction appropriate to the type of service (e.g., storage, processing, bandwidth, and active user accounts). Resource usage can be monitored, controlled, and reported, providing transparency for both the provider and consumer of the utilized service.

On-Premise Computing:

- Requires hardware, space, electricity, cooling

- Requires managing OS, applications and updates

- Software Licensing

- Difficult to scale: too much or too little capacity

- High upfront capital costs

- You have complete control

Cloud Computing:

- Shared, multi-tenant environment

- Pools of computing resources

- Resources can be requested as required

- Available via the Internet

- Private clouds can be made available via a private WAN

- Pay as you go

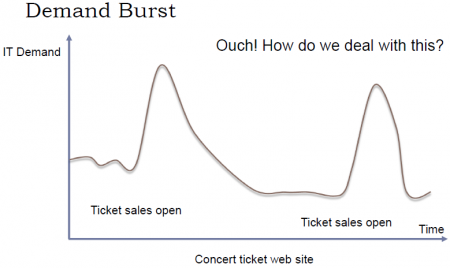

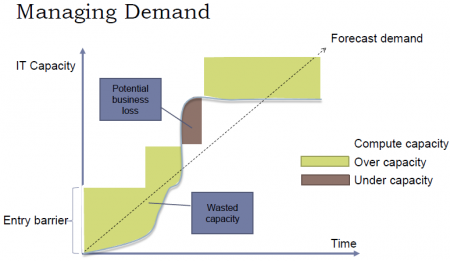

One of the most important advantages of the cloud is that it can be scaled easily (resources can be requested as required). In other words, in the cloud, capacity can easily be adjusted to demand: the system is configured for high capacity only when demand is high. With on-premise computing, the system is generally sized to cover an average capacity, and that estimate usually has to be inflated so that high demand can still be covered. As a result, when demand is low we are at "over capacity", and when demand is very high we may still end up at "under capacity". The cloud solves this problem. The concert ticket website example in the accompanying figures illustrates this.
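The over-/under-capacity effect can be made concrete with a little arithmetic. With a fixed on-premise capacity, every period in which demand is below that capacity leaves idle capacity that is still paid for. Every period in which demand is above it leaves demand unserved. The following is a minimal sketch of that calculation. The helper `capacity_report` is hypothetical. The demand figures in the usage example are made up, not taken from the concert-ticket figures, and only integers are supported.

```bash
#!/usr/bin/env bash
# Hypothetical helper: for a fixed provisioned capacity and a series of
# demand values (integers, e.g. requests/s per hour), total up the idle
# (over-provisioned) capacity and the unserved (under-provisioned) demand.
capacity_report() {
  local capacity="$1"; shift
  local demand idle=0 unserved=0
  for demand in "$@"; do
    if [ "$demand" -lt "$capacity" ]; then
      idle=$((idle + capacity - demand))
    else
      unserved=$((unserved + demand - capacity))
    fi
  done
  echo "idle=$idle unserved=$unserved"
}
```

For example, `capacity_report 100 20 40 150 300 80` prints `idle=160 unserved=250`. With elastic capacity that follows demand, both totals would ideally stay close to zero.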

Service Models

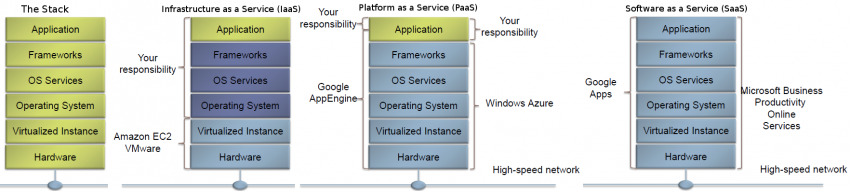

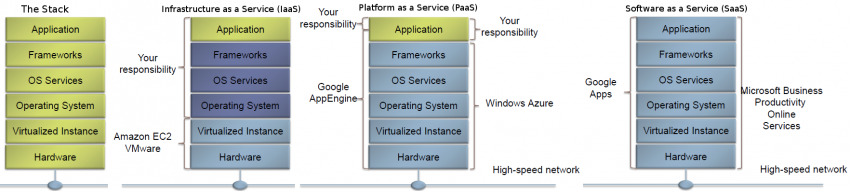

Infrastructure as a Service (IaaS) - Platform as a Service (PaaS) - Software as a Service (SaaS)

Cloud: IaaS - PaaS - SaaS: this figure shows the virtual machines running directly on the hardware (on a bare-metal hypervisor); there is no host operating system underneath the VMs.

Software as a Service (SaaS): The capability provided to the consumer is to use the provider’s applications running on a cloud infrastructure. The applications are accessible from various client devices through either a thin client interface, such as a web browser (e.g., web-based email), or a program interface. The consumer does not manage or control the underlying cloud infrastructure including network, servers, operating systems, storage, or even individual application capabilities, with the possible exception of limited user-specific application configuration settings.

Platform as a Service (PaaS): The capability provided to the consumer is to deploy onto the cloud infrastructure consumer-created or acquired applications created using programming languages, libraries, services, and tools supported by the provider. The consumer does not manage or control the underlying cloud infrastructure including network, servers, operating systems, or storage, but has control over the deployed applications and possibly configuration settings for the application-hosting environment.

Infrastructure as a Service (IaaS): The capability provided to the consumer is to provision processing, storage, networks, and other fundamental computing resources where the consumer is able to deploy and run arbitrary software, which can include operating systems and applications. The consumer does not manage or control the underlying cloud infrastructure but has control over operating systems, storage, and deployed applications; and possibly limited control of select networking components (e.g., host firewalls).
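The three definitions above differ mainly in which layers the consumer controls. The following small lookup makes that split explicit. It is condensed from the definitions above and is not an official provider responsibility matrix. The helper `who_manages` and its layer names are hypothetical. It also simplifies the "possibly limited control" cases (hosting-environment settings in PaaS, host firewalls in IaaS) by counting them as provider-managed.

```bash
#!/usr/bin/env bash
# Hypothetical lookup condensed from the service-model definitions above:
# who manages a given layer under each model. Layers: network, servers, os,
# storage, applications. "Possibly limited control" cases count as provider.
who_manages() {
  local model="$1" layer="$2"
  case "$model:$layer" in
    SaaS:network|SaaS:servers|SaaS:os|SaaS:storage|SaaS:applications) echo provider ;;
    PaaS:applications)                                                 echo consumer ;;
    PaaS:network|PaaS:servers|PaaS:os|PaaS:storage)                    echo provider ;;
    IaaS:os|IaaS:storage|IaaS:applications)                            echo consumer ;;
    IaaS:network|IaaS:servers)                                         echo provider ;;
    *) echo "unknown model or layer: $model/$layer" >&2; return 1 ;;
  esac
}
```

A nested loop over `IaaS PaaS SaaS` and the five layers prints the full table. For example, the OS layer is consumer-managed only under IaaS.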

Deployment Models

Private cloud: The cloud infrastructure is provisioned for exclusive use by a single organization comprising multiple consumers (e.g., business units). It may be owned, managed, and operated by the organization, a third party, or some combination of them, and it may exist on or off premises.

Community cloud: The cloud infrastructure is provisioned for exclusive use by a specific community of consumers from organizations that have shared concerns (e.g., mission, security requirements, policy, and compliance considerations). It may be owned, managed, and operated by one or more of the organizations in the community, a third party, or some combination of them, and it may exist on or off premises.

Public cloud: The cloud infrastructure is provisioned for open use by the general public. It may be owned, managed, and operated by a business, academic, or government organization, or some combination of them. It exists on the premises of the cloud provider.

Hybrid cloud: The cloud infrastructure is a composition of two or more distinct cloud infrastructures (private, community, or public) that remain unique entities, but are bound together by standardized or proprietary technology that enables data and application portability (e.g., cloud bursting for load balancing between clouds).

Azure

https://portal.azure.com/#home

Alibaba Cloud

https://www.alibabacloud.com/

An account has been created using my gmail account

- Pricing: https://www.alibabacloud.com/en/product/ecs?_p_lc=1&spm=5176.ecsnewbuy.0.0.45d11594Q1r4Au&tabIndex=2#pricingFrame

OVH

To access your customer area (the Manager), log in with your customer identifier (NIC) and your password:

https://www.ovh.com/managerv3/index.pl

https://www.ovh.com/manager/web/#/configuration

Your identifier: va266899-ovh

Password: eptpi...

Add a subdomain

Domains > sinfronteras.ws:

- Add an entry:

  - Pointer records: A

  - Sub-domain: wiki.sinfronteras.ws

  - Target: 52.212.210.222

- Add an entry (again) (optional):

  - Pointer records: CNAME

  - Sub-domain: www.wiki.sinfronteras.ws

  - Target: wiki.sinfronteras.ws
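The two entries above correspond to a standard A record and a CNAME alias. The following is a minimal sketch that prints them in BIND zone-file syntax, only to show what the OVH panel creates; the panel builds the records itself. The helper `subdomain_records` is hypothetical. The trailing dots mark fully qualified names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the A record for a sub-domain plus the optional
# "www." CNAME alias pointing at it, in BIND zone-file syntax.
subdomain_records() {
  local fqdn="$1" ipv4="$2"
  printf '%s.\tIN\tA\t%s\n' "$fqdn" "$ipv4"
  printf 'www.%s.\tIN\tCNAME\t%s.\n' "$fqdn" "$fqdn"
}
```

`subdomain_records wiki.sinfronteras.ws 52.212.210.222` prints the two records. Once the change has propagated, `dig +short wiki.sinfronteras.ws` should return the target IP.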

Contabo

https://contabo.com/?show=vps

- CPU: four cores

- Intel® Xeon® E5-2620v3, E5-2630v4 or 4114 processor

- 8 GB RAM (guaranteed)

- 200 GB disk space (100% SSD)

- 58.88 €/year

- You can access your entire customer account at https://my.contabo.com. Please use the credentials below to log in:

- user name: adeloaleman@gmail.com

- password: 001

- Once logged in, you can view all your services, perform hard reboots, reinstalls or boot a rescue system. You can also update your contact details and reverse DNS entries, view your payment history and send new transfers in a comfortable way. Anything you can do at my.contabo.com is free, of course.

- My VPS

- IP address: 62.171.143.243

- server type: VPS S SSD

- VNC IP and port: 144.91.93.73:63025

- VNC password: ***

- user name: ***

- password: ***

- operating system: Ubuntu 18.04 (64 Bit)

- You can access and configure your VPS via SSH (in case of a Linux operating system) or via Remote Desktop (in case of a Windows operating system) using the login details above.

- Additionally, you can connect to your VPS via VNC. This can be handy if, for example, a wrong firewall configuration has made your server unreachable by the normal means. To establish a VNC connection, you need a VNC client such as UltraVNC. Since VNC is not an encrypted protocol, we recommend using SSH or Remote Desktop instead whenever possible. Always remember to log out before you close your VNC session. You can change the VNC password at any time, or disable VNC access altogether, in the customer control panel.

- Each dedicated server and each VPS comes with a /64 IPv6 subnet in addition to its IPv4 address. You can use the addresses of such a subnet freely on the associated server/VPS. IPv6 is already preconfigured on our servers but has to be activated explicitly in some cases. You can find out how to activate IPv6 and further information on the subject in our tutorial: https://contabo.com/?show=tutorials&tutorial=adding-ipv6-connectivity-to-your-server

- IPv6 subnet: 2a02:c207:2034:6715:0000:0000:0000:0001 / 64
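The VPS receives a whole /64, so any address that shares the first four 16-bit groups of the address above belongs to it. The following is a minimal sketch that builds such an address. The helper `subnet_address` is hypothetical. It assumes the address is written in full, uncompressed form, as above, and that the host part is short (at most three groups, e.g. `2` or `abcd:1`). It only builds the string and configures nothing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build an address inside a /64 from a fully written
# IPv6 address (first four groups = the /64 prefix) and a short host part
# of at most three groups, e.g. "2" or "abcd:1".
subnet_address() {
  local addr="$1" host="$2"
  local prefix
  # Keep the first 64 bits: the first four colon-separated groups.
  prefix=$(printf '%s\n' "$addr" | cut -d: -f1-4)
  printf '%s::%s\n' "$prefix" "$host"
}
```

For example, `subnet_address 2a02:c207:2034:6715:0000:0000:0000:0001 2` gives `2a02:c207:2034:6715::2`. Adding it to the server would then be something like `sudo ip -6 addr add 2a02:c207:2034:6715::2/64 dev eth0`, where `eth0` is an assumed interface name; see the Contabo tutorial above for the provider's own steps.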