|

|

| (35 intermediate revisions by the same user not shown) |

| Line 6: |

Line 6: |

| | | | |

| | * [[Media:Network_Management_and_High_Availability-Resume_for_the_exam.pdf]] | | * [[Media:Network_Management_and_High_Availability-Resume_for_the_exam.pdf]] |

| − | ::* There is some virtualization and cloud material in this summary that could be added to the wiki. | + | |

| | + | * [[Media:Interacting_with_the_GCP-CA2_Cloud.pdf]] |

| | + | |

| | + | * [[Media:Designing and Implementing an AWS Cloud Solution.pdf]] |

| | + | |

| | + | * [[Media:PowerShell_and_GoogleCloud.pdf]] |

| | | | |

| | | | |

| | <br /> | | <br /> |

| | | | |

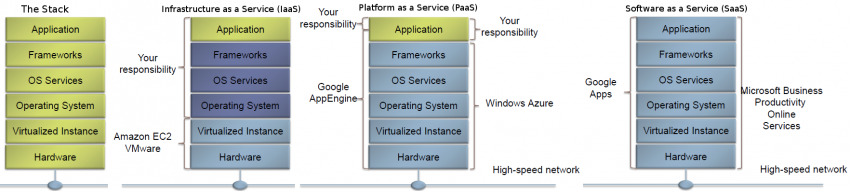

| − | Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models. | + | |

| | + | "Cloud computing" is a term broadly used to define the on-demand delivery of IT resources and applications via the Internet, with pay-as-you-go pricing. [Definition from AWS Academy] |

| | + | |

| | + | |

| | + | Cloud computing is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. This cloud model is composed of five essential characteristics, three service models, and four deployment models. [This is another common definition, somewhat more complete and complex] |

| | | | |

| | | | |

| | <br /> | | <br /> |

| − | ==CA1 - Case study - Migration of on-premise network onto a public Cloud provider== | + | ==Case study - Migration of an on-premise network onto a public Cloud provider== |

| | CA1 - Physical Server Hardware Research / Case study of one company that successfully migrated their on-premise network onto a public Cloud provider | | CA1 - Physical Server Hardware Research / Case study of one company that successfully migrated their on-premise network onto a public Cloud provider |

| | | | |

| Line 97: |

Line 106: |

| | | | |

| | <br /> | | <br /> |

| − | ==Containers== | + | ==[[AWS]]== |

| − | https://www.cio.com/article/2924995/software/what-are-containers-and-why-do-you-need-them.html

| |

| − | https://cloud.google.com/containers/

| |

| − | | |

| − | Containers are a solution to the problem of how to get software to run reliably when moved from one computing environment to another. This could be from a developer's laptop to a test environment, from a staging environment into production, and perhaps from a physical machine in a data center to a virtual machine in a private or public cloud.

| |

| − | | |

| − | Problems arise when the supporting software environment is not identical. For example: "You're going to test using Python 2.7, and then it's going to run on Python 3 in production and something weird will happen. Or you'll rely on the behavior of a certain version of an SSL library and another one will be installed. You'll run your tests on Debian and production is on Red Hat and all sorts of weird things happen."

| |

| − | | |

| − | And it's not just different software that can cause problems, he added. "The network topology might be different, or the security policies and storage might be different but the software has to run on it."

| |

| − | | |

| − | '''How do containers solve this problem?'''

| |

| − | Put simply, a container consists of an entire runtime environment: an application, plus all its dependencies, libraries and other binaries, and configuration files needed to run it, bundled into one package. By containerizing the application platform and its dependencies, differences in OS distributions and underlying infrastructure are abstracted away.

| |

| − | | |

| − | Containers offer a logical packaging mechanism in which applications can be abstracted from the environment in which they actually run.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ==Google Cloud Platform==

| |

| − | https://console.cloud.google.com

| |

| − | | |

| − | '''To see the Amount remaining of the Promotion value of 243.35:'''

| |

| − | Main Menu (Hamburger) > Billing > Overview

| |

| − | | |

| − | | |

| − | The first thing to do is create a project. There is usually a default project named «My First Project». To create a new project, go to the tab right next to the Main Menu (Hamburger).

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Pricing===

| |

| − | * 1 shared vCPU, 0.6 GB RAM, 50GB Storage: 70.56 $/year

| |

| − | * 1 shared vCPU, 1.7 GB RAM, 50GB Storage: 189.6 $/year
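
To compare the two yearly prices above on a monthly basis, a small sketch like the following can help. The function name `monthly_cost` is only illustrative, and the figures are the ones listed above, not current GCP prices.

```shell
#!/bin/sh
# Convert a yearly price (USD) into a monthly price, rounded to two decimals.
# monthly_cost is a hypothetical helper name, not part of any Google tool.
monthly_cost() {
  awk -v yearly="$1" 'BEGIN { printf "%.2f\n", yearly / 12 }'
}

monthly_cost 70.56   # 1 shared vCPU, 0.6 GB RAM, 50GB storage
monthly_cost 189.6   # 1 shared vCPU, 1.7 GB RAM, 50GB storage
```

The same function can be reused for any other yearly figure taken from the pricing calculator.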

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===CA2-Interacting with the GCP===

| |

| − | [[Media:Interacting_with_the_GCP-CA2_Cloud.pdf]] | |

| − | | |

| − | # Creating a storage bucket. Uploading items from a host computer using the command line and the GUI and place them into the bucket

| |

| − | ## Using the command line

| |

| − | ## Using the GUI

| |

| − | # Upload items from the bucket to a Linux virtual machine using the Google CLI

| |

| − | # Creation of a Linux VM, installing Apache and uploading the web page to the web site

| |

| − | # Create a Linux VM, install NGINX and upload the web page to the web site

| |

| − | # Create a Windows VM, install IIS and upload the web page to the web site

| |

| − | # Live Migration of a VirtualBox VM to the GCP

| |

| − | # Explain what Live Migration is and identify situations where DigiTech could benefit from it

| |

| − | # Research topic: Other services available from Google’s Cloud Launcher

| |

| − | ## Python tutorial

| |

| − | # Challenging research topic - GCSFUSE: It allows you to mount a bucket to a Debian Linux virtual machine

| |

| − | ## Installing Cloud Storage FUSE and its dependencies

| |

| − | ## Mounting a Google Cloud Storage Bucket as a local disk

| |

| − | ## Change the root directory of an apache server

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Cloud SDK: Command-line interface for Google Cloud Platform===

| |

| − | https://cloud.google.com/sdk/

| |

| − | | |

| − | The Cloud SDK is a set of tools for Cloud Platform. It contains [https://cloud.google.com/sdk/gcloud/reference/ '''gcloud'''], [https://cloud.google.com/storage/docs/gsutil '''gsutil'''], and [https://cloud.google.com/bigquery/docs/bq-command-line-tool '''bq'''], which you can use to access Google Compute Engine, Google Cloud Storage, Google BigQuery, and other products and services from the command-line. You can run these tools interactively or in your automated scripts. A comprehensive guide to gcloud can be found in [https://cloud.google.com/sdk/gcloud/ '''gcloud Overview'''].

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Installation====

| |

| − | The installation process is described in detail at https://cloud.google.com/sdk/

| |

| − | | |

| − | I managed to install it correctly using the option Install for Debian / Ubuntu: https://cloud.google.com/sdk/docs/quickstart-debian-ubuntu

| |

| − | | |

| − | The general installation procedure for Linux (https://cloud.google.com/sdk/docs/quickstart-linux) produced errors on my system and the installation could not be completed.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Initialize the SDK====

| |

| − | https://cloud.google.com/sdk/docs/quickstart-linux

| |

| − | | |

| − | After installing the SDK, use the '''gcloud init''' command to perform several common SDK setup tasks. These include:

| |

| − | * Specifying the user account (adeloaleman@gmail.com): authorizing the SDK tools to access Google Cloud Platform using your user account credentials

| |

| − | * Setting up the default SDK configuration:

| |

| − | * The project (created in the GCP) that we will access by default

| |

| − | * ...

| |

| − | | |

| − | gcloud init

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Crear una VM Instance===

| |

| − | Main Menu (Hamburger) > Compute Engine > VM instances:

| |

| − | * Create Instance

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Linux VM Instance====

| |

| − | * '''Name:''' debian9-1

| |

| − | * '''Zone:''' The logical choice is the zone where you are located, although choosing another one will not make much difference (the price varies depending on the zone)

| |

| − | : We will choose the first West Europe zone offered: '''europe-west4-a'''

| |

| − | * '''Machine type:''' This is customizable. The default specifications are:

| |

| − | : 1vCPU / 3.75 GB memory

| |

| − | : The most basic specifications are:

| |

| − | :: micro (1 shared vCPU, 0.6 GB memory) (the price drops considerably with this option; it may be enough for Linux)

| |

| − | * '''Container:''' Deploy a container image to this VM instance.

| |

| − | : See [[Cloud Computing#Containers|Containers]]

| |

| − | : For now, this option does not need to be enabled for our testing purposes.

| |

| − | * '''Boot disk:'''

| |

| − | : OS: The default option is Debian GNU/Linux 9 (stretch). We will be working with this one.

| |

| − | : Disk size: 10 GB is the default option for Linux.

| |

| − | * '''Identity and API access :'''

| |

| − | : Service account: This option is set to "Compute Engine Default Service Account".

| |

| − | : Access scopes:

| |

| − | :* Allow default access: Enabled by default...

| |

| − | :* Allow full access to all Cloud APIs

| |

| − | ::* <span style="background:#00FF00">It is advisable to select this option.</span> If it is not selected, it will not be possible, for example, to copy files from a VM instance to a bucket like this:

| |

| − | <blockquote>

| |

| − | <blockquote>

| |

| − | <syntaxhighlight lang="bash">

| |

| − | gsutil cp file.txt gs://adelostorage/

| |

| − | </syntaxhighlight>

| |

| − | </blockquote>

| |

| − | </blockquote>

| |

| − | ::* <span style="background:#00FF00">Another way to allow copying files from the VM to a bucket is to configure the "Service account". We must choose a Service Account that has previously been configured to allow this kind of action. See [[Cloud Computing#Service Accounts|Service Accounts]] for more details</span>

| |

| − | :* Set access for each API

| |

| − | * '''Firewall:'''

| |

| − | : Add tags and firewall rules to allow specific network traffic from the Internet

| |

| − | :* Allow HTTP traffic: We will enable this option so that the web server on the VM can be reached over HTTP (port 80).

| |

| − | :* Allow HTTPS traffic: We will enable this option so that the web server on the VM can be reached over HTTPS (port 443).

| |

| − | * '''Management, disks, networking, SSH keys:''' This link offers many other configuration options. We will leave everything at its default for now.
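
The console choices above can probably also be expressed as a single `gcloud` call. The following is only a sketch under some assumptions: `f1-micro` is taken to be the "micro" machine type, `debian-9` / `debian-cloud` the image family and project for Debian 9, `cloud-platform` the scope behind "Allow full access to all Cloud APIs", and `http-server` / `https-server` the tags behind the two firewall checkboxes. The `run` wrapper is a hypothetical helper that only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# run: hypothetical dry-run wrapper. Prints the command by default;
# executes it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Assumed CLI equivalent of the console settings listed above.
run gcloud compute instances create debian9-1 \
  --zone=europe-west4-a \
  --machine-type=f1-micro \
  --image-family=debian-9 --image-project=debian-cloud \
  --boot-disk-size=10GB \
  --scopes=cloud-platform \
  --tags=http-server,https-server
```

Review the printed command first, then run the script again with `DRY_RUN=0` to actually create the instance.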

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Create storage===

| |

| − | Create a storage bucket:

| |

| − | | |

| − | Main Menu > Storage > Browser:

| |

| − | * Create bucket:

| |

| − | ** '''Name:''' mi_storage-1

| |

| − | ** '''Default storage class:''' We will keep the default option: Multi-Regional

| |

| − | ** '''Location:''' Europe
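
The same bucket can presumably be created from the command line with `gsutil mb`. This sketch assumes that `multi_regional` and `EU` are the CLI values matching the console choices "Multi-Regional" and "Europe"; the `run` wrapper is a hypothetical helper that prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# run: hypothetical dry-run wrapper. Prints the command by default;
# executes it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

BUCKET="mi_storage-1"   # bucket name used in the console example above

# Create the bucket with the assumed storage class and location.
run gsutil mb -c multi_regional -l EU "gs://$BUCKET"

# List the buckets of the current project to confirm it exists.
run gsutil ls
```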

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Connecting to Instances===

| |

| − | https://cloud.google.com/compute/docs/instances/connecting-to-instance

| |

| − | | |

| − | Compute Engine provides tools to manage your SSH keys and help you connect to either Linux or Windows Server instances.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Connecting to Linux instances====

| |

| − | You can connect to Linux instances through either:

| |

| − | * The Google Cloud Platform Console

| |

| − | * The gcloud command-line tool.

| |

| − | * Connecting using third-party tools

| |

| − | | |

| − | Compute Engine generates an SSH key for you and stores it in one of the following locations:

| |

| − | * By default, Compute Engine adds the generated key to project or instance metadata.

| |

| − | * If your account is configured to use OS Login, Compute Engine stores the generated key with your user account.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====The Google Cloud Platform Console=====

| |

| − | This is a console that belongs to the GCP and is accessed through a browser window. To open it:

| |

| − | * In the GCP (Google Cloud Platform) Console, go to the VM Instances page.

| |

| − | * In the list of virtual machine instances, click SSH in the row of the instance that you want to connect to.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====The gcloud command-line tool=====

| |

| − | With the '''gcloud''' command (included in the Cloud SDK) we can access the VM Instance from the terminal (console) of our own computer.

| |

| − | gcloud compute ssh [INSTANCE_NAME]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====Connecting using third-party tools=====

| |

| − | https://cloud.google.com/compute/docs/instances/connecting-advanced#thirdpartytools

| |

| − | | |

| − | See [[Linux#SSH]]

| |

| − | | |

| − | We can also access a VM Instance without using GCP tools such as the Google Cloud Platform Console or gcloud. Instead, we can use '''SSH''' directly.

| |

| − | | |

| − | Right after creating a VM Instance, we cannot access it through SSH because no authentication method is available yet. As explained in [[Linux#SSH]], there are two approaches to authentication when using SSH:

| |

| − | | |

| − | * '''Using the password of the Linux account:'''

| |

| − | :* Google Cloud VM Instances are created without a password by default. In addition, their default configuration disables password authentication; that is, the file «/etc/ssh/sshd_config» contains the setting «PasswordAuthentication no».

| |

| − | :* So we first need to find another way to access the GC VM in order to:

| |

| − | ::# Set «PasswordAuthentication yes»

| |

| − | ::# Set a password for user@VMInstance

| |

| − | | |

| − | * '''SSH Keys:''' To authenticate the connection with this method, we first have to copy the «public key» of our computer to the Google Cloud VM Instances.

| |

| − | :* As explained in [[Linux#SSH]], to do this with the «ssh-copy-id» command we first have to set a password for user@VMInstance and enable password authentication as explained above.

| |

| − | :* So, again, we first need to find another way to access the GC VM in order to copy the «public key».

| |

| − | | |

| − | | |

| − | So, to connect to the GC VM and make the configuration changes needed to allow SSH connections, we can use the «gcloud» command as we have already seen:

| |

| − | gcloud compute ssh [INSTANCE_NAME]

| |

| − | | |

| − | On the first connection through «gcloud», the command generates the keys needed for the connection:

| |

| − | | |

| − | [[File:SSH_google_keys.png|900px|thumb|center|]]

| |

| − | | |

| − | | |

| − | <span style="background:#D8BFD8">What I have observed is that, after creating the GC VM, if I try to log in with «ssh» before having logged in with «gcloud», access is not possible because there is no authentication method. However, if I first connect with «gcloud», I can then log in with «ssh» without any further configuration or copying the «public key». I assume this is because, as the image above shows, «gcloud» has created the keys and copied the «public key» to the destination machine, and apparently these same keys work with a normal ssh connection (without going through «gcloud»). I have verified this by connecting from my computer to the GC VM.</span> <span style="color:#FFF;background:#FF0000">However, following the same procedure I tried to connect between two GC VM Instances and could not establish the SSH connection. I solved the problem, following advice from a forum, by deleting all files in «~/.ssh/» except «known_hosts», generating new keys (with «ssh-keygen») and copying the new «public key» to the corresponding GC VM, following the procedures in [[Linux#SSH]]</span>.
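
A plain `ssh` connection after the first `gcloud compute ssh` might look like the sketch below. It assumes that gcloud stored its generated private key at `~/.ssh/google_compute_engine` and that the instance has an external IP; `EXTERNAL_IP` is a placeholder to be replaced with the address printed by the first command, and the instance name and zone are the ones from the example above. The `run` wrapper is a hypothetical helper that prints commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# run: hypothetical dry-run wrapper. Prints the command by default;
# executes it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

INSTANCE="debian9-1"
ZONE="europe-west4-a"

# Look up the external IP of the instance.
run gcloud compute instances describe "$INSTANCE" --zone "$ZONE" \
  --format='get(networkInterfaces[0].accessConfigs[0].natIP)'

# Connect with plain ssh, pointing it at the key created by gcloud.
# EXTERNAL_IP is a placeholder, not a real address.
run ssh -i "$HOME/.ssh/google_compute_engine" "${USER:-user}@EXTERNAL_IP"
```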

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Copy files===

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====From the host computer to a bucket on Google Cloud====

| |

| − | After creating the bucket, we can copy files to it. There are different ways to do this:

| |

| − | * Simple drag and drop

| |

| − | * Using the Google Cloud terminal

| |

| − | * From the computer's terminal after installing the Cloud SDK

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====From the computer's terminal after installing the Cloud SDK=====

| |

| − | The following command copies a file from my computer to a bucket on GC:

| |

| − | gsutil cp probando gs://adelostorage

| |

| − | Where adelostorage is the name of the bucket.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====From the host computer to a Linux VM Instance or between VM's====

| |

| − | https://cloud.google.com/sdk/gcloud/reference/compute/scp

| |

| − | | |

| − | To copy a remote directory, ~/narnia, from example-instance to the ~/wardrobe directory of your local host, run:

| |

| − | gcloud compute scp --recurse example-instance:~/narnia ~/wardrobe

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====From a bucket to a VM Instance====

| |

| − | After logging in to a VM Instance, I can copy a file from a bucket to that VM Instance with the following command:

| |

| − | gsutil cp gs://adelostorage/probando.txt .

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Accessing Cloud Storage buckets===

| |

| − | I have been looking for a way to access my buckets that makes it possible to share a bucket with other people:

| |

| − | * Through a URL

| |

| − | * Through an IP address

| |

| − | | |

| − | The following links explain how to make the bucket public and how to access it through a URL:

| |

| − | * https://cloud.google.com/storage/docs/access-control/making-data-public

| |

| − | * https://cloud.google.com/storage/docs/access-public-data

| |

| − | | |

| − | I thought those links described the solution. But I believe this only makes the bucket public for all users of a project, because the URL I think they refer to is:

| |

| − | * https://console.cloud.google.com/storage/BUCKET_NAME

| |

| − | * In my case: https://console.cloud.google.com/storage/browser/sinfronterasbucket1

| |

| − | | |

| − | and that link would only give access to my bucket while logged in to my account.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Connecting your domain to a Cloud Storage Bucket====

| |

| − | What I did find is that a bucket can be associated with a domain that we own. For example, I am going to associate the following subdomain with a bucket:

| |

| − | * www.es.sinfronteras.ws

| |

| − | * https://console.cloud.google.com/storage/browser/www.es.sinfronteras.ws

| |

| − | | |

| − | The following link explains how to connect your domain to Cloud Storage and host a static website in the bucket: https://cloud.google.com/storage/docs/hosting-static-website

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Mounting Cloud Storage buckets as file systems===

| |

| − | Cloud Storage FUSE: Mounting Cloud Storage buckets as file systems on Linux or OS X systems:

| |

| − | https://cloud.google.com/storage/docs/gcs-fuse

| |

| − | | |

| − | Cloud Storage FUSE is an open source FUSE adapter that allows you to mount Cloud Storage buckets as file systems on Linux or OS X systems.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Installing Cloud Storage FUSE and its dependencies====

| |

| − | https://github.com/GoogleCloudPlatform/gcsfuse/blob/master/docs/installing.md

| |

| − | | |

| − | * Add the gcsfuse distribution URL as a package source and import its public key:

| |

| − | <blockquote>

| |

| − | <syntaxhighlight lang="bash">

| |

| − | export GCSFUSE_REPO=gcsfuse-`lsb_release -c -s`

| |

| − | echo "deb http://packages.cloud.google.com/apt $GCSFUSE_REPO main" | sudo tee /etc/apt/sources.list.d/gcsfuse.list

| |

| − | curl https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

| |

| − | </syntaxhighlight>

| |

| − | </blockquote>

| |

| − | | |

| − | * Update the list of packages available and install gcsfuse:

| |

| − | <blockquote>

| |

| − | <syntaxhighlight lang="bash">

| |

| − | sudo apt-get update

| |

| − | sudo apt-get install gcsfuse

| |

| − | </syntaxhighlight>

| |

| − | </blockquote>

| |

| − | | |

| − | * (Ubuntu before wily only) Add yourself to the fuse group, then log out and back in:

| |

| − | <blockquote>

| |

| − | <syntaxhighlight lang="bash">

| |

| − | sudo usermod -a -G fuse $USER

| |

| − | exit

| |

| − | </syntaxhighlight>

| |

| − | </blockquote>

| |

| − | | |

| − | * Future updates to gcsfuse can be installed in the usual way:

| |

| − | <blockquote>

| |

| − | <syntaxhighlight lang="bash">

| |

| − | sudo apt-get update && sudo apt-get upgrade

| |

| − | </syntaxhighlight>

| |

| − | </blockquote>

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Set up credentials for Cloud Storage FUSE====

| |

| − | Cloud Storage FUSE auto-discovers credentials based on application default credentials:

| |

| − | * If you are running on a Google Compute Engine instance with scope storage-full configured, then Cloud Storage FUSE can use the Compute Engine built-in service account. For more information, see Using Service Accounts with Applications.

| |

| − | ** <span style="background:#D8BFD8">If a Google Compute Engine Instance is being used, nothing has to be done to mount the bucket. However, if the Access scopes of that Instance were not configured correctly, we will not be able to copy files from the Instance to the mounted bucket. See in the [[Cloud Computing#Crear una VM Instance|creation of the VM Instance]] that it is advisable to choose "Allow full access to all Cloud APIs" or to choose a Service Account that has previously been configured to allow this kind of action. See [[Cloud Computing#Service Accounts|Service Accounts]] for more details.

| |

| − | </span>

| |

| − | | |

| − | * If you installed the Google Cloud SDK and ran gcloud auth application-default login, then Cloud Storage FUSE can use these credentials.

| |

| − | | |

| − | * If you set the environment variable GOOGLE_APPLICATION_CREDENTIALS to the path of a service account's JSON key file, then Cloud Storage FUSE will use this credential. For more information about creating a service account using the Google Cloud Platform Console, see Service Account Authentication.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Mounting a Google Cloud Storage Bucket as a local disk====

| |

| − | * Create a directory:

| |

| − | mkdir /path/to/mount

| |

| − | | |

| − | * Create the bucket you wish to mount, if it doesn't already exist, using the Google Cloud Platform Console.

| |

| − | | |

| − | * Use Cloud Storage FUSE to mount the bucket (e.g. example-bucket).

| |

| − | gcsfuse example-bucket /path/to/mount

| |

| − | <blockquote>

| |

| − | <span style="background:#D8BFD8">

| |

| − | It is very important to note that if we mount the bucket as shown above, we will not be able to access it through the web browser. That is, the web server will not have permission to access this directory.</span>

| |

| − | | |

| − | <span style="background:#D8BFD8">

| |

| − | This is a problem if we want to place a web site in the bucket, to be accessed through the VM Instance.</span>

| |

| − | | |

| − | <span style="background:#D8BFD8">

| |

| − | In this case, we must mount it as follows:</span>

| |

| − | https://www.thedotproduct.org/posts/mounting-a-google-cloud-storage-bucket-as-a-local-disk.html

| |

| − | <syntaxhighlight lang="bash">

| |

| − | sudo gcsfuse -o noatime -o noexec --gid 33 --implicit-dirs -o ro -o nosuid -o nodev --uid 33 -o allow_other mi_storage1 /path/directory/

| |

| − | </syntaxhighlight>

| |

| − | '''Explaining the mount options:'''

| |

| − | * ro - mount the volume in read-only mode

| |

| − | * uid=33,gid=33 - grant access to user-id and group-id 33 (usually the web server user, e.g. www-data):

| |

| − | ** <span style="background:#00FF00">Your OS has a web server user whose user-id (UID) and group-id (GID) are 33</span>

| |

| − | <blockquote>

| |

| − | To display the web server user-id (UID) and group-id (GID): http://www.digimantra.com/linux/find-users-uid-gid-linux-unix/

| |

| − | <syntaxhighlight lang="bash">

| |

| − | id -u www-data

| |

| − | id -g www-data</syntaxhighlight>

| |

| − | </blockquote>

| |

| − | * noatime - don't update access times of files

| |

| − | * _netdev - mark this as a network volume so the OS knows that the network needs to be available before it'll try to mount

| |

| − | * noexec - don't allow execution of files in the mount

| |

| − | * user - allow non-root users to mount the volume

| |

| − | * implicit_dirs - treat object paths as if they were directories - this is critical and Fuse-specific

| |

| − | * allow_other - allow users other than the one who mounted the file system to access it (FUSE-specific)

| |

| − | </blockquote>
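
Rather than hard-coding 33, the UID and GID of the web server user could be looked up when the mount command is built. This is only a sketch: `mount_bucket_cmd` is a hypothetical helper that prints the command for review instead of running it, and the options are the ones from the command shown above.

```shell
#!/bin/sh
# mount_bucket_cmd: hypothetical helper. Prints a gcsfuse mount command
# for BUCKET at MOUNT_POINT, owned by WEB_USER. It does not run gcsfuse.
mount_bucket_cmd() {
  bucket="$1"
  mount_point="$2"
  web_user="$3"
  # Resolve the numeric IDs of the web server user; fail if it is unknown.
  uid=$(id -u "$web_user") || return 1
  gid=$(id -g "$web_user") || return 1
  echo "sudo gcsfuse --uid $uid --gid $gid --implicit-dirs" \
       "-o ro -o noatime -o noexec -o nosuid -o nodev -o allow_other" \
       "$bucket $mount_point"
}

# Bucket and path from the example above; www-data is assumed to exist.
mount_bucket_cmd mi_storage1 /path/directory/ www-data ||
  echo "user www-data not found on this system" >&2
```

Printing the command first makes it easy to check the resolved IDs before mounting anything.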

| |

| − | | |

| − | * To unmount the bucket:

| |

| − | fusermount -u /home/shared/local_folder/

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Firewall Rules===

| |

| − | '''How to open a specific port such as 9090 in Google Compute Engine:'''

| |

| − | | |

| − | https://cloud.google.com/vpc/docs/using-firewalls

| |

| − | | |

| − | https://stackoverflow.com/questions/21065922/how-to-open-a-specific-port-such-as-9090-in-google-compute-engine

| |

| − | | |

| − | By default, only port tcp:80 is open for web traffic; the other ports are blocked by the firewall. If we configure, for example, a web server (Apache, NGINX) on a port other than 80, we must modify the Firewall Rules so that the web server can serve pages through that port.

| |

| − | | |

| − | '''To open a port:'''

| |

| − | * Choose your project: next to the Main Menu (Hamburger)

| |

| − | * Then, go to: Hamburger > VPC network > Firewall rules:

| |

| − | ** Create a firewall rule

| |

| − | | |

| − | Then follow the steps described at https://cloud.google.com/vpc/docs/using-firewalls

| |

| − | With the following settings (everything else left at its default) I was able to do it:

| |

| − | * Targets

| |

| − | ** All instances in the network

| |

| − | * Source IP ranges:

| |

| − | ** If you want it to apply to all ranges, specify 0.0.0.0/0

| |

| − | * Specified protocols and ports:

| |

| − | ** tcp:8080 (to open port 8080)
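
The console settings above should correspond roughly to the following `gcloud` call. This is a sketch under assumptions: the rule name `allow-tcp-8080` is made up for illustration, the `default` network is assumed, and omitting target tags is taken to mean "All instances in the network". The `run` wrapper is a hypothetical helper that prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# run: hypothetical dry-run wrapper. Prints the command by default;
# executes it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Open tcp:8080 to all source ranges on the assumed default network.
run gcloud compute firewall-rules create allow-tcp-8080 \
  --network=default \
  --direction=INGRESS \
  --allow=tcp:8080 \
  --source-ranges=0.0.0.0/0
```

Opening a port to 0.0.0.0/0 exposes it to the whole Internet, so a narrower source range is preferable when the clients are known.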

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Service Accounts===

| |

| − | A service account is a special account whose credentials you can use in your application code to access other Google Cloud Platform services.

| |

| − | | |

| − | Creating and Managing Service Accounts:

| |

| − | https://cloud.google.com/iam/docs/creating-managing-service-accounts#creating_a_service_account

| |

| − | | |

| − | Creating and Enabling Service Accounts for Instances:

| |

| − | https://cloud.google.com/compute/docs/access/create-enable-service-accounts-for-instances#createanewserviceaccount

| |

| − | | |

| − | Granting Roles to Service Accounts:

| |

| − | https://cloud.google.com/iam/docs/granting-roles-to-service-accounts

| |

| − | | |

| − | For example, to copy files from a VM Instance to a bucket, you need to set up a new instance to run as a service account. That service account must have been granted the particular roles that allow the copy operation.

| |

| − | | |

| − | I could not grant the roles from the command line because it produced an error. I was, however, able to do it from the GCP web page. For the copy operation described above, the roles related to Storage must be added to the service account the VM runs as:

| |

| − | * Storage Admin

| |

| − | * Storage Object Admin

| |

| − | * Storage Object Creator

| |

| − | * Storage Object Viewer
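
For reference, a command-line attempt at granting these roles might look like the sketch below. It is not the procedure that worked here (the console was used instead). `PROJECT_ID` and `SA_EMAIL` are placeholder values, the role IDs are assumed to be the identifiers behind the four console names above, and `run` is a hypothetical wrapper that prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# run: hypothetical dry-run wrapper. Prints the command by default;
# executes it only when DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Placeholder values: replace with the real project ID and service account.
PROJECT_ID="my-project-id"
SA_EMAIL="my-service-account@my-project-id.iam.gserviceaccount.com"

# Bind each Storage role to the service account at project level.
for role in roles/storage.admin roles/storage.objectAdmin \
            roles/storage.objectCreator roles/storage.objectViewer; do
  run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member="serviceAccount:$SA_EMAIL" \
    --role="$role"
done
```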

| |

| − | | |

| − | After creating the Service Account, when creating the VM Instance we can set the new instance to run as this service account (see ).

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Live Migration of a Virtual Machine===

| |

| − | * The VirtualBox machine that you migrate should be using a VMDK (Virtual Machine Disk), not VDI (VirtualBox Disk Image) or VHD (Virtual Hard Disk).

| |

| − | * Make sure the NIC (Network Interface Controller) (Network > Adapter 1 > Attached to) is set to NAT so that you have Internet access.

| |

| − | * <span style="background:#00FF00">It is very important to note that, in order to connect (through gcloud compute ssh VMmigrada) to the VM Instance after migrating it, the '''openssh-server''' package must have been installed on the VM beforehand.</span>

| |

| − | | |

| − | '''To perform the migration:'''

| |

| − | * '''In the GCP'''

| |

| − | ** Main Menu > Compute engine > VM Instances: Import VM

| |

| − | ** There you will be redirected to the Cloud Endure Platform (VM Migration Service)

| |

| − | | |

| − | | |

| − | * '''In the Cloud Endure Platform (VM Migration Service)'''

| |

| − | : https://gcp.cloudendure.com

| |

| − | :* <span style="background:#D8BFD8">'''GCP credentials'''</span>

| |

| − | :: '''We need to create GCP credentials (Google Cloud Platform JSON private key (JSON file))'''. To generate a JSON private key file (it could also be in PKCS12 format) (I think these credentials would change only if we want to migrate to another project):

| |

| − | ::* Click "Find out where to get these credentials"

| |

| − | ::* This opens the Documentation page with a link to "Open the link of Credentials"

| |

| − | ::* Then, you need to choose the project: the target project to which your VM will be migrated.

| |

| − | ::* Copy the Project ID because we will need it later in the Cloud Endure Platform

| |

| − | ::* Click "Create credentials" and Select "Service Account Key" option

| |

| − | ::* Select the JSON option (for key type)

| |

| − | ::* The service account should be "Compute engine default service account"

| |

| − | ::* Then hit create: the JSON file will be downloaded to your computer.

| |

| − | ::* Then go back to the Cloud Endure Platform (VM Migration Service)

| |

| − | ::* Enter the Google Cloud Platform project ID

| |

| − | ::* Then upload the JSON file (Google Cloud Platform JSON private key). Then "Save".

| |

| − | | |

| − | ::* <span style="background:#00FF00">This is the JSON file I created in my GC account when I migrated my VM:</span> [[Media:ProyectoAdelo2017279-78811cb38326.json]]

| |

| − | | |

| − | :* <span style="background:#D8BFD8">'''Replication settings'''</span>

| |

| − | ::* Live migration target: Google EU West 2 (London)

| |

| − | ::* Hit Save replication settings

| |

| − | ::* After this, the platform shows: "Project setup complete. Congratulations!"

| |

| − | :::* Your Live Migration Project is set up. So, the next step is to install the CloudEndure Agent on your Source machine.

| |

| − | | |

| − | | |

| − | :* <span style="background:#D8BFD8">'''Install the CloudEndure Agent on your Source machine'''</span>

| |

| − | :: How To Add Machines: https://gcp.cloudendure.com/#/project/2be028cc-40ae-4215-ad2c-52e3ce4e325d/machines

| |

| − | :: To add a machine to the GCP, install the CloudEndure Agent on your machine (your VirtualBox VM, for example). Data replication (the process of transferring the VM) begins automatically once the CloudEndure Agent is installed.

| |

| − | | |

| − | <blockquote>

| |

| − | Your Agent installation token: (this code is generated automatically by the Cloud Endure Platform (VM Migration Service). I don't know whether it is different for each migration. I think it may depend on the project, in which case it would change if we wanted to migrate a VM to another project.)

| |

| − | <syntaxhighlight lang="bash">

| |

| − | 0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6

| |

| − | </syntaxhighlight>

| |

| − | | |

| − | '''For Linux machines:'''

| |

| − | | |

| − | Download the Installer:

| |

| − | | |

| − | <syntaxhighlight lang="bash">

| |

| − | wget -O ./installer_linux.py https://gcp.cloudendure.com/installer_linux.py

| |

| − | </syntaxhighlight>

| |

| − | | |

| − | <span style="background:#00FF00">Before running the next step we had to install:</span>

| |

| − | <syntaxhighlight lang="sh">

| |

| − | sudo apt-get install python2.7

| |

| − | sudo apt-get install gcc

| |

| − | </syntaxhighlight>

| |

| − | | |

| − | Then run the Installer and follow the instructions:

| |

| − | <syntaxhighlight lang="bash">

| |

| − | sudo python ./installer_linux.py -t 0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6 --no-prompt

| |

| − | </syntaxhighlight>
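When the agent has to be installed on several machines, the installer command can be assembled programmatically so that a mistyped token is caught before anything runs. This is only a sketch: the token pattern (16 groups of 4 hexadecimal characters) is an assumption inferred from the example token above, and `build_installer_command` is a hypothetical helper. The `-t` and `--no-prompt` flags are the ones shown in the command above.

```python
import re

# Assumption based on the example token: 16 dash-separated groups of 4 hex characters.
TOKEN_PATTERN = re.compile(r"^[0-9A-F]{4}(-[0-9A-F]{4}){15}$")


def build_installer_command(token, installer="./installer_linux.py"):
    """Return the argv list for the Linux installer, rejecting malformed tokens."""
    if not TOKEN_PATTERN.match(token):
        raise ValueError("token does not look like an agent installation token")
    return ["sudo", "python", installer, "-t", token, "--no-prompt"]


if __name__ == "__main__":
    placeholder_token = "-".join(["ABCD"] * 16)  # not a real token
    print(" ".join(build_installer_command(placeholder_token)))
```

The returned list can be passed to `subprocess.run` on the source machine; it is printed here rather than executed.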

| |

| − | | |

| − | '''For Windows machines:'''

| |

| − | | |

| − | Download the Windows installer here https://gcp.cloudendure.com/installer_win.exe, then launch as follows:

| |

| − | | |

| − | <syntaxhighlight lang="bash">

| |

| − | installer_win.exe -t 0E65-51C9-50EE-E20E-A583-9709-1653-BA57-457D-92BF-57B9-0DE2-22DC-EA11-3B80-81E6 --no-prompt

| |

| − | </syntaxhighlight>

| |

| − | | |

| − | </blockquote>

| |

| − | | |

| − | | |

| − | :* <span style="background:#D8BFD8">'''Data replication'''</span>

| |

| − | ::* Data replication begins automatically once the installation of the CloudEndure Agent is completed. You will be able to see the progress on the Cloud Endure Platform (VM Migration Service)

| |

| − | ::* Data replication is in fact the process by which the VM is copied to the GCP.

| |

| − | | |

| − | | |

| − | :* <span style="background:#D8BFD8">'''Launch target Machine'''</span>

| |

| − | ::* Once data replication has completed, an instance will have been created on the VM Instances page of the corresponding project in the GCP. This instance, whose name contains "replicator", is not the final VM instance. We must then click "Launch target Machine" (still in the Cloud Endure Platform (VM Migration Service)), which creates the VM instance on the corresponding GCP page. There will then be two instances on the GCP page. In my case:

| |

| − | :::* ce-replicator-goo16-20eb5f29

| |

| − | :::* ubuntu-18932dd6

| |

| − | | |

| − | ::* <span style="background:#00FF00">If we make changes to our local VirtualBox VM, we can easily update the corresponding Google VM instance by clicking "Launch target Machine" again.</span> I think ce-replicator-goo16-20eb5f29 plays a role in this update.

| |

| | | | |

| | | | |

| | <br /> | | <br /> |

| − | ===Live migration of a desktop computer=== | + | ==[[Google Cloud]]== |

| − | Migrating your computer to Google Cloud using Cloud Endure

| |

| | | | |

| | | | |

| | <br /> | | <br /> |

| − | ===Create Bitnami WordPress Site on Google Cloud=== | + | ==Azure== |

| − | [[Media:Create_Bitnami_WordPress_Site_on_Google_Cloud-Michael2018.pdf]]

| + | https://portal.azure.com/#home |

| | | | |

| | | | |

| | <br /> | | <br /> |

| − | ===Setting up IIS web site on Google cloud=== | + | ==Alibaba Cloud== |

| − | [[Media:Setting_up_IIS_web_site_on_Google_cloud-Michael2018.pdf]]

| + | https://www.alibabacloud.com/ |

| | | | |

| | + | An account has been created using my gmail account |

| | | | |

| − | <br />

| |

| | | | |

| − | ==AWS==

| + | * '''Elastic Compute Service''': |

| − | Overview of Amazon Web Services: https://d1.awsstatic.com/whitepapers/aws-overview.pdf

| + | : Pricing: https://www.alibabacloud.com/en/product/ecs?_p_lc=1&spm=5176.ecsnewbuy.0.0.45d11594Q1r4Au&tabIndex=2#pricingFrame |

| − | | |

| − | | |

| − | <br />

| |

| − | ===AWS Academy===

| |

| − | [[:File:AWS_Academy_on_Vocareum_and_VitalSource_Bookshelf.pdf]]

| |

| − | | |

| − | AWS Academy uses three learning platforms to provide you with access to your learning resources. This guide shows you how to register on each platform and access the learning resources. There are three steps to registering on your class:

| |

| − | *Register in the AWS Training & Certification Portal

| |

| − | *Complete registration in Vocareum

| |

| − | *Complete registration in VitalSource Bookshelf

| |

| − | | |

| − | | |

| − | | |

| − | *'''AWS Training & Certification Portal'''

| |

| − | ::The AWS Training & Certification Portal is our Learning Management System. It hosts videos, eLearning modules, and knowledge assessments:

| |

| − | :: '''Resources:'''

| |

| − | ::*Video introductions

| |

| − | ::*Video console demos

| |

| − | ::*Narrated lectures

| |

| − | ::*Knowledge checks

| |

| − | | |

| − | | |

| − | ::*Registering on Your Class:

| |

| − | :::* This is the general link: https://www.aws.training/SignIn

| |

| − | :::* We can create an account normally. I think it does not require any special authorization.

| |

| − | :::* However, your educator could provide you with a registration link. This is the link provided in the course:

| |

| − | :::: https://www.aws.training/SignIn?returnUrl=%2fUserPreferences%2fRegistration%3ftoken%3d4WQW9dOyY0uFHfKBMIhwSA2-JMU1JT1RLCVP3%26returnUrl%3d%252Foneclickregistration%253Fid%253D35854%2526returnUrl%253D%25252Foneclickregistration%25253Fid%25253D39997

| |

| − | | |

| − | | |

| − | *'''Vocareum:'''

| |

| − | : General link: https://labs.vocareum.com/home/login.php

| |

| − | : Link provided for the course: https://labs.vocareum.com/home/login.php?code=&e=You%20are%20not%20logged%20in.%20Please%20login

| |

| − | : Your educator could provide you with a registration link:

| |

| − | | |

| − | :: '''Resources:'''

| |

| − | ::*Lab exercises

| |

| − | ::*Projects

| |

| − | ::*Activities

| |

| − | | |

| − | | |

| − | *'''VitalSource BookShelf:'''

| |

| − | : https://bookshelf.vitalsource.com/

| |

| − | : We can create a VitalSource account normally. I think it does not require any special authorization, although the guide says "Your educator will register you in VitalSource Bookshelf"

| |

| − | | |

| − | :: '''Resources:'''

| |

| − | ::*Student materials

| |

| − | | |

| − | | |

| − | <br />

| |

| − | | |

| − | ===AWS Global Infrastructure===

| |

| − | AWS Global infrastructure can be broken down into three elements:

| |

| − | | |

| − | *Regions

| |

| − | *Availability zones

| |

| − | *Edge Locations

| |

| − | | |

| − | | |

| − | [[File:AWS-Global infrastructure.png|center|thumb|830x830px]]

| |

| − | | |

| − | [[File:AWS Region.png|thumb|507x507px]]

| |

| − | | |

| − | | |

| − | *'''AWS Regions:'''

| |

| − | | |

| − | ::An AWS Region is a geographical area. Each Region is made up of two or more '''Availability Zones'''.

| |

| − | ::AWS has 18 Regions worldwide.

| |

| − | ::You enable and control '''data replication''' across Regions.

| |

| − | ::Communication between Regions uses '''AWS backbone network''' connections infrastructure.

| |

| − | | |

| − | | |

| − | *'''AWS Availability Zones:'''

| |

| − | | |

| − | ::Each Availability Zone is:

| |

| − | :::Made up of '''one or more data centers'''.

| |

| − | :::Designed for '''fault isolation'''.

| |

| − | :::Interconnected with other Availability Zones using '''high-speed private links'''.

| |

| − | | |

| − | ::You choose your availability zones. AWS recommends replicating across Availability Zones for resiliency.

| |

| − | | |

| − | | |

| − | *'''AWS Edge Locations:'''

| |

| − | | |

| − | ::An Edge Location is where users access AWS services.

| |

| − | ::It is a global network of 114 points of presence (103 Edge Locations and 11 regional Edge Caches) in 56 cities across 24 countries.

| |

| − | ::Specifically used with '''Amazon CloudFront''', a Global Content Delivery Network (CDN), to deliver content to end users with reduced latency.

| |

| − | ::'''Regional edge caches''' used for content with infrequent access.

| |

| − | | |

| − | | |

| − | [[File:AWS Regions and number of Availability Zones.png|thumb|center|653x653px]]

| |

| − | [[File:Edge locations-Regional edge caches.png|thumb|center|653x653px]]

| |

| − | | |

| − | | |

| − | *'''AWS Infrastructure Features:'''

| |

| − | | |

| − | :*'''Elastic and Scalable:'''

| |

| − | :::Elastic infrastructure; dynamic adaption of capacity.

| |

| − | :::Scalable infrastructure; adapts to accommodate growth.

| |

| − | | |

| − | :*'''Fault-tolerant:'''

| |

| − | :::Continues operating properly in the presence of a failure.

| |

| − | :::Built-in redundancy of components.

| |

| − | | |

| − | :*'''High availability:'''

| |

| − | :::High level of operational performance

| |

| − | :::Minimized downtime

| |

| − | :::No human intervention

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===AWS Core Services===

| |

| − | AWS Foundational/Core Services

| |

| − | | |

| − | https://www.slideshare.net/AmazonWebServices/aws-core-services-overview-immersion-day-huntsville-2019

| |

| − | | |

| − | | |

| − | AWS has many services, but some of them provide the foundation for all solutions; we refer to those as the '''Core Services'''.

| |

| − | | |

| − | | |

| − | [[File:AWS-Foundation services.png|center|thumb|830x830px]]

| |

| − | | |

| − | | |

| − | [[File:AWS-Foundation services-Services and Categories.png|center|thumb|836x836px]]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Compute====

| |

| − | | |

| − | :*'''Amazon Elastic Compute Cloud (EC2)'''

| |

| − | ::*EC2 is essentially a computer in the cloud. EC2 is a Web Service that provides compute capacity in the cloud (Virtual computing environment in the cloud).

| |

| − | ::*EC2 allows you to create and configure virtual compute capacity.

| |

| − | ::*'''Elastic''' refers to the fact that, if properly configured, you can increase or decrease the number of servers required by an application automatically, according to the current demands on that application.

| |

| − | ::*'''Compute''' refers to the compute, or '''server''' resources that are being presented. | |

| − | | |

| − | | |

| − | :*'''AWS Lambda:''' | |

| − | ::*AWS Lambda is an event-driven, serverless computing platform. It is a computing service that runs code in response to events and automatically manages the computing resources required by that code.

| |

| − | ::*AWS Lambda lets you run code without provisioning or managing servers. You pay only for the compute time you consume - there is no charge when your code is not running.

| |

| − | ::*The purpose of Lambda, as compared to AWS EC2, is to simplify building smaller, on-demand applications that are responsive to events and new information. AWS targets starting a Lambda instance within milliseconds of an event. Node.js, Python, Java, Go, Ruby, and C# (through .NET Core) were all officially supported as of 2018. In late 2018, custom runtime support was added to AWS Lambda, giving developers the ability to run a Lambda function in the language of their choice.
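To make the event-driven model concrete, here is a minimal sketch of a Python Lambda function. The `lambda_handler(event, context)` signature is the conventional entry point for the Python runtime; the `name` field in the event and the response shape are assumptions chosen for illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda invokes once per event."""
    # "name" is a hypothetical field; real events depend on the trigger.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-written event; no AWS resources are involved.
    print(lambda_handler({"name": "cloud"}, None))
```

There is no server setup in the code: the function only describes what to do with one event, and the platform decides when and where to run it.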

| |

| − | | |

| − | | |

| − | :*'''Auto Scaling:'''

| |

| − | ::*Scales EC2 capacity as needed

| |

| − | ::*Improves availability

| |

| − | | |

| − | | |

| − | :*'''Elastic Load Balancer:'''

| |

| − | ::*Elastic Load Balancing automatically distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, IP addresses, and Lambda functions. It can handle the varying load of your application traffic in a single Availability Zone or across multiple Availability Zones.
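The idea of distributing traffic across multiple targets can be illustrated with a toy round-robin model. This is a sketch of the general technique only, not Elastic Load Balancing's actual algorithm; the target dictionaries and the `distribute` function are hypothetical.

```python
from itertools import cycle


def distribute(requests, targets):
    """Assign each request to a healthy target in round-robin order."""
    healthy = [t["id"] for t in targets if t["healthy"]]
    if not healthy:
        raise RuntimeError("no healthy targets available")
    rotation = cycle(healthy)
    return {request: next(rotation) for request in requests}


if __name__ == "__main__":
    # Two Availability Zones; one instance is failing its health check.
    targets = [
        {"id": "i-a (zone 1)", "healthy": True},
        {"id": "i-b (zone 1)", "healthy": False},
        {"id": "i-c (zone 2)", "healthy": True},
    ]
    for request, target in distribute(["r1", "r2", "r3", "r4"], targets).items():
        print(request, "->", target)
```

Skipping unhealthy targets is what lets the application keep serving traffic when an instance, or a whole Availability Zone, becomes unavailable.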

| |

| − | | |

| − | | |

| − | :*'''AWS Elastic Beanstalk:'''

| |

| − | ::*AWS Elastic Beanstalk is an easy-to-use service for deploying and scaling web applications and services developed with Java, .NET, PHP, Node.js, Python, Ruby, Go, and Docker on familiar servers such as Apache, Nginx, Passenger, and IIS.

| |

| − | | |

| − | :::You can simply upload your code and Elastic Beanstalk automatically handles the deployment, from capacity provisioning, load balancing, auto-scaling to application health monitoring.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Storage====

| |

| − | | |

| − | :'''There are three broad categories of storage:'''

| |

| − | ::*'''Instance storage:''' Temporary storage that is added to your Amazon EC2 instance

| |

| − | | |

| − | | |

| − | ::*'''Amazon Elastic Block Store (Amazon EBS):''' It is persistent, mountable storage, which can be mounted as a device to an Amazon EC2 instance. Amazon EBS can only be mounted to an Amazon EC2 instance within the same Availability zone.

| |

| − | | |

| − | :::Each Amazon EBS volume is automatically replicated within its Availability Zone to protect you from component failure, offering high availability.

| |

| − | | |

| − | :::* '''Uses:'''

| |

| − | ::::*Boot volumes and storage for Amazon EC2 instances

| |

| − | ::::*Data Storage with a file system

| |

| − | ::::*Database hosts

| |

| − | ::::*Enterprise applications.

| |

| − | | |

| − | :::* '''Volume types:'''

| |

| − | ::::*Solid-State Drives (SSD)

| |

| − | ::::*Hard Disk Drives (HDD)

| |

| − | | |

| − | | |

| − | ::*'''Amazon Simple Storage Service (Amazon S3):''' Like Amazon EBS, it is persistent storage; however, it can be accessed from anywhere.

| |

| − | :::* Object level storage

| |

| − | :::* Amazon S3 can even be configured to support cross-region replication, so that data put into an Amazon S3 bucket in one Region is automatically replicated to a bucket in another Region.

| |

| − | | |

| − | :::* '''Uses:'''

| |

| − | ::::* Storing application assets

| |

| − | ::::* Static web hosting

| |

| − | ::::* Backup and Disaster Recovery (DR)

| |

| − | ::::* Staging area for big data

| |

| − | | |

| − | | |

| − | | |

| − | :'''Other storage options:'''

| |

| − | | |

| − | ::*'''Amazon Elastic File System (Amazon EFS)'''

| |

| − | ::: Provides simple, scalable, elastic file storage for use with AWS services and on-premises resources. It is easy to use and offers a simple interface that allows you to create and configure file systems quickly and easily.

| |

| − | ::: Amazon EFS is built to scale elastically on demand without disrupting applications, growing and shrinking automatically as you add and remove files, so your applications have the storage they need, when they need it.

| |

| − | | |

| − | | |

| − | ::*'''Amazon Glacier:'''

| |

| − | :::* Amazon Glacier is a secure, durable, and extremely low-cost cloud storage service for data archiving and long-term backup.

| |

| − | :::* Data stored in Amazon Glacier takes several hours to retrieve, which is why it's ideal for archiving.

| |

| − | | |

| − | | |

| − | ::*Amazon Relational Database Service (RDS)

| |

| − | | |

| − | | |

| − | ::*Amazon DynamoDB

| |

| − | | |

| − | | |

| − | ::*Amazon Redshift

| |

| − | | |

| − | | |

| − | ::*Amazon Aurora

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====Block vs Object Storage=====

| |

| − | What if you want to change one character in a 1 GB file?:

| |

| − | | |

| − | *With block storage, you only need to change the block that contains the character.

| |

| − | *With object storage, the entire file must be updated.

| |

| − | | |

| − | This difference has a major impact on the throughput, latency, and cost of your storage solution. Block storage solutions are typically faster and use less bandwidth, but cost more than object-level storage.

| |

| − | [[File:Block vs Object Storage.png|center|thumb|675x675px]]
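The one-character example can be put into numbers. The sketch below counts how many bytes must be rewritten in each model when a single byte of a 1 GB file changes. The 4096-byte block size is an illustrative assumption, and both functions are hypothetical helpers rather than any storage API.

```python
BLOCK_SIZE = 4096  # bytes per block; an illustrative value, real volumes vary


def bytes_rewritten_block(file_size, offset, block_size=BLOCK_SIZE):
    """Block storage: only the block containing the changed byte is rewritten."""
    if not 0 <= offset < file_size:
        raise ValueError("offset outside the file")
    block_start = (offset // block_size) * block_size
    # The last block of a file may be shorter than a full block.
    return min(block_size, file_size - block_start)


def bytes_rewritten_object(file_size, offset):
    """Object storage: the whole object is uploaded again."""
    if not 0 <= offset < file_size:
        raise ValueError("offset outside the file")
    return file_size


if __name__ == "__main__":
    one_gb = 1024 ** 3
    print(bytes_rewritten_block(one_gb, offset=123_456))   # 4096
    print(bytes_rewritten_object(one_gb, offset=123_456))  # 1073741824
```

Under these assumptions the object store moves 262,144 times more data for the same one-byte change, which is where the bandwidth and latency difference comes from.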

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===Analytics===

| |

| − | * '''Amazon Athena''' is an interactive query service that makes it easy to analyze data in Amazon S3 using standard SQL.

| |

| − | | |

| − | | |

| − | * '''Amazon EMR''' provides a managed Hadoop framework that makes it easy, fast, and cost-effective to process vast amounts of data across dynamically scalable Amazon EC2 instances.

| |

| − | | |

| − | | |

| − | * '''Amazon CloudSearch''' is a managed service in the AWS Cloud that makes it simple and cost-effective to set up, manage, and scale a search solution for your website or application. Amazon CloudSearch supports 34 languages and popular search features such as highlighting, autocomplete, and geospatial search.

| |

| − | | |

| − | | |

| − | * '''Amazon Elasticsearch Service''' makes it easy to deploy, secure, operate, and scale Elasticsearch to search, analyze, and visualize data in real-time. With Amazon Elasticsearch Service, you get easy-to-use APIs and real-time

| |

| − | | |

| − | | |

| − | * '''Amazon Kinesis''' makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information.

| |

| − | | |

| − | | |

| − | * '''Amazon Redshift''' is a fast, scalable data warehouse that makes it simple and cost-effective to analyze all your data across your data warehouse and data lake.

| |

| − | | |

| − | | |

| − | * '''Amazon QuickSight''' is a fast, cloud-powered business intelligence (BI) service that makes it easy for you to deliver insights to everyone in your organization. QuickSight lets you create and publish interactive dashboards that can be accessed from browsers or mobile devices.

| |

| − | | |

| − | | |

| − | * '''AWS Data Pipeline''' is a web service that helps you reliably process and move data between different AWS compute and storage services, as well as on-premises data sources, at specified intervals.

| |

| − | | |

| − | | |

| − | * '''AWS Glue''' is a fully managed extract, transform, and load (ETL) service that makes it easy for customers to prepare and load their data for analytics.

| |

| − | | |

| − | | |

| − | * '''AWS Lake Formation''' is a service that makes it easy to set up a secure data lake in days. A data lake is a centralized, curated, and secured repository that stores all your data, both in its original form and prepared for analysis.

| |

| − | | |

| − | | |

| − | '''Why should I use Amazon VPC:'''

| |

| − |

| |

| − | Amazon VPC enables you to build a virtual network in the AWS cloud - no VPNs, hardware, or physical datacenters required. You can define your own network space, and control how your network and the Amazon EC2 resources inside your network are exposed to the Internet. You can also leverage the enhanced security options in Amazon VPC to provide more granular access to and from the Amazon EC2 instances in your virtual network.

| |

| − | | |

| − | <br />

| |

| − | ===Important concepts===

| |

| − | | |

| − | <br />

| |

| − | ====Virtual Private Cloud - VPC====

| |

| − | https://aws.amazon.com/vpc/faqs/ | |

| − | | |

| − | https://docs.aws.amazon.com/vpc/latest/userguide/what-is-amazon-vpc.html

| |

| − | | |

| − | | |

| − | Amazon Virtual Private Cloud (Amazon VPC) is an AWS service (we could call it a framework) that lets you provision a logically isolated section of the AWS Cloud ('''a virtual network''') where you can launch AWS resources.

| |

| − | | |

| − | You have complete control over your virtual networking environment, including selection of your own IP address ranges, creation of subnets, and configuration of route tables and network gateways.

| |

| − | | |

| − | | |

| − | * '''Components of Amazon VPC:'''

| |

| − | | |

| − | :* '''Subnet:''' A segment of a VPC's IP address range where you can place groups of isolated resources.

| |

| − | | |

| − | :* '''Internet Gateway:''' The VPC side of a connection to the public Internet.

| |

| − |

| |

| − | :* '''NAT Gateway:''' A highly available, Network Address Translation (NAT) service for your resources in a subnet to access the Internet.

| |

| − |

| |

| − | :* '''Virtual private gateway:''' The VPC side of a VPN connection.

| |

| − |

| |

| − | :* '''Peering Connection:''' A peering connection enables you to route traffic via private IP addresses between two peered VPCs.

| |

| − |

| |

| − | :* '''VPC Endpoints:''' Enables private connectivity to services hosted in AWS, from within your VPC without using an Internet Gateway, VPN, Network Address Translation (NAT) devices, or firewall proxies.

| |

| − |

| |

| − | :* '''Egress-only Internet Gateway:''' A stateful gateway to provide egress only access for IPv6 traffic from the VPC to the Internet.

| |

| − | | |

| − | | |

| − | * '''The advantage of the VPC:'''

| |

| − | : In a VPC you can control how your network and the Amazon EC2 resources inside your network are exposed to the Internet. This way, you can take advantage of the enhanced security options in Amazon VPC to provide more granular access to and from Amazon EC2 instances in your virtual network.

| |

| − | | |

| − | | |

| − | : Some of the security options you can take advantage of are:

| |

| − | :* ...

| |

| − | :* ...

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Network ACLs====

| |

| − | https://docs.amazonaws.cn/en_us/vpc/latest/userguide/vpc-network-acls.html

| |

| − | | |

| − | A network access control list (ACL) is an optional layer of security for your VPC that acts as a firewall for controlling traffic in and out of one or more subnets.

| |

| − | | |

| − | For example, we can define an ACL rule that allows inbound traffic only when it matches these fields:

| |

| − | '''Type:''' HTTPS

| |

| − | '''Protocol:''' TCP

| |

| − | '''Port Range:''' 443

| |

| − | '''Source:''' 0.0.0.0/0 (any source)

| |

| − | '''Allow/Deny:''' Allow

| |

| − | '''Comments:''' Allows inbound HTTPS traffic from any IPv4 address.

| |

| − | | |

| − | All other traffic will be denied. For example, HTTP traffic (TCP, port 80) OR SSH (TCP port 22) traffic will be denied.
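The behaviour described above can be sketched as a small rule evaluator. Network ACL rules are checked in ascending rule-number order and the first match decides; anything unmatched falls through to the final `*` deny rule. The `Rule` class, the rule number 100, and the sample source address are assumptions made for this illustration, not an AWS API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    number: int     # lower numbers are evaluated first
    protocol: str   # "tcp", "udp", or "all"
    port_from: int
    port_to: int
    source: str     # CIDR block
    allow: bool


def evaluate(rules, protocol, port, source_ip):
    """Return True if the packet is allowed; the lowest-numbered match wins."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.protocol not in ("all", protocol):
            continue
        if not rule.port_from <= port <= rule.port_to:
            continue
        if address not in ipaddress.ip_network(rule.source):
            continue
        return rule.allow
    return False  # the implicit "*" rule: deny anything that matched nothing


if __name__ == "__main__":
    https_only = [Rule(100, "tcp", 443, 443, "0.0.0.0/0", True)]
    print(evaluate(https_only, "tcp", 443, "203.0.113.10"))  # HTTPS: True
    print(evaluate(https_only, "tcp", 80, "203.0.113.10"))   # HTTP: False
    print(evaluate(https_only, "tcp", 22, "203.0.113.10"))   # SSH: False
```

Because evaluation stops at the first match, a low-numbered deny rule can override a broader allow rule that has a higher number.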

| |

| − | | |

| − | | |

| − | | |

| − | * '''Default Network ACL:''' Your VPC automatically comes with a modifiable default network ACL. By default, it allows all inbound and outbound IPv4 traffic and, if applicable, IPv6 traffic.

| |

| − | :: The default network ACL is configured to allow all traffic to flow in and out of the subnets with which it is associated. Each network ACL also includes a rule whose rule number is an asterisk. This rule ensures that if a packet doesn't match any of the other numbered rules, it's denied.

| |

| − | | |

| − | :: The following is an example default network ACL for a VPC that supports IPv4 only:

| |

| − | | |

| − | [[File:Default_AWS_ACL.png|700px|thumb|center|]]

| |

| − | | |

| − | | |

| − | | |

| − | * '''Custom Network ACL:''' The following table shows an example of a custom network ACL for a VPC that supports IPv4 only.

| |

| − | | |

| − | [[File:Custom_AWS_ACL.png|1000px|thumb|center|]]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Security Groups====

| |

| − | | |

| − | | |

| − | Here you can see the Network Access Control List (ACL) associated with the subnet. The rules currently permit ALL Traffic to flow in and out of the subnet, but they can be further restricted by using Security Groups.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | | |

| − | ==AWS Vocareum labs==

| |

| − | https://labs.vocareum.com

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ===ACA Module 3 LAB - Making Your Environment Highly Available===

| |

| − | https://labs.vocareum.com/main/main.php

| |

| − | | |

| − | | |

| − | Critical business systems should be deployed as Highly Available applications, meaning that they can remain operational even when some components fail. To achieve High Availability in AWS, it is recommended to run services across '''multiple Availability Zones.'''

| |

| − | | |

| − | Many AWS services are inherently highly available, such as Load Balancers, or can be configured for high availability, such as deploying Amazon EC2 instances in multiple Availability Zones.

| |

| − | | |

| − | In this lab, you will start with an application running on a single Amazon EC2 instance and will then convert it to be Highly Available.

| |

| − | | |

| − | | |

| − | '''Objectives:'''

| |

| − | | |

| − | After completing this lab, you will be able to:

| |

| − | * Create an image of an existing Amazon EC2 instance and use it to launch new instances.

| |

| − | * Expand an Amazon VPC to additional Availability Zones.

| |

| − | * Create VPC Subnets and Route Tables.

| |

| − | * Create an AWS NAT Gateway.

| |

| − | * Create a Load Balancer.

| |

| − | * Create an Auto Scaling group.

| |

| − | | |

| − | | |

| − | The final product of your lab will be this:

| |

| − | | |

| − | [[File:aws_lab-Making_your_environment_highly_available1.jpg|950px|thumb|center|]]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Task 1 - Inspect Your environment====

| |

| − | This lab begins with an environment already deployed via AWS CloudFormation including:

| |

| − | * An Amazon VPC

| |

| − | * A public subnet and a private subnet in one Availability Zone

| |

| − | * An Internet Gateway associated with the public subnet

| |

| − | * A NAT Gateway in the public subnet

| |

| − | * An Amazon EC2 instance in the public subnet

| |

| − | | |

| − | | |

| − | [[File:aws_lab-Making_your_environment_highly_available2.jpg|950px|thumb|center|]]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====Task 1-1 - Inspect Your VPC=====

| |

| − | * On the AWS Management Console, on the Services menu, click VPC.

| |

| − | : In the left navigation pane, click Your VPCs.

| |

| − | : Here you can see the Lab VPC that has been created for you.

| |

| − | | |

| − | | |

| − | * '''IP range:''' In the IPv4 CIDR column, you can see a value of 10.200.0.0/20, which means this VPC includes 4,096 IPs between 10.200.0.0 and 10.200.15.255 (with some reserved and unusable).

| |

| − | | |

| − | | |

| − | * It is also attached to a Route Table and a Network ACL.

| |

| − | | |

| − | | |

| − | * This VPC also has a Tenancy of default, so instances launched into this VPC will use shared tenancy hardware by default.

| |

| − | | |

| − | | |

| − | | |

| − | * '''Subnets:''' In the navigation pane, click Subnets.

| |

| − | : Here you can see the Public Subnet 1 subnet:

| |

| − | | |

| − | :* In the VPC column, you can see that this subnet exists inside of Lab VPC

| |

| − | | |

| − | | |

| − | :* '''IP range:''' In the IPv4 CIDR column, you can see a value of 10.200.0.0/24, which means this subnet includes the 256 IPs (5 of which are reserved and unusable) between 10.200.0.0 and 10.200.0.255.

| |

| − | | |

| − | | |

| − | :* '''Availability Zone:''' In the Availability Zone column, you can see the Availability Zone in which this subnet resides.

| |

| − | | |

| − | | |

| − | :* Click on the row containing Public Subnet 1 to reveal more details at the bottom of the page.

| |

| − | | |

| − | | |

| − | :* '''Route Table:''' Click the Route Table tab in the lower half of the window.

| |

| − | | |

| − | :: Here you can see details about the Routing for this subnet:

| |

| − | | |

| − | ::* '''Local traffic:''' The first entry specifies that traffic destined within the VPC's CIDR range (10.200.0.0/20) will be routed within the VPC (local).

| |

| − | | |

| − | ::* '''Internet Gateway:''' The second entry specifies that any traffic destined for the Internet (0.0.0.0/0) is routed to the Internet Gateway (igw-). <span style="color:#FF0000">'''This setting makes it a Public Subnet.'''</span>

| |

| − | | |

| − | :::* In the left navigation pane, click Internet Gateways.

| |

| − | :::: Notice that an Internet Gateway is already associated with Lab VPC.

| |

| − | | |

| − | | |

| − | | |

| − | :* '''ACL:''' Click the Network ACL tab in the lower half of the window.

| |

| − | | |

| − | :: Here you can see the Network Access Control List (ACL) associated with the subnet. The rules currently permit ALL Traffic to flow in and out of the subnet, but they can be further restricted by using Security Groups.

| |

| − | | |

| − | | |

| − | | |

| − | :* '''Security Groups:''' In the navigation pane, click Security Groups.

| |

| − | | |

| − | ::* Click Configuration Server SG.

| |

| − | ::: This is the security group used by the Configuration Server.

| |

| − | | |

| − | ::* Click the Inbound Rules tab in the lower half of the window.

| |

| − | ::: Here you can see that this Security Group only allows traffic via SSH (TCP port 22) and HTTP (TCP port 80).

| |

| − | | |

| − | ::* Click the Outbound Rules tab.

| |

| − | ::: Here you can see that this Security Group allows all outbound traffic.
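The CIDR arithmetic quoted in this task can be checked with Python's standard `ipaddress` module. This is a small sketch using the two ranges shown in the console; the constant for the five reserved addresses reflects the figure stated above, and `describe` is a hypothetical helper.

```python
import ipaddress

vpc = ipaddress.ip_network("10.200.0.0/20")
subnet = ipaddress.ip_network("10.200.0.0/24")
AWS_RESERVED_PER_SUBNET = 5  # as noted above, 5 addresses per subnet are unusable


def describe(network):
    """Return (address count, first address, last address) for a network."""
    return (network.num_addresses, str(network[0]), str(network[-1]))


if __name__ == "__main__":
    print(describe(vpc))     # (4096, '10.200.0.0', '10.200.15.255')
    print(describe(subnet))  # (256, '10.200.0.0', '10.200.0.255')
    print(subnet.subnet_of(vpc))  # True: the subnet lies inside the VPC range
    print(subnet.num_addresses - AWS_RESERVED_PER_SUBNET)  # 251 usable addresses
```

A /20 leaves 12 host bits (2^12 = 4,096 addresses) and a /24 leaves 8 (2^8 = 256), which matches the values in the IPv4 CIDR column.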

| |

| − | | |

| − | | |

| − | <br />

| |

| − | | |

| − | =====Task 1-2 - Inspect Your Amazon EC2 Instance=====

| |

| − | In this task, you will inspect the Amazon EC2 instance that was launched for you.

| |

| − | | |

| − | | |

| − | * On the Services menu, click EC2.

| |

| − | | |

| − | | |

| − | * In the left navigation pane, click Instances.

| |

| − | : Here you can see that a Configuration Server is already running. In the Description tab in the lower half of the window, you can see the details of this instance, including:

| |

| − | :* Public and private IP addresses

| |

| − | :* Availability zone

| |

| − | :* VPC

| |

| − | :* Subnet

| |

| − | :* Security Groups.

| |

| − | | |

| − | | |

| − | * In the Actions menu, click Instance Settings > View/Change User Data.

| |

| − | : Note that no User Data appears! This means that the instance has not yet been configured to run your web application. When launching an Amazon EC2 instance, you can provide a User Data script that is executed when the instance first starts and is used to configure the instance. However, in this lab you will configure the instance yourself!

| |

| − | | |

| − | | |

| − | * Click Cancel to close the User Data dialog box.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | | |

| − | ====Task 2 - Login to your Amazon EC2 instance====

| |

| − | * In this lab the «labsuser.pem» file was downloaded in a particular way, because the lab generates the VPC and instances automatically. Normally this file is obtained when the instance is created: we can download it, and it contains the SSH key that authenticates the connection between our computer and the instance.

| |

| − | | |

| − | * Change the permissions on the key to be read only, by running this command:

| |

| − | chmod 400 labsuser.pem

| |

| − | | |

| − | | |

| − | * Then, to connect from the terminal:

| |

| − | ssh -i labsuser.pem ec2-user@<public-ip>
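The permission change matters because `ssh` refuses to use a private key file that other users can read. The sketch below reproduces `chmod 400` from Python and checks the result; it runs against a temporary file, and the helper names are hypothetical. It assumes a POSIX system, where these permission bits are meaningful.

```python
import os
import stat
import tempfile


def make_key_read_only(path):
    """Equivalent of `chmod 400`: the owner may read, nobody may write or execute."""
    os.chmod(path, stat.S_IRUSR)  # 0o400
    return stat.S_IMODE(os.stat(path).st_mode)


def key_is_private(path):
    """True when group and others have no permissions on the key file."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


if __name__ == "__main__":
    # A temporary stand-in for labsuser.pem, so nothing real is modified.
    with tempfile.TemporaryDirectory() as folder:
        key_path = os.path.join(folder, "labsuser.pem")
        with open(key_path, "w") as handle:
            handle.write("placeholder, not a real key\n")
        os.chmod(key_path, 0o644)
        print(key_is_private(key_path))              # readable by others
        print(oct(make_key_read_only(key_path)))     # mode after chmod 400
        print(key_is_private(key_path))
```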

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Task 3 - Install and Launch Your Web Server PHP Application====

| |

| − | sudo yum -y update

| |

| − | | |

| − | | |

| − | This command installs an Apache web server (httpd) and the PHP language interpreter:

| |

| − | sudo yum -y install httpd php

| |

| − | | |

| − | | |

| − | This configures the Apache web server to automatically start when the instance starts:

| |

| − | sudo chkconfig httpd on

| |

| − | | |

| − | | |

| − | This downloads a zip file containing the PHP web application:

| |

| − | wget https://aws-tc-largeobjects.s3-us-west-2.amazonaws.com/CUR-TF-200-ACACAD/studentdownload/phpapp.zip

| |

| − | | |

| − | sudo unzip phpapp.zip -d /var/www/html/

| |

| − | | |

| − | | |

| − | This starts the Apache web server:

| |

| − | sudo service httpd start

| |

| − | | |

| − | | |

| − | Open a new web browser tab, paste the Public IP address for your instance in the address bar and hit Enter. The web application should appear and will display information about your location (actually, the location of your Amazon EC2 instance). This information is obtained from freegeoip.app.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Task 4 - Create an Amazon Machine Image - AMI====

| |

| − | Now that your web application is configured on your instance, you will create an Amazon Machine Image (AMI) of it. An AMI is a copy of the disk volumes attached to an Amazon EC2 instance. When a new instance is launched from an AMI, the disk volumes will contain exactly the same data as the original instance.

| |

| − | | |

| − | This is an excellent way to clone instances to run an application on multiple instances, even across multiple Availability Zones.

| |

| − | | |

| − | In this task, you will create an AMI from your Amazon EC2 instance. You will later use this image to launch additional, fully-configured instances to provide a Highly Available solution.

| |

| − | | |

| − | | |

| − | * Return to the web browser tab showing the EC2 Management Console.

| |

| − | | |

| − | | |

| − | * Ensure that your Configuration Server is selected, and click Actions > Image > Create Image.

| |

| − | : You will see that a Root Volume is currently associated with the instance. This volume will be copied into the AMI.

| |

| − | | |

| − | | |

| − | * For Image name, type: Web application

| |

| − | | |

| − | | |

| − | * Leave other values at their default settings and click Create Image.

| |

| − | | |

| − | | |

| − | * Click Close.

| |

| − | : The AMI will be created in the background and you will use it in a later step. There is no need to wait while it is being created.

| |

| − | | |

| − | | |

| − | <br />

| |

| − | ====Task 5 - Configure a Second Availability Zone====

| |

| − | To build a highly available application, it is a best practice to launch resources in multiple Availability Zones. Availability Zones are physically separate data centers (or groups of data centers) within the same Region. Running your applications across multiple Availability Zones will provide greater availability in case of failure within a data center.

| |

| − | | |

| − | | |

| − | In this task, you will duplicate your network environment into a second Availability Zone. You will create:

| |

| − | * A second public subnet

| |

| − | * A second private subnet

| |

| − | * A second NAT Gateway

| |

| − | * A second private Route Table

| |

| − | | |

| − | | |

| − | [[File:aws_lab-Making_your_environment_highly_available3.jpg|950px|thumb|center|]]

| |

| − | | |

| − | | |

| − | <br />

| |

| − | =====Task 5-1 - Create a second Public Subnet=====

| |

| − | * On the Services menu, click VPC.

| |

| − | | |

| − | * In the left navigation pane, click Subnets.

| |

| − | | |

| − | * In the row for Public Subnet 1, take note of the value for Availability Zone. (You might need to scroll sideways to see it.)

| |

| − | : Note: The name of an Availability Zone consists of the Region name (e.g. us-west-2) plus a zone identifier (e.g. a). Together, they give this Availability Zone the name us-west-2a.
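The naming rule in the note above can be illustrated with a minimal Python sketch. The function name `split_availability_zone` is hypothetical, and the sketch assumes the standard form described in the note (Region name followed by a single-letter zone identifier); other zone naming schemes are not handled.

```python
def split_availability_zone(az_name: str) -> tuple:
    """Split an Availability Zone name into (region, zone identifier).

    Assumption: the name is a Region name followed by exactly one
    letter, as in the note above (for example 'us-west-2a').
    """
    if len(az_name) < 2 or not az_name[-1].isalpha():
        raise ValueError(f"unexpected Availability Zone name: {az_name!r}")
    return az_name[:-1], az_name[-1]


region, zone = split_availability_zone("us-west-2a")
print(region, zone)  # us-west-2 a
```

When choosing the zone for the second subnet, the Region part stays the same and only the zone identifier changes.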

| |

| − | | |

| − | | |

| − | * Click Create Subnet. In the Create Subnet dialog box, configure the following:

| |

| − | :* Name tag: <code> Public Subnet 2 </code>

| |

| − | :* VPC: Lab VPC

| |

| − | :* Availability Zone: Choose a '''different Availability Zone''' from the existing Subnet (for example, if it was a, then choose b).

| |

| − | :* IPv4 CIDR block: <code> 10.200.1.0/24 </code>

| |

| − | : This will create a second Subnet in a different Availability Zone, but still within '''Lab VPC'''. It will have an IP range between 10.200.1.0 and 10.200.1.255.
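The address range stated above can be checked with Python's standard `ipaddress` module. This is only a sketch for verifying the arithmetic of the CIDR block. The count of usable addresses relies on the assumption that AWS reserves five addresses in every subnet (the first four and the last one).

```python
import ipaddress

# CIDR block used for Public Subnet 2 in this lab
subnet = ipaddress.ip_network("10.200.1.0/24")

first_address = subnet[0]    # lowest address in the block
last_address = subnet[-1]    # highest address in the block
total = subnet.num_addresses

# Assumption: AWS reserves 5 addresses per subnet
# (the first four and the last one), so fewer are assignable.
AWS_RESERVED_PER_SUBNET = 5
usable = total - AWS_RESERVED_PER_SUBNET

print(first_address, last_address, total, usable)
# 10.200.1.0 10.200.1.255 256 251
```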

| |

| − | | |

| − | * Click Create.

| |

| − | | |

| − | | |

| − | * Copy the Subnet ID to a text editor for later use, then click Close.

| |

| − | : It should look similar to: subnet-abcd1234

| |

| − | | |

| − | | |

| − | * '''Edit route table association:'''

| |

| − | | |

| − | :* With '''Public Subnet 2''' selected, click the '''Route Table''' tab in the lower half of the window. (Do not click the Route Tables link in the left navigation pane.)

| |

| − | : Here you can see that your new Subnet has been provided with a default Route Table, but this Route Table does not have a connection to your Internet gateway. You will change it to use the Public Route Table.

| |

| − | | |

| − | :* Click '''Edit route table association'''.

| |

| − | | |

| − | :* Try each '''Route Table ID''' in the list, selecting the one that shows a '''Target''' containing ''igw''.

| |

| − | | |

| − | :* Click Save then click Close.

| |

| − | :: <span style="color:#FF0000">Because we have chosen a Route Table that has a connection to our Internet gateway, Public Subnet 2 is now a Public Subnet that can communicate directly with the Internet.</span>
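The manual step of trying each Route Table ID until one shows a target containing ''igw'' can be sketched as a small filter in Python. This is not part of the lab: the function name and the sample IDs below are hypothetical, and the dictionary layout (`RouteTableId`, `Routes`, `GatewayId`) is an assumption modelled on the structure that the EC2 API returns when describing route tables.

```python
def find_public_route_tables(route_tables):
    """Return the IDs of route tables that have a route to an Internet gateway.

    Assumption: each table is a dict with 'RouteTableId' and a list of
    'Routes', and a route to an Internet gateway has a 'GatewayId'
    starting with 'igw-'.
    """
    public_ids = []
    for table in route_tables:
        for route in table.get("Routes", []):
            if str(route.get("GatewayId", "")).startswith("igw-"):
                public_ids.append(table["RouteTableId"])
                break  # one matching route is enough for this table
    return public_ids


# Hypothetical sample data; real IDs will differ.
sample_tables = [
    {"RouteTableId": "rtb-11111111",
     "Routes": [{"DestinationCidrBlock": "10.200.0.0/20", "GatewayId": "local"}]},
    {"RouteTableId": "rtb-22222222",
     "Routes": [{"DestinationCidrBlock": "10.200.0.0/20", "GatewayId": "local"},
                {"DestinationCidrBlock": "0.0.0.0/0", "GatewayId": "igw-abcd1234"}]},
    {"RouteTableId": "rtb-33333333",
     "Routes": [{"DestinationCidrBlock": "0.0.0.0/0", "NatGatewayId": "nat-abcd1234"}]},
]

print(find_public_route_tables(sample_tables))  # ['rtb-22222222']
```

A table whose default route points to a NAT Gateway, like the third sample entry, is not selected, which matches the distinction between public and private Route Tables in this lab.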

| |

| − | | |

| − | | |

| − | <br />

| |

| − | | |

| − | =====Task 5-2 - Create a Second Private Subnet=====

| |