Django

Latest revision as of 21:39, 1 June 2023

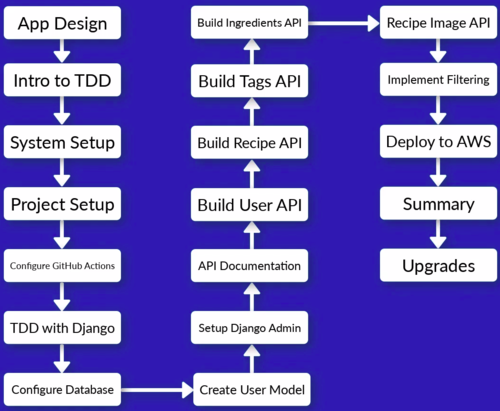

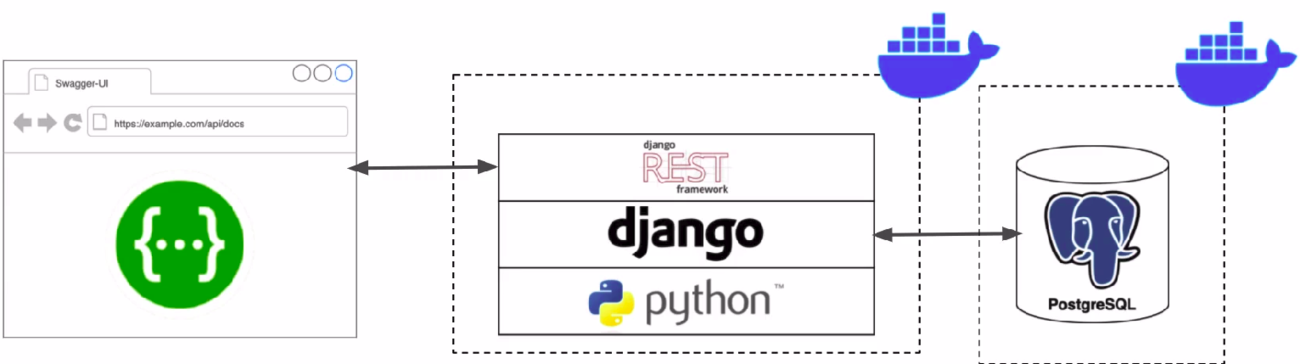

This page is about the Udemy course: Build a Backend REST API with Python Django - Advanced

In this course we build a Recipe REST API

https://www.udemy.com/course/django-python-advanced/

https://www.djangoproject.com/

Technologies used in this course:

API Features:

Structure of the project:

Contents

- 1 Docker

- 2 Docker on GitHub Actions

- 3 Unit Tests and Test-driven development (TDD)

- 4 Some VS configurations

- 5 Setting up the development environment

- 5.1 Creating a Github repository and clone it to your local machine

- 5.2 Setting up the DockerHub account along with GitHub Actions

- 5.3 Installing required libraries

- 5.4 Creating a Docker Container

- 5.5 Creating a Django project and testing Linting through Docker Compose

- 5.6 Setting up Unit Testing

- 5.7 Running our development server through Docker Compose

- 5.8 Configuring GitHub Actions to run automatic task whenever we modify our project

- 5.9 Test driven development with Django (TDD)

- 5.10 Configuring the DB

- 5.11 Setting up DB migration through docker-compose

- 6 API Documentation

- 7 Django user model - Django authentication system - Django admin system

- 8 Building the user API

- 9 Deployment

- 9.1 Overview

- 9.2 Adding the new WSGI server to the project

- 9.3 Adding the reverse proxy configuration

- 9.4 Creating the proxy Dockerfile

- 9.5 Handling configurations

- 9.6 Creating an AWS account and user

- 9.7 Uploading our SSH public key to AWS and creating an EC2 instance

- 9.8 Setting up a deploy SSH key on

- 9.9 Install dependencies on our server

- 9.10 Clone our code to the server and configure the project

- 9.11 Running the service

Docker

Some benefits of using Docker: see Docker#Why use Docker

Drawbacks of using Docker in this project (not sure if these limitations are still in place):

- VSCode will be unable to access the Python interpreter.

- It is more difficult to use integrated features, such as the interactive debugger and the linting tools.

- There is a tutorial from the same instructor about how to configure VSCode to work with Docker: https://londonappdeveloper.com/debugging-a-dockerized-django-app-with-vscode/

- However, I think this tutorial is old. There should already be VSCode extensions that solve the problem more easily.

- Check this extension: https://code.visualstudio.com/docs/devcontainers/tutorial

This is a related question someone asked in the course about the integration between Docker and VSCode:

Chanin

- Import "rest_framework" could not be resolved

Mark - Instructor

- Hey Chanin,

- This is because we've installed the Python dependencies inside Docker, so VSCode isn't able to access the Python interpreter we are using.

- There are a few ways round this:

- * 1. Disable the Pylance extension to avoid seeing any errors - this is my preferred approach, because configuring VSCode to access the Python interpreter in Docker can be tedious. I like to disable the integrated features entirely and do the linting and testing manually through the command line.

- * 2. Configure VSCode to work with Docker - this is quite a complex approach, but I have a guide on setting up a Django project for this here: https://londonappdeveloper.com/debugging-a-dockerized-django-app-with-vscode/

- Hope this helps.

Ashley

- A follow up on this. What would be an advantage/disadvantage to just in this particular project running "pip install djangorestframework" ?

Mark - Instructor:

- The advantage would be that you get the linting tools to work. The disadvantage is that you need to install the dependency locally and it might conflict with other Python packages.

- You could always setup a virtual environment locally if you wanted.

How Docker will be used in this project

- Create a Dockerfile: This is the file that contains all the OS-level dependencies that our project needs. It is just a list of steps that Docker uses to create an image for our project:

- First we choose a base image, which is the Python base image provided for free on DockerHub.

- Install dependencies: OS-level dependencies.

- Set up users: Linux users needed to run the application.

- Create a Docker Compose configuration, docker-compose.yml: It tells Docker how to run the images that are created from our Dockerfile configuration.

- We need to define our "Services":

- Name (we are going to be using the name app)

- Port mappings

- Then we can run all commands via Docker Compose. For example:

docker-compose run --rm app sh -c "python manage.py collectstatic"

- docker-compose: runs a Docker Compose command.

- run: will start a specific container defined in the config.

- --rm: This is optional. It tells Docker Compose to remove the container once it finishes running.

- app: This is the name of the app/service we defined.

- sh -c: passes in a shell command.
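To make the breakdown above concrete, here is a small Python sketch (an illustration added to these notes, not something from the course) that uses the standard-library shlex module to split the example command the way a POSIX shell would. The helper name split_command is made up for this example. It shows that everything inside the quotes reaches sh -c as one single argument.

```python
import shlex

# The example command from above, exactly as typed in the terminal.
COMMAND = 'docker-compose run --rm app sh -c "python manage.py collectstatic"'


def split_command(command):
    """Split a command line into arguments the way a POSIX shell would."""
    return shlex.split(command)


parts = split_command(COMMAND)

# Print each argument with its position so the structure is visible:
# program, subcommand, option, service name, then the command run in the container.
for index, part in enumerate(parts):
    print(index, repr(part))
```

The last element is the whole quoted string, which is why several commands can be chained inside the quotes with && and still be run inside the container.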

Docker Hub

Docker Hub is a cloud-based registry service that allows developers to store, share, and manage Docker images. It is a central repository of Docker images that can be accessed from anywhere in the world, making it easy to distribute and deploy containerized applications.

Using Docker Hub, you can upload your Docker images to a central repository, making it easy to share them with other developers or deploy them to production environments. Docker Hub also provides a search function that allows you to search for images created by other developers, which can be a useful starting point for building your own Docker images.

Docker Hub supports both automated and manual image builds. With automated builds, you can connect your GitHub (using Docker on GitHub Actions) or Bitbucket repository to Docker Hub and configure it to automatically build and push Docker images whenever you push changes to your code. This can help streamline your CI/CD pipeline and ensure that your Docker images are always up-to-date.

Docker Hub has introduced rate limits:

- 100 pulls per 6 hours for unauthenticated users. On shared runners such as GitHub Actions, this limit is shared with all other users of the same IP address.

- 200 pulls per 6 hours for authenticated users on a free account. This limit is counted only against your own user.

- So we have to authenticate with Docker Hub: create an account, set up credentials, and log in before running the job.

Docker on GitHub Actions

Docker on GitHub Actions is a GitHub feature that allows developers to use Docker containers for building and testing their applications in a continuous integration and delivery (CI/CD) pipeline on GitHub.

With Docker on GitHub Actions, you can define your build and test environments using Dockerfiles and Docker Compose files, and run them in a containerized environment on GitHub's virtual machines. This provides a consistent and reproducible environment for building and testing your applications, regardless of the host operating system or infrastructure.

Docker on GitHub Actions also provides a number of pre-built Docker images and actions that you can use to easily set up your CI/CD pipeline. For example, you can use the "docker/build-push-action" action to build and push Docker images to a container registry, or the "docker-compose" action to run your application in a multi-container environment.

Using Docker on GitHub Actions can help simplify your CI/CD pipeline, improve build times, and reduce the risk of deployment failures due to environmental differences between development and production environments.

Common uses for GitHub Actions are:

- Deployment: This is not covered in this course:

- There is a specific course for it: https://www.udemy.com/course/devops-deployment-automation-terraform-aws-docker/

- This instructor also has some resources about this on YouTube, for example: https://www.youtube.com/watch?v=mScd-Pc_pX0

- Handling code linting (covered in this course)

- Running unit tests (covered in this course)

To configure GitHub Actions, we start by setting a trigger. The available triggers are documented on the GitHub website.

The trigger that we are going to be using is the «Push to GitHub» trigger:

- Whenever we push code to GitHub, it automatically runs some actions:

- Run unit tests: After the job runs there will be an output/result: success/fail...

GitHub Actions is charged per minute. Free accounts get 2,000 free minutes per month.

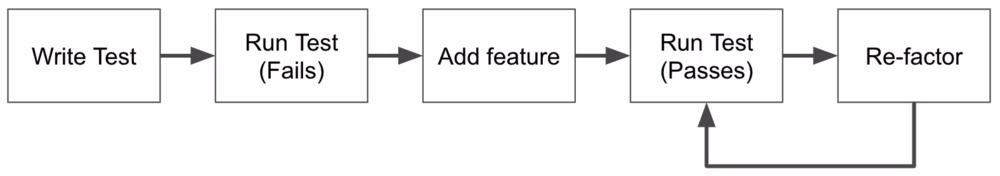

Unit Tests and Test-driven development (TDD)

See explanation at:

- https://www.udemy.com/course/django-python-advanced/learn/lecture/32238668#notes

- https://www.udemy.com/course/django-python-advanced/learn/lecture/32238780#learning-tools

Unit tests: code that tests code. It's usually done this way:

- You set up some conditions, such as inputs to a function

- Then you run a piece of code

- You check the outputs of that code using "assertions"
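The three steps above can be sketched with Python's built-in unittest library, which the Django test framework is built on. The function scale_quantity is a made-up helper used only for illustration; it is not part of the course project.

```python
import unittest


def scale_quantity(quantity, servings, new_servings):
    """Hypothetical helper: scale an ingredient quantity to a new number of servings."""
    if servings <= 0:
        raise ValueError("servings must be positive")
    return quantity * new_servings / servings


class ScaleQuantityTests(unittest.TestCase):
    def test_scale_up(self):
        # 1. Set up the conditions (inputs to the function)
        quantity, servings, new_servings = 200, 2, 4
        # 2. Run the piece of code under test
        scaled = scale_quantity(quantity, servings, new_servings)
        # 3. Check the output using an assertion
        self.assertEqual(scaled, 400)

    def test_invalid_servings(self):
        # An assertion can also check that an error is raised
        with self.assertRaises(ValueError):
            scale_quantity(200, 0, 4)


# Run the tests programmatically and keep the result object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ScaleQuantityTests)
outcome = unittest.TextTestRunner(verbosity=0).run(suite)
```

In a Django project the tests are not run this way; they are discovered and run with python manage.py test, as described later on this page.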

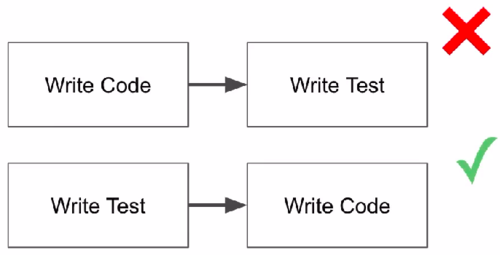

Test-driven development (TDD)

Some VS configurations

Automatically remove trailing whitespace in VS: This ensures that trailing whitespace, which could cause some issues with Python, is removed when you save the file.

- Go to VS Settings and check the option «Files: Trim Trailing Whitespace (When enabled, will trim trailing whitespace when saving a file)»

Setting up the development environment

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238710#overview

Creating a Github repository and clone it to your local machine

Go to https://github.com, create a repository for the project and clone it to your local machine. See GitHub for help:

https://github.com/adeloaleman/django-rest-api-recipe-app

git clone git@github.com:adeloaleman/django-rest-api-recipe-app.git

Then we create the app directory inside our project directory:

mkdir app

Setting up the DockerHub account along with GitHub Actions

Go to DockerHub https://hub.docker.com and log in to your account. Then go to Account settings > Security > New Access Token:

- Access token description: It's good practice to use the name of your GitHub project repository: django-rest-api-recipe-app

- Create and copy the Access token.

- This token will be used by GitHub to get access to your DockerHub account and build the Docker container.

Setting up Docker on GitHub Actions

Go to your project's GitHub repository: https://github.com/adeloaleman/django-rest-api-recipe-app > Settings > Secrets and variables > Actions:

New repository secret: First we add the user:

- Name: DOCKERHUB_USER

- Secret: This must be your DockerHub user. In my case (I don't remember why it is my C.I.): 16407742

- Then we click New repository secret again to add the token:

- Name: DOCKERHUB_TOKEN

- Secret: This must be the DockerHub Access token we created at https://hub.docker.com: ***************

This way, GitHub (through Docker on GitHub Actions) is able to authenticate and get access to DockerHub to build the Docker container.

Installing required libraries

Go to your local project's directory and create requirements.txt:

Django>=3.2.4,<3.3
djangorestframework>=3.12.4,<3.13

This tells pip that we want to install at least version 3.2.4 (which is the latest version at the time of the course) but less than 3.3. This way we make sure that we get the latest 3.2.x version. However, if 3.3 is released, we want to stay with 3.2.x because a minor version bump could introduce significant changes that may cause our code to fail.
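To see which versions a range such as >=3.2.4,<3.3 accepts, here is a simplified Python sketch. It only handles plain dotted numbers and is not how pip itself works (pip follows the full PEP 440 rules, including pre-releases); the function names parse_version and satisfies are made up for this example.

```python
def parse_version(text):
    """Turn '3.2.18' into (3, 2, 18) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.split("."))


def satisfies(version, minimum="3.2.4", below="3.3"):
    """Return True if minimum <= version < below, like 'Django>=3.2.4,<3.3'."""
    return parse_version(minimum) <= parse_version(version) < parse_version(below)


# Try a few candidate versions against the Django range used above.
for candidate in ["3.2.3", "3.2.4", "3.2.18", "3.3", "4.0"]:
    print(candidate, satisfies(candidate))
```

Only the 3.2.x releases from 3.2.4 onwards pass the check, which is the behaviour described above: patch updates are accepted, while the next minor version is not.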

Now we are going to install flake8, which is the linting library we'll be using.

Linting is running a tool that tests our code formatting. It highlights errors, typos, formatting issues, etc.

Create the file requirements.dev.txt:

flake8>=3.9.2,<3.10

The reason why we are creating a new «requirements» file is that we are going to add a custom build argument (DEV=true) to our Docker Compose configuration, so we only install these development requirements when we are building an image for our local development server. We don't need the «flake8» package when we deploy our application. We only need linting for development.

It is good practice to separate development dependencies from the actual project dependencies so you don't introduce unnecessary packages into the image that you will deploy on your deployment server.

Now we need to add the default flake8 configuration file, which is .flake8 and must be placed inside our app directory. We're gonna use it to manage exclusions. We only want to lint the files we write.

app/.flake8:

[flake8]
exclude =
  migrations,
  __pycache__,
  manage.py,
  settings.py

Creating a Docker Container

Go to your local project's directory and create Dockerfile:

FROM python:3.9-alpine3.13
LABEL maintainer="adeloaleman"

ENV PYTHONUNBUFFERED 1

# Copy the requirements files into the Docker image.
# Development requirements are kept separate from deployment requirements (see above).
COPY ./requirements.txt /tmp/requirements.txt
COPY ./requirements.dev.txt /tmp/requirements.dev.txt
COPY ./app /app
# Default directory where our commands are run when we run commands on our Docker image
WORKDIR /app
# Port we are going to access in our container
EXPOSE 8000

# Overridden with DEV=true when the image is built through Docker Compose (see comments below)
ARG DEV=false
# Steps of the RUN command: create a virtual env (some people say it's not needed
# inside a container), upgrade pip, install our requirements, install the development
# requirements only if DEV=true, remove /tmp because it's not needed anymore, and add
# a user because it's good practice not to use the root user.
RUN python -m venv /py && \
    /py/bin/pip install --upgrade pip && \
    /py/bin/pip install -r /tmp/requirements.txt && \
    if [ $DEV = "true" ]; \
        then /py/bin/pip install -r /tmp/requirements.dev.txt ; \
    fi && \
    rm -rf /tmp && \
    adduser \
        --disabled-password \
        --no-create-home \
        django-user

# Avoids having to specify /py/bin/ every time we run a command from our virtual env
ENV PATH="/py/bin:$PATH"

# Finally, we switch the user. The commands above were run as root; commands executed later are run by django-user
USER django-user

Note that a Dockerfile only accepts comments on their own lines, so the explanations are placed above the instructions they describe.

python:3.9-alpine3.13: This is the base image that we're gonna be using. It is pulled from Docker Hub.

- python is the name of the image and 3.9-alpine3.13 is the name of the tag.

- alpine is a light version of Linux. It's ideal for building Docker containers because it doesn't have any unnecessary dependencies, which makes it very light and efficient.

- You can find all the images/tags at https://hub.docker.com

ARG DEV=false: This specifies that, by default, the image is not built for development. It will be overridden in our docker-compose.yml by « - DEV=true ». So when we build the container through our Docker Compose, it will be set to «DEV=true», but by default (without building it through Docker Compose) it is set to «DEV=false».

.dockerignore: We want to exclude any file that Docker doesn't need to be concerned with.

# Git
.git
.gitignore

# Docker
.docker

# Python
# We exclude __pycache__ because it could cause issues: the __pycache__ created on our
# local machine may be specific to our local OS and not to the container OS.
app/__pycache__/
app/*/__pycache__/
app/*/*/__pycache__/
app/*/*/*/__pycache__/
.env/
.venv/
venv/

At this point we can test building our image. For this we run:

docker build .

Now we need to create a docker-compose.yml

Docker Compose is a tool that allows you to define and manage multi-container Docker applications. It provides a way to describe the services, networks, and volumes required for your application in a declarative YAML file.

docker-compose.yml

# Version of the Docker Compose syntax. We specify it in case Docker Compose releases a new version of the syntax
version: "3.9"

# Docker Compose normally consists of one or more services needed for our application
services:
  # Name of the service that is going to run our Dockerfile
  app:
    build:
      # Use the current directory as the build context
      context: .
      args:
        # Overrides the DEV=false defined in our Dockerfile, so we can distinguish
        # between building our development container and the deployment one (see comments above)
        - DEV=true
    # Maps port 8000 on our local machine to port 8000 in our Docker container
    ports:
      - "8000:8000"
    volumes:
      - ./app:/app
    command: >
      sh -c "python manage.py runserver 0.0.0.0:8000"

- ./app:/app: This maps the app directory on our local machine to the app directory of our Docker container. We do this because we want the changes made in our local directory to be reflected in our running container in real time. We don't want to rebuild the container every time we change a line of code.

command: >: This is the command the service runs by default, that is, the command executed when we run «docker-compose up». We can override this command by using «docker-compose run ...», which is something we are going to be doing often.

Then we run in the project directory:

docker-compose build

Creating a Django project and testing Linting through Docker Compose

Now we can create our Django project through our Docker Compose:

docker-compose run --rm app sh -c "django-admin startproject app ."

Because we have already created an app directory, we need to add the «.» at the end so it won't create an extra app directory.

Running flake8 through our Docker Compose:

docker-compose run --rm app sh -c "flake8"

Setting up Unit Testing

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238780#learning-tools

We are going to use the Django test suite for unit testing:

The Django test suite/framework is built on top of the unittest library. Django test adds features such as:

- Test client: This is a dummy web browser that we can use to make requests to our project.

- Simulate authentication

- Test database (for test code that uses the DB): It automatically creates a temporary database and clears the data once each test has finished running, so every test starts with a fresh database. We usually don't want to create test data inside the real database. It is possible to override this behavior so that you have consistent data for all of your tests. However, it's not really recommended unless there's a specific reason to do that.

On top of Django we have the Django REST Framework, which also adds some features:

- API test client, which is like the test client added by Django but specifically for testing API requests: https://www.udemy.com/course/django-python-advanced/learn/lecture/32238810#learning-tools

Where do you put tests?

- When you create a new app in Django, it automatically adds a tests.py module. This is a placeholder where you can add tests.

- Alternatively, you can create a tests/ subdirectory inside your app, which allows you to split your tests up into multiple modules.

- You can only use either the tests.py module or the tests/ directory. You can't use them both.

- If you do create tests inside your tests/ directory, each module must be prefixed with test_.

- Test directories must contain __init__.py. This is what allows Django to pick up the tests.

Mocking:

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238806#learning-tools

Mocking is a technique used in software development to simulate the behavior of real objects or components in a controlled environment for the purpose of testing.

In software testing, it is important to isolate the unit being tested and eliminate dependencies on external systems or services that may not be available or that may behave unpredictably. Mocking helps achieve this by creating a mock or a simulated version of the dependencies that are not available, allowing developers to test their code in isolation.
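As a minimal sketch of the idea, the following Python example replaces an external dependency with a mock from the standard-library unittest.mock module. The function notify_user and the email client are hypothetical; they only stand in for "code that depends on an external service".

```python
from unittest.mock import MagicMock


def notify_user(email_client, address):
    """Hypothetical function under test: sends a welcome email through a client."""
    if "@" not in address:
        return False
    email_client.send(to=address, subject="Welcome")
    return True


# Replace the real email client (an external dependency) with a mock object.
fake_client = MagicMock()

sent = notify_user(fake_client, "user@example.com")

# The mock records how it was called, so we can check the interaction
# without sending a real email.
fake_client.send.assert_called_once_with(to="user@example.com", subject="Welcome")
```

The test never touches a real email service, so it stays fast and predictable, and it still verifies that our code calls the dependency with the expected arguments.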

We're going to implement mocking using the unittest.mock library.

We're going to set up tests for each Django app that we create. We will run the tests through Docker Compose.

Test classes:

- SimpleTestCase: No DB integration.

- TestCase: DB integration.

Running the tests through docker-compose:

docker-compose run --rm app sh -c "python manage.py test"

Running our development server through Docker Compose

docker-compose up

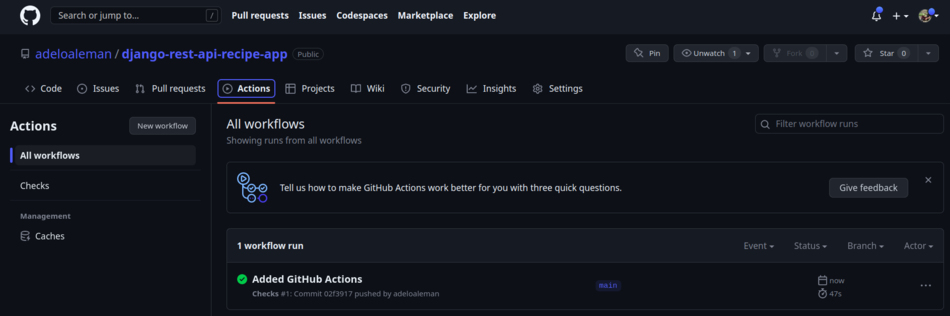

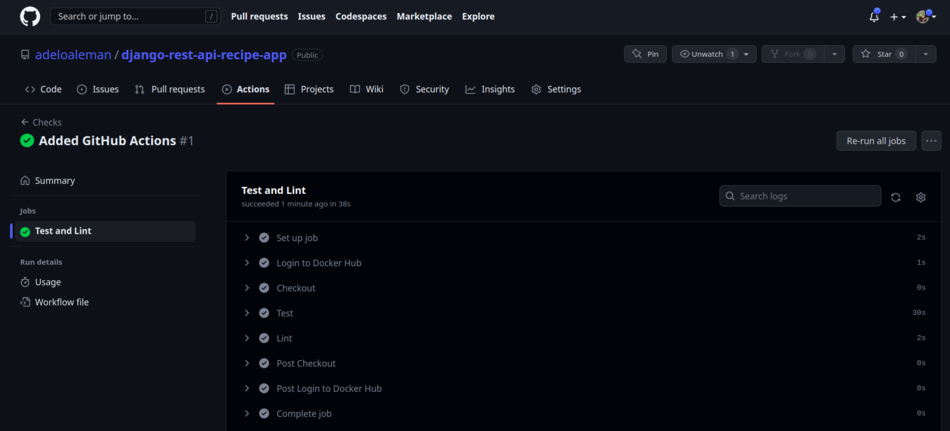

Configuring GitHub Actions to run automatic task whenever we modify our project

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238764#learning-tools

Create a config file at django-rest-api-recipe-app/.github/workflows/checks.yml (checks can be replaced with any name we want):

# The three dashes mark the start of a YAML document
---
name: Checks

# This is the trigger
on: [push]

jobs:
  test-lint:
    name: Test and Lint
    runs-on: ubuntu-20.04
    steps:
      # These are the secrets that we configured above in our GitHub repository
      # using the user and token created in DockerHub
      - name: Login to Docker Hub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USER }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      # This checks out our repository's code into the file system of the
      # GitHub Actions runner. In this case, the ubuntu-20.04 VM
      - name: Checkout
        uses: actions/checkout@v2
      - name: Test
        run: docker-compose run --rm app sh -c "python manage.py test"
      - name: Lint
        run: docker-compose run --rm app sh -c "flake8"

Test the GitHub Actions:

- We go to our GitHub repository: https://github.com/adeloaleman/django-rest-api-recipe-app > Actions:

- We'll see that there are no actions yet on the Actions page.

- On our local machine we commit our code:

git add .
git commit -am "Added GitHub Actions"
git push origin

- Then, if we come back to our GitHub repository > Actions, we'll see the execution of checks.yml:

Test driven development with Django (TDD)

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238804#learning-tools

As we already mentioned, we are going to use Django test framework...

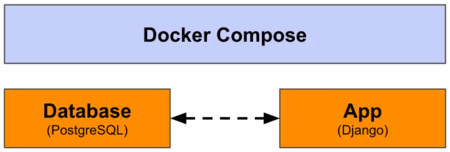

Configuring the DB

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238814#learning-tools

- We're gonna use PostgreSQL

- We're gonna use Docker Compose to configure the DB:

- Defined with project (re-usable): Docker Compose allows us to define the DB configuration inside our project.

- Persistent data using volumes: In our local DB we can persist the data until we want to clear it.

- Maps directory in container to local machine.

- It handles the network configuration.

- It allows us to configure our settings using Environment variable configuration.

- Adding the DB to our Docker Compose:

- Then we make sure the DB configuration is correct by running our server:

- DB configuration in Django:

- Install DB adaptor dependencies: Tools Django uses to connect (Postgres adaptor).

- We're gonna use the psycopg2 library/adaptor.

- Package dependencies for psycopg2:

- List of packages in the official psycopg2 documentation: C compiler / python3-dev / libpq-dev

- Equivalent packages for Alpine: postgresql-client / build-base / postgresql-dev / musl-dev

- Update Dockerfile

- Update requirements.txt

- Then we can rebuild our container:

docker-compose down # This clears the containers (it's not clear to me whether this always has to be done)
docker-compose build

- Configure Django: Tell Django how to connect https://www.udemy.com/course/django-python-advanced/learn/lecture/32238826#learning-tools

- We're gonna pull config values from environment variables

- This allows us to configure DB variables in a single place, and it will work for both development and deployment.

settings.py
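The exact settings used in the course are not reproduced on this page. As a rough sketch of the idea, the database configuration in settings.py can be built from environment variables like this. The variable names DB_HOST, DB_NAME, DB_USER and DB_PASS and the helper database_settings are assumptions made for this example; they must match whatever names are defined in docker-compose.yml.

```python
import os


def database_settings(environ=os.environ):
    """Build a Django-style DATABASES dict from environment variables.

    The variable names are assumptions for this sketch, not taken from the course files.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.postgresql",
            "HOST": environ.get("DB_HOST"),
            "NAME": environ.get("DB_NAME"),
            "USER": environ.get("DB_USER"),
            "PASSWORD": environ.get("DB_PASS"),
        }
    }


# In settings.py this would replace the default SQLite configuration.
DATABASES = database_settings()
```

Because the values come from the environment, the same settings.py works on the development machine and on the deployment server; only the environment variables change.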

- Fixing DB race condition

- depends_on makes sure the service starts, but doesn't ensure the application (in this case, PostgreSQL) is running. This can cause the Django app to try connecting to the DB when the DB hasn't started yet, which would cause our app to crash.

- The solution is to make Django wait for the DB. This is done with a custom Django management command, wait_for_db, that we're gonna create.
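The course implements this as a Django management command, whose code is not reproduced here. The retry idea behind it can be sketched in plain Python as follows. Everything in this example (wait_for, fake_db_check, the use of ConnectionError) is a hypothetical stand-in; the real command would check the actual database connection instead.

```python
import time


def wait_for(check, retries=10, delay=1.0, sleep=time.sleep):
    """Call check() until it succeeds or the retries run out.

    check must raise ConnectionError while the service is not ready.
    Returns the number of attempts that were needed.
    """
    for attempt in range(1, retries + 1):
        try:
            check()
            return attempt
        except ConnectionError:
            if attempt == retries:
                raise  # give up: the service never became available
            sleep(delay)


# Simulated database that only accepts connections on the third attempt.
attempts = {"count": 0}


def fake_db_check():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("database not ready")


needed = wait_for(fake_db_check, delay=0, sleep=lambda seconds: None)
print(f"Database available after {needed} attempts")
```

Passing the sleep function as a parameter keeps the sketch easy to test, because a test can replace it with a function that does not actually wait.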

- Before we can create our wait_for_db command, we need to add a new app to our project. We're gonna call it core:

docker-compose run --rm app sh -c "python manage.py startapp core"

- After the core app is added to our project, we add it to INSTALLED_APPS in settings.py

- We delete:

- core/tests.py

- core/views.py

- We add:

- core/tests/

- core/tests/__init__.py

- core/tests/test_commands.py

- core/management/

- core/management/__init__.py

- core/management/commands/

- core/management/commands/wait_for_db.py

- Because of this directory structure, Django will automatically recognize wait_for_db.py as a management command that we'll be able to run using python manage.py

wait_for_db.py

...

test_commands.py

...

- Running

wait_for_db.py

- Running the tests

- We can also run our Linting:

Setting up DB migration through docker-compose

Django comes with its own ORM. We are gonna use the ORM following these steps:

- Define the models: Each model maps to a table

- Generate the migration files:

- Run these migrations to set up the database (apply the migrations to the configured DB):

To do so through Docker Compose:

- We need to update our docker-compose.yml and CI/CD (checks.yml) to handle wait_for_db and execute DB migrations:

docker-compose.yml. We replace «command» by:

.
.
command: >
  sh -c "python manage.py wait_for_db && python manage.py migrate && python manage.py runserver 0.0.0.0:8000"
.
.

checks.yml

.
.
run: docker-compose run --rm app sh -c "python manage.py wait_for_db && python manage.py test"
.
.

- Now we go ahead and run our service to check everything is ok. Don't forget to check the execution of the Action on GitHub after committing:

docker-compose down
docker-compose up
git add .
git commit -am "Configured docker compose and checks wait_for_db"
git push origin

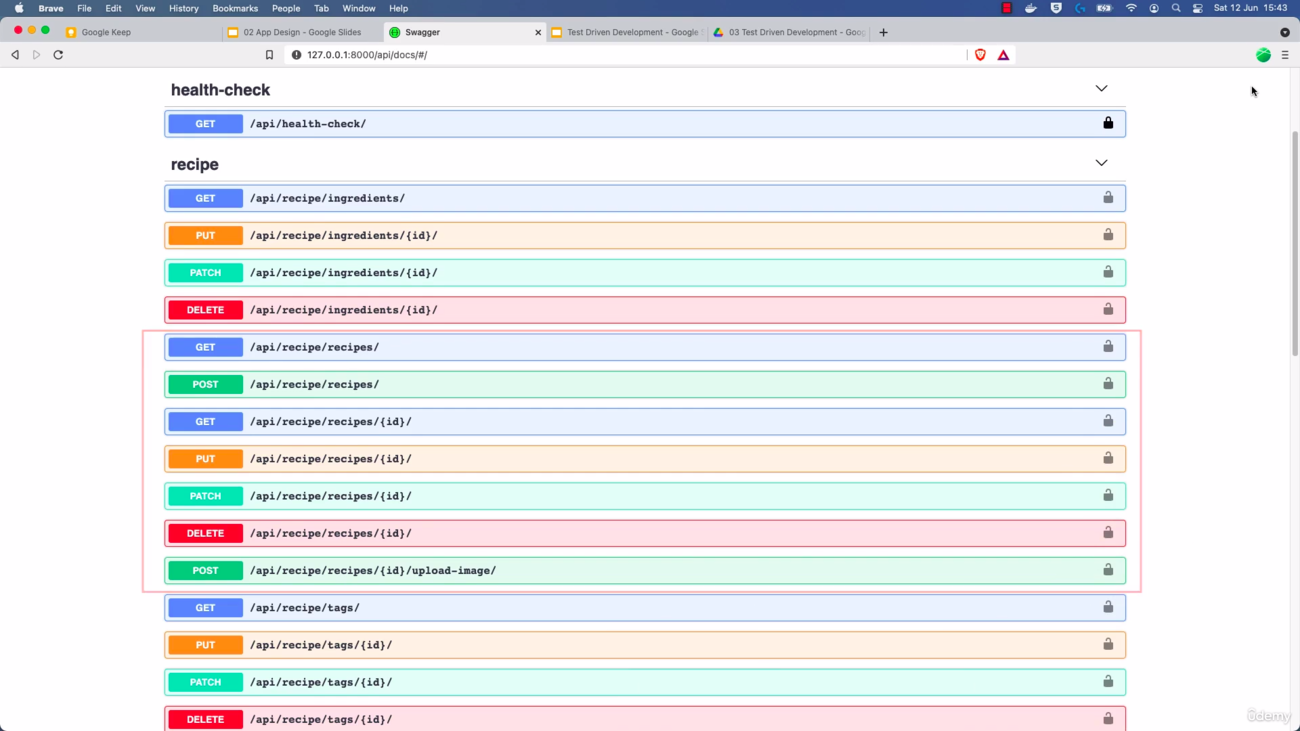

API Documentation

Automated documentation with Django REST Framework (DRF)

This section comes later in the course. That is why the files contain lines that have not been explained yet.

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237138?start=195#learning-tools

The OpenAPI Specification, previously known as the Swagger Specification, is a specification for a machine-readable interface definition language for describing, producing, consuming and visualizing web services. Previously part of the Swagger framework, it became a separate project in 2016, overseen by the OpenAPI Initiative, an open-source collaboration project of the Linux Foundation.

The OpenAPI Specification is a standardized format for describing RESTful APIs. It provides a structured and detailed overview of various aspects of the API, including endpoints, request/response formats, parameters, error handling, authentication methods, and more. It serves as a contract that defines the API's behavior and capabilities.

Documentation tools often support importing or parsing the OpenAPI Specification to automatically generate human-friendly API documentation.

By leveraging the OpenAPI Specification, API documentation tools can extract the necessary information, such as endpoint descriptions, parameter details, and response structures, to generate accurate and up-to-date API documentation. This reduces manual effort and ensures consistency between the API specification and the documentation.

Therefore, the OpenAPI Specification acts as a foundation for generating API documentation, allowing developers and API consumers to have a reliable and consistent source of information about the API's functionality, usage, and data structures.

To auto-generate documentation, we're going to use a third-party library called drf-spectacular:

drf-spectacular is a library that integrates with Django REST Framework (DRF) to generate OpenAPI/Swagger specifications for your API automatically. It provides a set of tools and decorators that allow you to document your API endpoints. It enhances the capabilities of DRF by automatically extracting information about your API views, serializers, and other components to generate comprehensive API documentation.

- drf-spectacular will allow us to generate the OpenAPI schema (the docs in JSON or YAML format). The schema is a specific component within the OpenAPI Specification: a JSON Schema-based definition that describes the structure and data types used in API requests and responses.

- It will also be used to generate a Swagger UI (a browsable web interface). This will allow us not only to visualize the API and its documentation but also to make test requests to the API.
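To give an idea of what such a schema looks like, here is a hand-written, heavily reduced OpenAPI document expressed as a Python dictionary and printed as JSON. It is only an illustration: the endpoint path, the title and the Recipe fields are made up for this example, and the real schema generated by drf-spectacular is much more detailed.

```python
import json

# A minimal OpenAPI 3 document: general info, one endpoint, one reusable data type.
schema = {
    "openapi": "3.0.3",
    "info": {"title": "Recipe API (example)", "version": "1.0.0"},
    "paths": {
        "/api/recipe/recipes/": {  # hypothetical endpoint
            "get": {
                "summary": "List recipes",
                "responses": {
                    "200": {
                        "description": "A list of recipes",
                        "content": {
                            "application/json": {
                                "schema": {
                                    "type": "array",
                                    "items": {"$ref": "#/components/schemas/Recipe"},
                                }
                            }
                        },
                    }
                },
            }
        }
    },
    "components": {
        "schemas": {
            "Recipe": {  # hypothetical data structure
                "type": "object",
                "properties": {
                    "id": {"type": "integer"},
                    "title": {"type": "string"},
                },
                "required": ["id", "title"],
            }
        }
    },
}

print(json.dumps(schema, indent=2))
```

A tool such as Swagger UI reads a document with this structure and renders each path, method, parameter and response type as a browsable page.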

Install drf-spectacular:

requirements.txt

...

drf-spectacular>=0.15.1,<0.16

Now we are going to rebuild the Docker container to make sure it installs this new package:

docker-compose build

We need to enable rest_framework and drf_spectacular in app/app/settings.py:

INSTALLED_APPS = [

'django.contrib.admin',

'django.contrib.auth',

'django.contrib.contenttypes',

'django.contrib.sessions',

'django.contrib.messages',

'django.contrib.staticfiles',

'rest_framework',

'drf_spectacular',

'core',

]

.

.

.

REST_FRAMEWORK = { # This configures the REST_FRAMEWORK to generate OpenAPI Schema using drf_spectacular

'DEFAULT_SCHEMA_CLASS': 'drf_spectacular.openapi.AutoSchema',

}

Configuring URLs:

Now we need to enable the URLs that are needed in order to serve the documentation through our Django project.

app/app/urls.py

...

from django.contrib import admin

from django.urls import path

from drf_spectacular.views import (

SpectacularAPIView,

SpectacularSwaggerView,

)

urlpatterns = [

path('admin/', admin.site.urls),

path('api/schema/', SpectacularAPIView.as_view(), name='api-schema'), # This will generate the schema for our API. That is the YAML file that describes the API

path('api/docs/', SpectacularSwaggerView.as_view(url_name='api-schema'), name='api-docs') # This will serve the swagger documentation that is going to use our schema to generate a graphical user interface for our API documentation.

]

Let's test Swagger https://www.udemy.com/course/django-python-advanced/learn/lecture/32238946#learning-tools

We start our development server:

docker-compose up

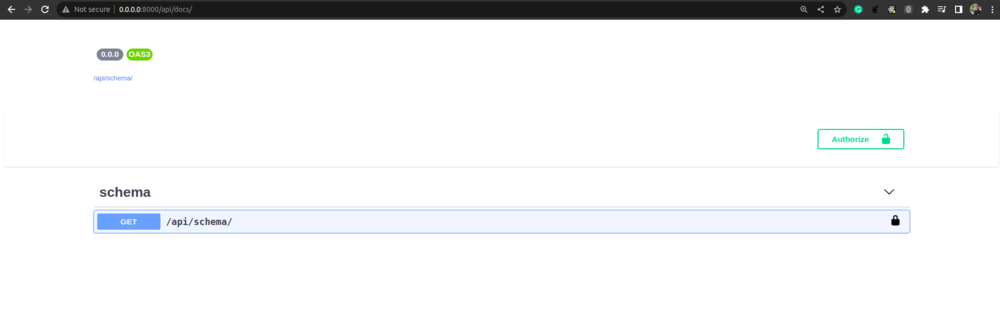

Now go to http://0.0.0.0:8000/api/docs/ and you should see the page for the swagger documentation:

Django user model - Django authentication system - Django admin system

It is important that we define a User model for our project. The default Django user model is the foundation of the Django auth system; it holds the data of the users who register in the system, and it is also used to authenticate those users by checking their passwords.

The authentication system gives us a framework of basic features that we can use for our project, including:

- Registration

- Login

- Auth

The Django authentication system integrates with the Django admin system, another feature that provides you with an admin interface with very little coding.

There are some issues about using the Django default user model. For example:

- By default, it uses a username instead of a user's email address.

- It's not very easy to customize once you have already started developing a project with the default user model.

So, even if you don't need to customize anything on the default Django user model (even if you think you just need the same functionality), I suggest that you define your own user model inside your project. If you ever decide to modify the model in the future, it will be a lot easier because you won't need to mess around with changing migrations and things like that.

How do we customize the user model:

- We have to create a User model based on the AbstractBaseUser and PermissionsMixin base classes that come built in with Django.

- Once we have defined our model, we move on to create a custom manager. The manager is mostly used for the Django CLI integration, but it's also used for other things like creating and managing objects.

- Once you've defined the model and the manager, you can set the AUTH_USER_MODEL configuration in your settings.py.

- Finally, you can create and run the migrations for your project using the new custom user model.

AbstractBaseUser:

- It provides all of the features for authentication, but it doesn't include any fields. So we need to define all of the fields for our model ourselves; that's okay because there are only a few minimal fields that we need to provide.

PermissionsMixin:

- The PermissionsMixin base class is used for the Django permissions system. Django comes with a permissions system that allows you to assign permissions to different users, and it can be used with the Django admin. In order to support that, we're going to use the PermissionsMixin base class.

- It provides all of the default fields and methods that our user model needs in order to work with Django's permissions system.

I'm going to explain some of the common issues that people have when they are customizing the Django user model:

- The first one and probably the most common issue is that you run your migrations before setting your custom model. As I mentioned, I recommend that you create your custom model before running your migrations. However, because we've already created migrations for this project, we're going to have to clear them.

- Typos in config.

- Indentation in manager or model.

Designing our custom user model

User fields:

- email (EmailField)

- name (CharField)

- is_active (BooleanField)

- is_staff (BooleanField)

User model manager:

The user model manager is used to manage the objects of our user model. This is where we can define any custom logic for creating objects in the system.

- Hashing the password: When you have an authentication system, it's best practice to hash the password with a one-way hashing algorithm, which means that the user's password cannot easily be read from the database once they've registered.

- Create superuser method: We're also going to add a couple of methods that are used by the Django command line interface (CLI).
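The idea of one-way password hashing can be sketched with the Python standard library alone. This is not Django's implementation (in the project, the hashing is done by set_password and check_password on the user model); the function names, the storage format and the iteration count below are assumptions for illustration.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor, chosen only for this sketch


def hash_password(password, salt=None):
    """Return 'salt$hash' for the password, using salted PBKDF2-HMAC-SHA256."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt.hex() + "$" + digest.hex()


def check_password(password, stored):
    """Re-hash the candidate password with the stored salt and compare."""
    salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode(), bytes.fromhex(salt_hex), ITERATIONS
    )
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(candidate.hex(), digest_hex)


stored = hash_password("testpass123")
print(stored)                                  # what would be saved in the database
print(check_password("testpass123", stored))   # correct password
print(check_password("wrongpass", stored))     # incorrect password
```

The database only ever holds the salt and the digest, so a password is verified by hashing the candidate again rather than by decrypting anything.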

Creating our user model

We first create the tests:

core/tests/test_models.py

"""
Tests for models.
"""
from django.test import TestCase
from django.contrib.auth import get_user_model


class ModelTests(TestCase):
    """Test models."""

    def test_create_user_with_email_successful(self):
        """Test creating a user with an email is successful."""
        email = 'test@example.com'
        password = 'testpass123'
        user = get_user_model().objects.create_user(
            email=email,
            password=password,
        )

        self.assertEqual(user.email, email)
        self.assertTrue(user.check_password(password))

Run the test, which should fail:

docker-compose run --rm app sh -c "python manage.py test"

Let's now create our user model:

core/models.py

"""
Database models.
"""
from django.db import models
from django.contrib.auth.models import (
    AbstractBaseUser,
    BaseUserManager,
    PermissionsMixin,
)


class UserManager(BaseUserManager):
    """Manager for users."""

    def create_user(self, email, password=None, **extra_fields):
        """Create, save and return a new user."""
        user = self.model(email=email, **extra_fields)
        user.set_password(password)
        user.save(using=self._db)

        return user


class User(AbstractBaseUser, PermissionsMixin):
    """User in the system."""
    email = models.EmailField(max_length=255, unique=True)
    name = models.CharField(max_length=255)
    is_active = models.BooleanField(default=True)
    is_staff = models.BooleanField(default=False)

    objects = UserManager()

    USERNAME_FIELD = 'email'

After creating the model we have to add it to our settings.py by adding, at the end of the file:

AUTH_USER_MODEL = 'core.User'

We can now make the migrations:

docker-compose run --rm app sh -c "python manage.py makemigrations"

The above command will create the file core/migrations/0001_initial.py. In this file, we can see how the User model will be created by Django. We can see that Django adds other fields to the model besides those that we created.

Now we can apply the migrations to the database:

docker-compose run --rm app sh -c "python manage.py wait_for_db && python manage.py migrate"

If you have followed this course, the above command should generate an error and raise InconsistentMigrationHistory(.... This is because we applied migrations earlier with the default Django user model. So, we need to clear the data from our DB, which means clearing the volume. To do so, you can first type:

docker volume ls

This will list all of the volumes on our system. You can see here that we have one called django-rest-api-recipe-app_dev-db-data. To remove this volume we do:

docker-compose down

docker volume rm django-rest-api-recipe-app_dev-db-data

docker volume ls

After that, we can go ahead and apply the migrations again and this time it should work. You will see from the message displayed on the terminal that there are many other migrations that are automatically applied by Django apart from the model that we just created.

We can now run our test again, which should now pass:

docker-compose run --rm app sh -c "python manage.py test"

Normalize email addresses

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238888#learning-tools

First, we add the test:

app/core/tests/test_models.py:

.
.
    def test_new_user_email_normalized(self):
        """Test email is normalized for new users."""
        sample_emails = [
            ['test1@EXAMPLE.com', 'test1@example.com'],
            ['Test2@Example.com', 'Test2@example.com'],
            ['TEST3@EXAMPLE.com', 'TEST3@example.com'],
            ['test4@example.COM', 'test4@example.com'],
        ]
        for email, expected in sample_emails:
            user = get_user_model().objects.create_user(email, 'sample123')
            self.assertEqual(user.email, expected)

Now, as we've been doing so far following the TDD practice, we run our test, which should fail:

docker-compose run --rm app sh -c "python manage.py test"

So, we need to add normalize_email() to the create_user method in app/core/models.py:

..

user = self.model(email=self.normalize_email(email), **extra_fields)

..

After that, we run our test again, and it should pass.
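The behaviour that the test expects can be illustrated with a standalone sketch: only the domain part of the address is lowercased, while the part before the @ is left untouched. The function below is a hypothetical re-implementation written for this note; in the project, the real work is done by the normalize_email() method that BaseUserManager provides.

```python
def normalize_email(email):
    """Lowercase the domain part of an email address; keep the local part as-is."""
    email = email or ""
    try:
        local_part, domain = email.strip().rsplit("@", 1)
    except ValueError:
        # No "@" in the value: return it unchanged and let validation reject it.
        return email
    return local_part + "@" + domain.lower()


# Same sample data as in test_new_user_email_normalized
sample_emails = [
    ["test1@EXAMPLE.com", "test1@example.com"],
    ["Test2@Example.com", "Test2@example.com"],
    ["TEST3@EXAMPLE.com", "TEST3@example.com"],
    ["test4@example.COM", "test4@example.com"],
]
for email, expected in sample_emails:
    print(email, "->", normalize_email(email), normalize_email(email) == expected)
```

This also explains why Test2@Example.com keeps its capital T in the expected value: the local part is not modified.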

Add a new feature to require email input

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238892#learning-tools

app/core/tests/test_models.py:

.
.
    def test_new_user_without_email_raises_error(self):
        """Test that creating a user without an email raises a ValueError."""
        with self.assertRaises(ValueError):
            get_user_model().objects.create_user('', 'test123')
.
.

We run the test and verify it doesn't pass. Then, we add the check to the create_user method:

app/core/models.py

.
.
        """Create, save and return a new user."""
        if not email:
            raise ValueError('User must have an email address.')
.
.

We run the test again and verify it passes:

docker-compose run --rm app sh -c "python manage.py test"

Add superuser support

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238894#learning-tools

app/core/tests/test_models.py

.
.
    def test_create_superuser(self):
        """Test creating a superuser."""
        user = get_user_model().objects.create_superuser(
            'test@example.com',
            'test123',
        )

        self.assertTrue(user.is_superuser)
        self.assertTrue(user.is_staff)
.
.

We run the test and verify it fails. Then:

app/core/models.py

.
.
        return user

    def create_superuser(self, email, password):
        """Create and return a new superuser."""
        user = self.create_user(email, password)
        user.is_staff = True
        user.is_superuser = True
        user.save(using=self._db)

        return user


class User(AbstractBaseUser, PermissionsMixin):
.
.

We run the test again and verify it passes:

docker-compose run --rm app sh -c "python manage.py test"

The following starts our development server but should also apply any migration that needs to be applied. Django only runs migrations if we update something. If nothing has changed Django won't run migrations.

docker-compose up

Now we can go to our browser and go to http://0.0.0.0:8000/admin

To log in to the Django administration dashboard, we first need to create our admin credentials. Go to the terminal and run:

docker-compose run --rm app sh -c "python manage.py createsuperuser"

Go back to http://0.0.0.0:8000/admin and log in.

Setup Django Admin

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238904#learning-tools

What is the Django Admin? It's a Graphical User Interface for models. It allows you to perform basic operations on our models, such as: Create, Read, Update, Delete

How to enable Django admin: You have to enable it for each model that we add. It's done in the admin.py file.

You can also customize Django admin: To do so, we have to create a class based on ModelAdmin or UserAdmin. Then, inside this new class, we need to override/set some class variables depending on what we are looking to do.

First, we're going to create some unit tests for our Django admin: https://www.udemy.com/course/django-python-advanced/learn/lecture/32238912#learning-tools

app/core/tests/test_admin.py

"""
Tests for the Django admin modifications.
"""
from django.test import TestCase
from django.contrib.auth import get_user_model
from django.urls import reverse
from django.test import Client


class AdminSiteTests(TestCase):
    """Tests for Django admin."""

    def setUp(self):
        """Create user and client."""
        self.client = Client()
        self.admin_user = get_user_model().objects.create_superuser(
            email='admin@example.com',
            password='testpass123',
        )
        self.client.force_login(self.admin_user)
        self.user = get_user_model().objects.create_user(
            email='user@example.com',
            password='testpass123',
            name='Test User'
        )

    def test_users_lists(self):
        """Test that users are listed on page."""
        # You'll find the different admin URL names at
        # https://docs.djangoproject.com/en/3.1/ref/contrib/admin/#reversing-admin-urls
        url = reverse('admin:core_user_changelist')
        # This makes an HTTP GET request to the URL we want to test. Because we called
        # «force_login» in setUp, the request is made authenticated as the admin user.
        res = self.client.get(url)

        # Here we check that the page (res) contains the name and email of the user we've created.
        self.assertContains(res, self.user.name)
        self.assertContains(res, self.user.email)

Now we run the test and make sure it fails since we haven't registered the model and the URL to our Django admin https://www.udemy.com/course/django-python-advanced/learn/lecture/32238914#learning-tools

docker-compose run --rm app sh -c "python manage.py test"

Now we are going to implement the code to make Django admin list users:

app/core/admin.py

"""
Django admin customization.
"""
from django.contrib import admin
from django.contrib.auth.admin import UserAdmin as BaseUserAdmin

from core import models


class UserAdmin(BaseUserAdmin):
    """Define the admin pages for users."""
    ordering = ['id']
    list_display = ['email', 'name']


# If we don't pass «UserAdmin», it would register the User model but it wouldn't
# apply the custom admin class we just added above.
admin.site.register(models.User, UserAdmin)

We run our development server with docker-compose up and go to http://0.0.0.0:8000/admin

We should now see the CORE > Users section we just implemented. However, if we click on the user we'll get an error because we haven't added support for modifying users. Let's do that now:

Adding support for modifying users

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238918#learning-tools

We are going to start by adding the test:

app/core/tests/test_admin.py

..
        self.assertContains(res, self.user.email)

    def test_edit_user_page(self):
        """Test the edit user page works."""
        url = reverse('admin:core_user_change', args=[self.user.id])
        res = self.client.get(url)

        # This ensures the page loaded successfully with an HTTP 200 response
        self.assertEqual(res.status_code, 200)

We go ahead and run our test again and make sure it fails:

docker-compose run --rm app sh -c "python manage.py test"

Let's now add support for modifying users:

app/core/admin.py

"""
Django admin customization.
"""
from django.contrib import admin
from django.contrib.auth.admin import UserAdmin as BaseUserAdmin
# This is just the function that translates the names of the fields. By convention
# it is imported as «_», but you can also use something like «translate».
from django.utils.translation import gettext_lazy as _

from core import models


class UserAdmin(BaseUserAdmin):
    """Define the admin pages for users."""
    ordering = ['id']
    list_display = ['email', 'name']
    fieldsets = (
        (None, {'fields': ('email', 'password')}),
        (_('Personal Info'), {'fields': ('name',)}),
        (
            _('Permissions'),
            {
                'fields': (
                    'is_active',
                    'is_staff',
                    'is_superuser',
                )
            }
        ),
        (_('Important dates'), {'fields': ('last_login',)}),
    )
    readonly_fields = ['last_login']


# If we don't pass «UserAdmin», it would register the User model but it wouldn't
# apply the custom admin class we just added above.
admin.site.register(models.User, UserAdmin)

Let's run our test again and make sure it passes. Then run docker-compose up, go to http://0.0.0.0:8000/admin > CORE > Users > click on the user and you should see the «change user» page.

Adding support for creating users

https://www.udemy.com/course/django-python-advanced/learn/lecture/32238922#learning-tools

app/core/tests/test_admin.py

...
        self.assertEqual(res.status_code, 200)

    def test_create_user_page(self):
        """Test the create user page works."""
        url = reverse('admin:core_user_add')
        res = self.client.get(url)

        self.assertEqual(res.status_code, 200)

We go ahead and run our test again and make sure it fails. Then we add add_fieldsets to the admin class:

app/core/admin.py

...
    readonly_fields = ['last_login']
    add_fieldsets = (
        (None, {
            'classes': ('wide',),
            'fields': (
                'email',
                'password1',
                'password2',
                'name',
                'is_active',
                'is_staff',
                'is_superuser',
            ),
        }),
    )


# If we don't pass «UserAdmin», it would register the User model but it wouldn't
# apply the custom admin class we just added above.
admin.site.register(models.User, UserAdmin)

Let's run our test again and make sure it passes. Then run docker-compose up, go to http://0.0.0.0:8000/admin > CORE > Users > ADD USER and you should see the Add user page. Go ahead and create a new user and make sure it is added to the User list page.

Building the user API

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237130#learning-tools

Overview

- The user API:

- Handle user registration

- Create an authentication token and then use it to authenticate requests, such as in the login system

- View/update the user profile

- In order to support these functionalities, we are going to add the following endpoints and HTTP methods:

user/create/:

- POST - Register a new user: This accepts an HTTP POST request that allows you to register a new user. You post data to the API and it creates a new user from that data.

user/token/:

- POST - Create new token: This accepts an HTTP POST request that allows you to generate a new token. The POST content contains the user's username or email address and the password, and the endpoint creates and returns a new token for that user to authenticate with.

user/me/:

- PUT/PATCH - Update profile: This accepts an HTTP PUT/PATCH request in order to update the user profile. Once the user is authenticated, they can call this endpoint.

- GET - View profile: It also accepts an HTTP GET request so that we can see the current data stored for that user in the system.
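The endpoint list above can be summarized from the client's point of view with a small standard-library sketch that builds (but does not send) one request per operation. The host, the api/ prefix and the build_request helper are assumptions for this illustration, and the payload values are sample data.

```python
import json
from urllib.request import Request

# Assumed host and URL prefix for this sketch; adjust them to your own setup.
BASE_URL = "http://127.0.0.1:8000/api/"


def build_request(path, method, payload=None):
    """Build (but do not send) an HTTP request with an optional JSON body."""
    data = json.dumps(payload).encode() if payload is not None else None
    request = Request(BASE_URL + path, data=data, method=method)
    if data is not None:
        request.add_header("Content-Type", "application/json")
    return request


credentials = {"email": "test@example.com", "password": "testpass123"}

register = build_request("user/create/", "POST", {**credentials, "name": "Test Name"})
create_token = build_request("user/token/", "POST", credentials)
view_profile = build_request("user/me/", "GET")
update_profile = build_request("user/me/", "PATCH", {"name": "Updated name"})

for request in (register, create_token, view_profile, update_profile):
    print(request.get_method(), request.full_url)
```

In the real API, the two user/me/ requests would additionally have to be authenticated, which is covered in the Authentication section.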

Create and enable the user app in the project

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237136#learning-tools

docker-compose run --rm app sh -c "python manage.py startapp user"

Then you can go to the user directory and delete these files that we are not going to use:

- app/user/migrations/: We are going to keep all the migrations in the core app.

- app/user/admin.py: This is also going to be in the core app.

- app/user/models.py: Same reason.

- app/user/tests.py

Create:

- app/user/tests/

- app/user/tests/__init__.py

Then go to app/app/settings.py and enable the user app in our project:

INSTALLED_APPS = [
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'core',
    'rest_framework',
    'drf_spectacular',
    'user',
]

The create user API

Write tests for the create user API

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237140#learning-tools

app/user/tests/test_user_api.py

"""
Tests for the user API.
"""
from django.test import TestCase
from django.contrib.auth import get_user_model
from django.urls import reverse

from rest_framework.test import APIClient
from rest_framework import status


CREATE_USER_URL = reverse('user:create')


def create_user(**params):
    """Create and return a new user."""
    return get_user_model().objects.create_user(**params)


# This class is for testing the methods that don't require authentication
class PublicUserApiTests(TestCase):
    """Test the public features of the user API."""

    def setUp(self):
        self.client = APIClient()

    def test_create_user_success(self):
        """Test creating a user is successful."""
        payload = {
            'email': 'test@example.com',
            'password': 'testpass123',
            'name': 'Test Name',
        }
        # This makes an HTTP POST request to our CREATE_USER_URL
        res = self.client.post(CREATE_USER_URL, payload)

        # Here we check that the endpoint returns an HTTP_201_CREATED response, which is
        # the success response code for creating objects in the database through an API
        self.assertEqual(res.status_code, status.HTTP_201_CREATED)
        # Here we validate that the user was created in the DB
        user = get_user_model().objects.get(email=payload['email'])
        self.assertTrue(user.check_password(payload['password']))
        # Here we make sure that the password is not returned in the response (so there's
        # no key called password in the response). This is for security reasons
        self.assertNotIn('password', res.data)

    # With this method we check that if we try to create another user
    # with the same email, we get an error
    def test_user_with_email_exists_error(self):
        """Test error returned if user with email exists."""
        payload = {
            'email': 'test@example.com',
            'password': 'testpass123',
            'name': 'Test Name',
        }
        create_user(**payload)
        res = self.client.post(CREATE_USER_URL, payload)

        self.assertEqual(res.status_code, status.HTTP_400_BAD_REQUEST)

    def test_password_too_short_error(self):
        """Test an error is returned if password less than 5 chars."""
        payload = {
            'email': 'test@example.com',
            'password': 'pw',
            'name': 'Test name',
        }
        res = self.client.post(CREATE_USER_URL, payload)

        self.assertEqual(res.status_code, status.HTTP_400_BAD_REQUEST)
        user_exists = get_user_model().objects.filter(
            email=payload['email']
        ).exists()
        self.assertFalse(user_exists)

We now run the test and make sure it fails.

Let's now implement our user API: https://www.udemy.com/course/django-python-advanced/learn/lecture/32237146#questions

The first thing we'll do is add a new serializer that we can use for creating objects and serializing our user object. A serializer is simply a way to convert data to and from Python objects. It takes a JSON input that might be posted to the API, validates the input against the validation rules to make sure that it is secure and correct, and then converts it to either a Python object or a model in our actual database.

There are different base classes that you can use for serialization. In this case we are using serializers.ModelSerializer, which allows us to automatically validate and save things to a specific model that we define in our serializer.
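To illustrate the idea of a serializer without any framework, here is a minimal sketch that validates posted JSON and converts it to a Python dictionary, and converts a user back to JSON without the password. The function names, the error messages and the simplistic email check are assumptions for this illustration; with Django REST Framework, ModelSerializer does this work for us.

```python
import json


class ValidationError(Exception):
    """Raised when the incoming data breaks a validation rule."""


def deserialize_user(raw_json):
    """Parse JSON text, validate it and return a dict of clean values."""
    data = json.loads(raw_json)
    errors = {}
    if "@" not in data.get("email", ""):
        errors["email"] = "Enter a valid email address."
    if len(data.get("password", "")) < 5:
        errors["password"] = "Password must have at least 5 characters."
    if not data.get("name"):
        errors["name"] = "This field is required."
    if errors:
        raise ValidationError(errors)
    return {key: data[key] for key in ("email", "password", "name")}


def serialize_user(user):
    """Convert a user dict to JSON, leaving out the write-only password."""
    return json.dumps({"email": user["email"], "name": user["name"]})


posted = '{"email": "test@example.com", "password": "testpass123", "name": "Test Name"}'
user = deserialize_user(posted)
print(serialize_user(user))

try:
    deserialize_user('{"email": "test@example.com", "password": "pw", "name": "Test"}')
except ValidationError as error:
    print("rejected:", error)
```

The two directions mirror what the tests check: invalid input is rejected before anything is saved, and the password never appears in the output.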

app/user/serializers.py

"""
Serializers for the user API View.
"""
from django.contrib.auth import get_user_model

from rest_framework import serializers


class UserSerializer(serializers.ModelSerializer):
    """Serializer for the user object."""

    class Meta:
        model = get_user_model()
        fields = ['email', 'password', 'name']
        extra_kwargs = {'password': {'write_only': True, 'min_length': 5}}

    def create(self, validated_data):
        """Create and return a user with encrypted password."""
        return get_user_model().objects.create_user(**validated_data)

Now we have to create a view that uses the serializer. We are creating a view, CreateUserView, based on CreateAPIView.

CreateAPIView handles an HTTP POST request that's designed for creating objects in the DB. It handles all of the logic for you. All you need to do is set the serializer class on the view.

So when you make the HTTP request, it goes through to the URL, which we're going to map in a minute, and then it gets passed into the CreateUserView class, which calls the serializer, creates the object and returns the appropriate response.

app/user/views.py

"""
Views for the user API.
"""
from rest_framework import generics

from user.serializers import UserSerializer


class CreateUserView(generics.CreateAPIView):
    """Create a new user in the system."""
    serializer_class = UserSerializer

app/user/urls.py

"""
URL mappings for the user API.
"""
from django.urls import path

from user import views


app_name = 'user'

urlpatterns = [
    # The name parameter that we define here is used, for example, for the reverse lookup
    # when we define «CREATE_USER_URL = reverse('user:create')» in test_user_api.py
    path('create/', views.CreateUserView.as_view(), name='create'),
]

The last thing we need to do is connect the URLs we just created to our main app.

app/app/urls.py

...
from django.contrib import admin
from django.urls import path, include
from drf_spectacular.views import (
    SpectacularAPIView,
    SpectacularSwaggerView,
)

urlpatterns = [
    path('admin/', admin.site.urls),
    path('api/schema/', SpectacularAPIView.as_view(), name='api-schema'),
    path('api/docs/', SpectacularSwaggerView.as_view(url_name='api-schema'), name='api-docs'),
    # include() allows us to include URLs from a different app
    path('api/user/', include('user.urls')),
]

Now go ahead and run the test and make sure it passes.

Authentication

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237150#questions

There are different types of authentications that are available with the Django REST framework:

- Basic: Send username and password with each request

- Token: Use a token in the HTTP header (this is the one we are going to use in this course):

- It's supported out of the box by Django REST Framework.

- JSON Web Token (JWT): Use an access and refresh token

- It requires some external libraries and packages to integrate it with Django.

- Session: This is when you store the authentication details using cookies.

Token authentication

How token authentication works:

- You start by creating a token. We need to provide an endpoint that accepts the user's email and password; it then creates a new token in our database and returns that token to the client.

- Then the client can store that token somewhere:

- If you're using a web browser: it could be in session storage, in local storage or in a cookie.

- If you're using a desktop app or you're creating a mobile app: it could be in an actual database on the local client, or you might have some other mechanism to store it in the app.

- Then every request that the client makes to the API endpoints that have to be authenticated simply includes this token in the HTTP headers of the request, which means that the request can be authenticated in our back end.
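The flow described above can be sketched with an in-memory stand-in for the token table. The TokenStore class is hypothetical and only illustrates the mechanism; in the project, Django REST Framework creates and checks the tokens, and the client sends them in a header of the form Authorization: Token <key>.

```python
import secrets


class TokenStore:
    """In-memory stand-in for the token table (hypothetical, for illustration)."""

    def __init__(self):
        self._tokens = {}  # token key -> user email

    def create_token(self, email):
        """Create a random token for the user and remember it."""
        key = secrets.token_hex(20)
        self._tokens[key] = email
        return key

    def authenticate(self, headers):
        """Return the user email for a valid 'Authorization: Token <key>' header."""
        scheme, _, key = headers.get("Authorization", "").partition(" ")
        if scheme != "Token":
            return None
        return self._tokens.get(key)


store = TokenStore()

# 1. The client posts its credentials and receives a token.
token = store.create_token("test@example.com")

# 2. The client stores the token and sends it with every authenticated request.
headers = {"Authorization": "Token " + token}
print(store.authenticate(headers))

# 3. Requests with an unknown token, or without one, are not authenticated.
print(store.authenticate({"Authorization": "Token invalid"}))
print(store.authenticate({}))
```

Each call to authenticate() is a lookup in the store, which corresponds to the database request that token authentication needs for every authenticated call.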

Pros and cons of token authentication:

- Pros:

- Supported out of the box by Django REST Framework

- Simple to use

- Supported by all clients

- Avoids sending the username/password with each request, as is the case with basic authentication, which is not secure.

- Cons:

- The token needs to be kept secure on the client side. If the token gets compromised on the client side, it can be used to make requests to the API.

- Requires database requests: Every time you authenticate, a request needs to be made to the DB. This is not a problem in most cases, unless you are building something that handles millions of users. In that case we should use something like JSON Web Token (JWT).

How token authentication handles logging out:

The log-out happens entirely on the client side and simply works by deleting the token. You create the token when the user logs in, and when the user logs out you just clear the token from the device; the user is then effectively logged out. The lesson also includes an important comment about why a logout API is not created.

Token API

Write tests for the token API

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237158#questions

app/user/tests/test_user_api.py

.
.
.
CREATE_USER_URL = reverse('user:create')
TOKEN_URL = reverse('user:token')
.
.
.
    def test_create_token_for_user(self):
        """Test generates token for valid credentials."""
        user_details = {
            'name': 'Test Name',
            'email': 'test@example.com',
            'password': 'test-user-password123',
        }
        create_user(**user_details)

        payload = {
            'email': user_details['email'],
            'password': user_details['password'],
        }
        res = self.client.post(TOKEN_URL, payload)

        self.assertIn('token', res.data)
        self.assertEqual(res.status_code, status.HTTP_200_OK)

    def test_create_token_bad_credentials(self):
        """Test returns error if credentials invalid."""
        create_user(email='test@example.com', password='goodpass')

        payload = {'email': 'test@example.com', 'password': 'badpass'}
        res = self.client.post(TOKEN_URL, payload)

        self.assertNotIn('token', res.data)
        self.assertEqual(res.status_code, status.HTTP_400_BAD_REQUEST)

    def test_create_token_email_not_found(self):
        """Test error returned if user not found for given email."""
        payload = {'email': 'test@example.com', 'password': 'pass123'}
        res = self.client.post(TOKEN_URL, payload)

        self.assertNotIn('token', res.data)
        self.assertEqual(res.status_code, status.HTTP_400_BAD_REQUEST)

    def test_create_token_blank_password(self):
        """Test posting a blank password returns an error."""
        payload = {'email': 'test@example.com', 'password': ''}
        res = self.client.post(TOKEN_URL, payload)

        self.assertNotIn('token', res.data)
        self.assertEqual(res.status_code, status.HTTP_400_BAD_REQUEST)

We run the test and verify it doesn't pass

Implementing the token API: https://www.udemy.com/course/django-python-advanced/learn/lecture/32237168?start=255#questions

The first thing we need to do is to add rest_framework.authtoken to INSTALLED_APPS:

app/app/settings.py

...
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'rest_framework',
    'rest_framework.authtoken',
    'drf_spectacular',
    'core',
    'user',
...

Now we need to create an AuthTokenSerializer:

app/user/serializers.py

.
.
.
from django.contrib.auth import (
    get_user_model,
    authenticate,
)
# _ is the common alias for translations with Django
from django.utils.translation import gettext as _
.
.
.
class AuthTokenSerializer(serializers.Serializer):
    """Serializer for the user auth token."""
    email = serializers.EmailField()
    password = serializers.CharField(
        # With this input type the text is hidden when using the browsable API
        # (I did not fully understand this part)
        style={'input_type': 'password'},
        trim_whitespace=False,
    )

    # The validate() method is called in the validation stage by our view. When the data
    # is posted to the view, the view passes it to the serializer, which then calls
    # validate() to check that the data is correct
    def validate(self, attrs):
        """Validate and authenticate the user."""
        email = attrs.get('email')
        password = attrs.get('password')
        # This function checks that the username (in our case the email) and the password
        # match. If they are correct it returns the user. If not, it returns nothing
        user = authenticate(
            request=self.context.get('request'),
            username=email,
            password=password,
        )
        if not user:
            msg = _('Unable to authenticate with provided credentials.')
            raise serializers.ValidationError(msg, code='authorization')

        attrs['user'] = user
        return attrs

Now we need to create a view that uses the serializer so that we can link that up to a URL so we can handle token authentication.

app/user/views.py

"""
Views for the user API.
"""
from rest_framework import generics
from rest_framework.authtoken.views import ObtainAuthToken
from rest_framework.settings import api_settings

from user.serializers import (
    UserSerializer,
    AuthTokenSerializer,
)


class CreateUserView(generics.CreateAPIView):
    """Create a new user in the system."""
    serializer_class = UserSerializer


class CreateTokenView(ObtainAuthToken):
    """Create a new auth token for user."""
    serializer_class = AuthTokenSerializer
    renderer_classes = api_settings.DEFAULT_RENDERER_CLASSES

Finally, we define our token URL:

app/user/urls.py

...
urlpatterns = [
    path('create/', views.CreateUserView.as_view(), name='create'),
    path('token/', views.CreateTokenView.as_view(), name='token'),
]
...

Now we run the tests and make sure they pass.

Manage user API

Writing tests for the manage user API

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237172?start=150#questions

app/user/tests/test_user_api.py

.
.
.
ME_URL = reverse('user:me')
.
.
.
    def test_retrieve_user_unauthorized(self):
        """Test authentication is required for users."""
        res = self.client.get(ME_URL)

        self.assertEqual(res.status_code, status.HTTP_401_UNAUTHORIZED)


# We use a separate class because we handle the authentication in the setUp method,
# which is called automatically before each test. This way we don't need to duplicate
# the code for authenticating (or mocking the authentication) with the API.
class PrivateUserApiTests(TestCase):
    """Test API requests that require authentication."""

    def setUp(self):
        self.user = create_user(
            email='test@example.com',
            password='testpass123',
            name='Test Name',
        )
        self.client = APIClient()
        self.client.force_authenticate(user=self.user)

    def test_retrieve_profile_success(self):
        """Test retrieving profile for logged in user."""
        res = self.client.get(ME_URL)

        self.assertEqual(res.status_code, status.HTTP_200_OK)
        self.assertEqual(res.data, {
            'name': self.user.name,
            'email': self.user.email,
        })

    def test_post_me_not_allowed(self):
        """Test POST is not allowed for the me endpoint."""
        res = self.client.post(ME_URL, {})

        self.assertEqual(res.status_code, status.HTTP_405_METHOD_NOT_ALLOWED)

    def test_update_user_profile(self):
        """Test updating the user profile for the authenticated user."""
        payload = {'name': 'Updated name', 'password': 'newpassword123'}

        res = self.client.patch(ME_URL, payload)

        self.user.refresh_from_db()
        self.assertEqual(self.user.name, payload['name'])
        self.assertTrue(self.user.check_password(payload['password']))
        self.assertEqual(res.status_code, status.HTTP_200_OK)

Run the test and make sure it fails.

Implementing the manage user API

https://www.udemy.com/course/django-python-advanced/learn/lecture/32237176#questions

We're going to reuse the user serializer, because the data we need here is the same. The same serializer can handle both registering (creating) users and updating them. What we do need is a new update method that is called when the user is updated. Without it, updating the user profile would store the password in clear text, which we don't want. We want the password to be hashed and put through the proper process for setting a password.

app/user/serializers.py

. . .
        return get_user_model().objects.create_user(**validated_data)

    def update(self, instance, validated_data):
        """Update and return user."""
        password = validated_data.pop('password', None)
        user = super().update(instance, validated_data)

        if password:
            user.set_password(password)
            user.save()

        return user


class AuthTokenSerializer(serializers.Serializer):
. . .
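The following is a minimal, framework-free sketch of the same pattern, included only to make the reasoning concrete: the password is popped out of the validated data so it is never assigned as a plain field, and then it is passed through a hashing step. FakeUser and update_user are hypothetical names invented for this illustration, and the PBKDF2 storage format shown here is an assumption that does not match Django's real password hashers.

```python
import hashlib
import hmac
import os


class FakeUser:
    """Hypothetical stand-in for a Django user model (illustration only)."""

    def __init__(self, name=""):
        self.name = name
        self.password = ""  # holds "salt$hash", never the raw password

    def set_password(self, raw_password):
        # Hash with a random salt; Django's real hashers use another format.
        salt = os.urandom(16)
        digest = hashlib.pbkdf2_hmac(
            "sha256", raw_password.encode(), salt, 100_000
        )
        self.password = f"{salt.hex()}${digest.hex()}"

    def check_password(self, raw_password):
        salt_hex, digest_hex = self.password.split("$")
        digest = hashlib.pbkdf2_hmac(
            "sha256", raw_password.encode(), bytes.fromhex(salt_hex), 100_000
        )
        return hmac.compare_digest(digest.hex(), digest_hex)


def update_user(user, validated_data):
    """Mirror the serializer's update(): pop the password, then hash it."""
    data = dict(validated_data)  # copy so the caller's dict is untouched
    password = data.pop("password", None)
    for field, value in data.items():  # plain fields are assigned directly
        setattr(user, field, value)
    if password:
        user.set_password(password)
    return user
```

If the password were left in the data and assigned like any other field, user.password would contain the raw string, which is exactly the situation the update method in the serializer avoids.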

app/user/views.py

. . .
from rest_framework import generics, authentication, permissions
. . .
class ManageUserView(generics.RetrieveUpdateAPIView):
    """Manage the authenticated user."""
    serializer_class = UserSerializer
    authentication_classes = [authentication.TokenAuthentication]
    permission_classes = [permissions.IsAuthenticated]

    def get_object(self):
        """Retrieve and return the authenticated user."""
        return self.request.user

app/user/urls.py

urlpatterns = [
    path('create/', views.CreateUserView.as_view(), name='create'),
    path('token/', views.CreateTokenView.as_view(), name='token'),
    path('me/', views.ManageUserView.as_view(), name='me'),
]

Now go ahead and run the tests and make sure they pass.

Reviewing and testing the user API in the browser

docker-compose up

Then go to http://0.0.0.0:8000/api/docs

Take a look at the course video to see how to review and test our API using the Swagger Browsable API: https://www.udemy.com/course/django-python-advanced/learn/lecture/32237180#questions
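Outside the browser, the me endpoint can also be exercised from a script. The sketch below only builds the request object and does not send it. It assumes the API runs locally on port 8000 and that the user URLs are mounted under /api/user/ (that prefix is not shown in these notes, so adjust it to your project). build_me_request is a hypothetical helper name. The "Token" prefix in the Authorization header is the scheme used by Django REST Framework's TokenAuthentication.

```python
import json
import urllib.request

# Assumptions: the API is served locally on port 8000 and the user URLs are
# mounted under /api/user/; adjust both to match your project.
ME_URL = "http://127.0.0.1:8000/api/user/me/"


def build_me_request(token, payload=None):
    """Build (but do not send) a request to the 'me' endpoint.

    With no payload this is a GET; with a payload it is a PATCH.
    """
    headers = {"Authorization": f"Token {token}"}
    data = None
    method = "GET"
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
        method = "PATCH"
    return urllib.request.Request(
        ME_URL, data=data, headers=headers, method=method
    )
```

With the development server running, passing the result to urllib.request.urlopen sends the request; the token is the value returned by the token endpoint for your user.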

Deployment

https://www.udemy.com/course/django-python-advanced/learn/lecture/32236422#overview

There are several ways to deploy a Django app:

Check this video out: The 4 best ways to deploy a Django application: https://www.youtube.com/watch?v=IoxHUrbiqUo

- Directly on a server:

- Run directly on server

- Docker (This is the way it's done in this course)

- Serverless cloud:

- Google Cloud Run / Google App Engine

- AWS Elastic Beanstalk / ECS Fargate

In this course we are going to deploy our app directly on a server using Docker.

Overview

There is a very good explanation of the deployment process at this lesson: https://www.udemy.com/course/django-python-advanced/learn/lecture/32236426#overview

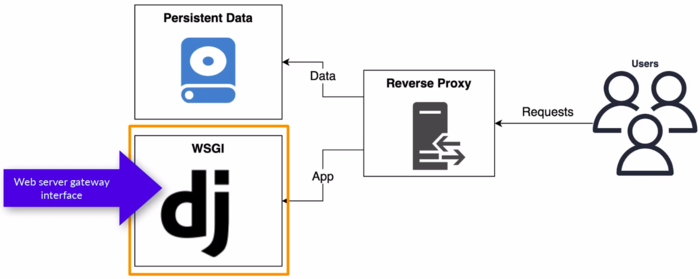

- WSGI stands for Web Server Gateway Interface. It is a specification that defines a standardized interface between web servers and web applications or frameworks in Python. WSGI acts as a middle layer or contract between web servers (like Apache or Nginx) and Python web applications, allowing them to communicate and work together seamlessly.

- The purpose of WSGI is to provide a common API that web servers and web applications can adhere to, enabling interoperability and portability. It allows developers to write web applications or frameworks once and then deploy them on different web servers without making any modifications.

- We're gonna use uWSGI (pronounced "u whiskey"). Another very popular WSGI server is Gunicorn (Green Unicorn).

- Reverse proxy using a Web server application:

- Why use a reverse proxy:

- A WSGI server is great at executing Python but not great at serving static files (static content like images, CSS, JavaScript).

- A web server, on the other hand, is really efficient at serving static files.

- We're gonna use nginx

- Docker Compose is used to pull all of these services together and run them on our server.

- Handling configurations:

- Application configuration means setting things such as the database password and the Django secret key, values that we currently store inside our settings file.

- You can't just keep everything on GitHub because it's really insecure. Also, sometimes you want to deploy your application on multiple servers, each requiring a slightly different configuration, so it wouldn't make sense to store these values in source control.

- There are different ways of handling configurations:

- Environment variables (This is the approach we are using in this course)

- Secret manager: The other approach is to use a secret manager. These are generally third-party tools available on platforms such as AWS or Google Cloud, and they require that you integrate your application with them.
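To make the WSGI interface described in this overview concrete, here is a minimal sketch of a WSGI application written by hand. It is not part of the course code. In a Django project the equivalent callable is generated for you in the project's wsgi.py module, and a WSGI server such as uWSGI or Gunicorn is what calls it for every request.

```python
def application(environ, start_response):
    """A minimal WSGI application: the callable a WSGI server invokes.

    environ is a dict describing the request; start_response is a callback
    used to send the status line and headers before the body is returned.
    """
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}\n".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]  # an iterable of byte strings
```

For a quick local try, the standard library's wsgiref.simple_server.make_server("127.0.0.1", 8001, application).serve_forever() can serve this callable (the port is an arbitrary choice); that server is meant for development only, which is one reason a dedicated WSGI server is used in deployment.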

Adding the new WSGI server to the project

https://www.udemy.com/course/django-python-advanced/learn/lecture/32236430#overview

In this lesson, we're going to be adding the WSGI server and installing the application in our project.

Dockerfile

COPY ./scripts /scripts

.

.

apk add --update --no-cache --virtual .tmp-build-deps \

build-base postgresql-dev musl-dev zlib zlib-dev && \

build-base postgresql-dev musl-dev zlib zlib-dev linux-headers && \

.

.

chmod -R 755 /vol && \

chmod -R +x /scripts

.

.

ENV PATH="/scripts:/py/bin:$PATH"

.

.

CMD ["run.sh"]

linux-headers is a requirement for the uWSGI installation. We added it to .tmp-build-deps because it is only required during installation and can be removed afterwards. (Of the two build-base lines above, the first is the previous version and the second, with linux-headers, replaces it.)

run.sh is the name of the script we'll create that runs our application.

- The command at the bottom (CMD ["run.sh"]) is the default command that's run for Docker containers spawned from the image built from this Dockerfile.

- You can override this command using Docker Compose, and we will be overriding it for our development server because our development server is going to use the manage.py runserver command instead of uWSGI. However, the default is going to be used to run our service in uWSGI when we deploy our application.

scripts/run.sh

#!/bin/sh

set -e

python manage.py wait_for_db

python manage.py collectstatic --noinput

python manage.py migrate

uwsgi --socket :9000 --workers 4 --master --enable-threads --module app.wsgi

set -e: if any command fails, the whole script fails immediately. If any portion of our script fails, we want it to crash so that we know about the failure and can fix it, instead of it just running the next command.
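The fail-fast behaviour of set -e can be sketched in Python as well, purely as an illustration of the idea (the course itself uses the shell script above). run_steps is a hypothetical helper; it relies on subprocess.run with check=True, which raises CalledProcessError when a command exits with a non-zero status.

```python
import subprocess
import sys


def run_steps(steps):
    """Run each command in order; stop at the first one that fails.

    check=True makes subprocess.run raise CalledProcessError on a non-zero
    exit status, so later steps never run, which is the effect of `set -e`.
    """
    completed = []
    for step in steps:
        subprocess.run(step, check=True)
        completed.append(step)
    return completed
```

For example, run_steps([[sys.executable, "manage.py", "wait_for_db"], [sys.executable, "manage.py", "migrate"]]) would not attempt the migration if waiting for the database failed.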

requirements.txt

.

.

uwsgi>=2.0.19,<2.1

Now we are going to build our Docker image to make sure that it still builds successfully:

docker-compose build

Adding the reverse proxy configuration

https://www.udemy.com/course/django-python-advanced/learn/lecture/32236434?start=15#overview

proxy/default.conf.tpl

server {

listen ${LISTEN_PORT};

location /static {

alias /vol/static;

}

location / {

uwsgi_pass ${APP_HOST}:${APP_PORT};

include /etc/nginx/uwsgi_params;

client_max_body_size 10M;

}

}

.tpl refers to template

proxy/uwsgi_params https://uwsgi-docs.readthedocs.io/en/latest/Nginx.html#what-is-the-uwsgi-params-file

uwsgi_param QUERY_STRING $query_string;

uwsgi_param REQUEST_METHOD $request_method;

uwsgi_param CONTENT_TYPE $content_type;

uwsgi_param CONTENT_LENGTH $content_length;

uwsgi_param REQUEST_URI $request_uri;

uwsgi_param PATH_INFO $document_uri;

uwsgi_param DOCUMENT_ROOT $document_root;

uwsgi_param SERVER_PROTOCOL $server_protocol;

uwsgi_param REMOTE_ADDR $remote_addr;

uwsgi_param REMOTE_PORT $remote_port;

uwsgi_param SERVER_ADDR $server_addr;

uwsgi_param SERVER_PORT $server_port;

uwsgi_param SERVER_NAME $server_name;

This is the script that is going to be used to start our proxy service.

proxy/run.sh

#!/bin/sh

set -e

envsubst < /etc/nginx/default.conf.tpl > /etc/nginx/conf.d/default.conf

nginx -g 'daemon off;'

- envsubst takes /etc/nginx/default.conf.tpl, substitutes the environment variables and outputs the result to /etc/nginx/conf.d/default.conf.

- nginx -g 'daemon off;' starts the server and makes sure we run nginx in the foreground. Usually when you start nginx on a server, it runs in the background and you interact with it through some kind of service manager. However, because we're running this in a Docker container, we want the service to run in the foreground, which means it is the primary thing being run by that Docker container.
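The substitution step performed by envsubst can be imitated in Python with string.Template, which understands the same ${NAME} placeholder syntax. This is only a sketch to show what the template rendering does; the proxy container itself uses envsubst, and render_conf and the shortened template below are hypothetical.

```python
from string import Template

# Same shape as proxy/default.conf.tpl, shortened for the example.
CONF_TEMPLATE = """server {
    listen ${LISTEN_PORT};

    location / {
        uwsgi_pass ${APP_HOST}:${APP_PORT};
    }
}
"""


def render_conf(template_text, env):
    """Replace ${NAME} placeholders with values from env.

    Unlike envsubst, which substitutes an empty string for unset variables,
    substitute() raises KeyError, which surfaces a missing setting early.
    """
    return Template(template_text).substitute(env)
```

Calling render_conf(CONF_TEMPLATE, {"LISTEN_PORT": "8000", "APP_HOST": "app", "APP_PORT": "9000"}) yields a configuration that listens on port 8000 and forwards requests to app:9000, matching the defaults set in the proxy Dockerfile.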

Creating the proxy Dockerfile

Creating the Dockerfile that will run nginx as a service for our project.

https://www.udemy.com/course/django-python-advanced/learn/lecture/32236440#overview

proxy/Dockerfile

FROM nginxinc/nginx-unprivileged:1-alpine

LABEL maintainer="londonappdeveloper.com"

COPY ./default.conf.tpl /etc/nginx/default.conf.tpl

COPY ./uwsgi_params /etc/nginx/uwsgi_params

COPY ./run.sh /run.sh

ENV LISTEN_PORT=8000

ENV APP_HOST=app

ENV APP_PORT=9000

USER root

RUN mkdir -p /vol/static && \

chmod 755 /vol/static && \

touch /etc/nginx/conf.d/default.conf && \

chown nginx:nginx /etc/nginx/conf.d/default.conf && \

chmod +x /run.sh

VOLUME /vol/static

USER nginx

CMD ["/run.sh"]

Now, we need to test building the image:

cd proxy

docker build .

Handling configurations

https://www.udemy.com/course/django-python-advanced/learn/lecture/32236444?start=15#overview

We're gonna be using environment variables:

- We're going to store these configuration values in a file on the server that we're running the application on.

- Then we're going to use Docker Compose to retrieve these values, and we're going to assign them or pass them into the applications that are running.
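On the application side, values passed in this way are read from the process environment. The sketch below shows one way that could look; load_settings is a hypothetical helper and the fallback value for DB_HOST is an assumption. The variable names are the ones passed to the app service in docker-compose-deploy.yml.

```python
import os


def load_settings(environ=os.environ):
    """Build a settings dict from environment variables (hypothetical helper)."""
    hosts = environ.get("ALLOWED_HOSTS", "")
    return {
        # No default: a missing secret key should fail loudly in production.
        "SECRET_KEY": environ["SECRET_KEY"],
        # Comma-separated list; empty items are dropped.
        "ALLOWED_HOSTS": [h.strip() for h in hosts.split(",") if h.strip()],
        "DATABASE": {
            "HOST": environ.get("DB_HOST", "db"),  # assumed fallback
            "NAME": environ.get("DB_NAME"),
            "USER": environ.get("DB_USER"),
            "PASSWORD": environ.get("DB_PASS"),
        },
    }
```

Keeping the lookup in one place makes it easy to see which values the deployment must provide, and the same code then works unchanged on servers that need different configurations.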

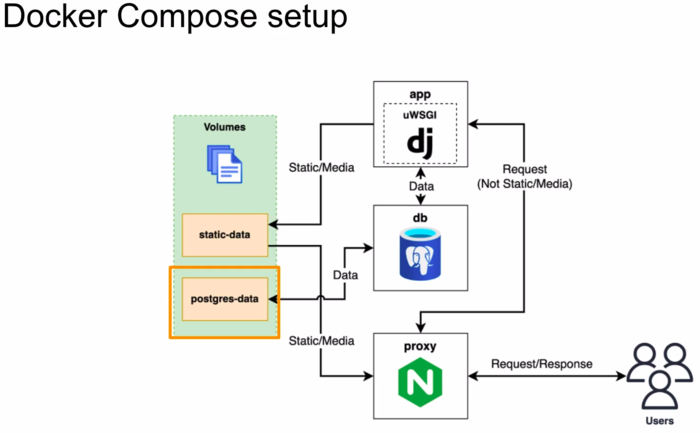

Creating a docker compose conf

This is going to be used as the deployment Docker Compose file.

Our docker-compose.yml is going to be used for local development, but we're going to have a separate Docker Compose file just for deployment (docker-compose-deploy.yml);

this means we can keep deployment-specific configuration in docker-compose-deploy.yml, because the deployment configuration is going to be slightly different from our docker-compose.yml

docker-compose-deploy.yml

version: "3.9"

services:

app:

build:

context: .

restart: always

volumes:

- static-data:/vol/web

environment:

- DB_HOST=db

- DB_NAME=${DB_NAME}

- DB_USER=${DB_USER}

- DB_PASS=${DB_PASS}

- SECRET_KEY=${DJANGO_SECRET_KEY}

- ALLOWED_HOSTS=${DJANGO_ALLOWED_HOSTS}

depends_on:

- db

db:

image: postgres:13-alpine

restart: always

volumes: