Difference between revisions of "Supervised Machine Learning for Fake News Detection"

Revisions by Adelo Vieira (31 intermediate revisions by the same user not shown)

| Line 6: | Line 6: | ||

<br /> | <br /> | ||

<span style="background:#E6E6FA ">'''The final results of this project are:'''</span> | <span style="background:#E6E6FA ">'''The final results of this project are:'''</span> | ||

| + | <div style="margin-top:15px"> | ||

| + | <section begin=fake_news_result1 /> | ||

| + | <ul> <li class="entre-li-n4 entre-li-n4-first"> <div style="text-align:left"> <html> <p class="desplaza" style="margin-top:-5pt"> | ||

| + | <a style="font-weight:bold" href="http://fakenewsdetector.sinfronteras.ws"> | ||

| + | http://fakenewsdetector.sinfronteras.ws | ||

| + | </a> | ||

| + | <span> | ||

| + | This is the link to a Web Application that has been created to easily interact with the Machine Learning Models created. It allows us to determine if a News Article is Fake or Reliable by entering the text into an input field. The input text will be processed by the Machine Learning Models at the back-end and the result will be sent back to the client. This Web App was created using <a href="http://shiny.rstudio.com/">Shiny</a>, an R package that can be used to build interactive web apps straight from R. | ||

| + | </span> | ||

| + | </p> </html> </div> </li> </ul> | ||

| + | <section end=fake_news_result1 /> | ||

| + | </div> | ||

| − | + | <div style="margin-top:20px"> | |

| − | + | <section begin=fake_news_result2 /> | |

| − | + | <ul> <li class="entre-li"> <div style="text-align:left"> <html> <p class="desplaza" style="margin-top:-5pt"> | |

| − | + | <a style="font-weight:bold" href="https://github.com/adeloaleman/RFakeNewsDetector"> | |

| − | + | https://github.com/adeloaleman/RFakeNewsDetector | |

| − | + | </a> | |

| + | <span> | ||

| + | This is the link to a Github repository that contains a R Library we have created to package the Machine Learning Models built. This package contains essentially three functions: <span style="font-weight:bold">modelNB()</span>, <span style="font-weight:bold">modelSVM()</span> and <span style="font-weight:bold">modelXGBoost()</span>. These functions take a news article as argument and, using the Models created, return the authenticity tag («fake (1)» or «reliable (0)») | ||

| + | </span> | ||

| + | </p> </html> </div> </li> </ul> | ||

| + | <section end=fake_news_result2 /> | ||

| + | :*'''modelNB():''' Based on the the Naive Bayes Model. | ||

| + | :*'''modelSVM():''' Based on the Support Vector Machine Model. | ||

| + | :*'''modelXGBoost():''' Based on the Extreme Gradient Boosting Model. | ||

| + | </div> | ||

| Line 24: | Line 45: | ||

<span style="background:#E6E6FA "> '''What we call Fake News in this project:'''</span> | <span style="background:#E6E6FA "> '''What we call Fake News in this project:'''</span> | ||

| − | *Deliberately distorted information that secretly leaked into the communication process in order to deceive and manipulate ( | + | *Deliberately distorted information that secretly leaked into the communication process in order to deceive and manipulate (Vladimir Bitman). |

:Therefore, our Machine Learning Models are only able to detect this kind of disinformation: '''Fake News Articles that were''' <span style="color:#FF0000">'''deliberately'''</span> '''created in order to deceive and manipulate''' | :Therefore, our Machine Learning Models are only able to detect this kind of disinformation: '''Fake News Articles that were''' <span style="color:#FF0000">'''deliberately'''</span> '''created in order to deceive and manipulate''' | ||

| Line 31: | Line 52: | ||

<!-- | <!-- | ||

==Introduction for the project== | ==Introduction for the project== | ||

| − | In recent years, with the | + | In recent years, with the growth of the Web 2.0, it is really easy for anyone to publish information on the Web without any verification of its authenticity. |

This fact has driven a radical growth of online Fake News. | This fact has driven a radical growth of online Fake News. | ||

| − | Many | + | Many research papers from well know institutions confirm the growth of fake news and the problem that this reality is generating in our society. According to a study conducted by the Joint Research Centre of the European Commission [], it is estimated that the number of false news has increased. |

| − | Another interesting research about this topic has been | + | Another interesting research about this topic has been conducted by BBC Trending []. In this article, ... |

| − | An additional fact that gives us a relevant measure of the increase of the interest in the fake news problematic, is the growth of the frequency of "fake news" Google searches, which can be | + | An additional fact that gives us a relevant measure of the increase of the interest in the fake news problematic, is the growth of the frequency of "fake news" Google searches, which can be verified at https://trends.google.com. In Figure xx we can see the radical growth of online searches related to this topic since 2016. |

| Line 48: | Line 69: | ||

| − | Because of the Alarming Fake News Statistics, the interest | + | Because of the Alarming Fake News Statistics, the interest in the fake news topic has also greatly increased |

| Line 192: | Line 213: | ||

==How to calculate the accuracy of the Model== | ==How to calculate the accuracy of the Model== | ||

| − | The accuracy of the model is a measure used to evaluate how well the model is working. In our case, our model is used to classify news articles as «fake (1)» or «reliable (0)». Therefore, to | + | The accuracy of the model is a measure used to evaluate how well the model is working. In our case, our model is used to classify news articles as «fake (1)» or «reliable (0)». Therefore, to calculate the accuracy we have to compare the results returned for the Model with the labels of the news article. |

| Line 206: | Line 227: | ||

*Then we used the Model created to classify the articles of the 30% of this data that has been reserved as Test data. | *Then we used the Model created to classify the articles of the 30% of this data that has been reserved as Test data. | ||

*Because it is a labeled data, we know that the authenticity tag of the 3 articles of our Test data example are: 0, 1, 1 (reliable, fake, fake) | *Because it is a labeled data, we know that the authenticity tag of the 3 articles of our Test data example are: 0, 1, 1 (reliable, fake, fake) | ||

| − | *Now, | + | *Now, suppose that our Machine Learning Model returns 0, 0, 1 when classifying these 3 news articles of the Test data. |

| − | *So, two out of the three classifications that the model has performed were correct. We can therefore calculate the accuracy as: | + | *So, two out of the three classifications that the model has performed were correct. We can, therefore, calculate the accuracy as: |

| Line 220: | Line 241: | ||

==Procedure to build the Supervised Machine Learning Model== | ==Procedure to build the Supervised Machine Learning Model== | ||

| − | Because one of the goals of this project is to evaluate performance of different algorithms, we built models for each dataset using 3 algorithms: | + | Because one of the goals of this project is to evaluate the performance of different algorithms, we built models for each dataset using 3 algorithms: |

*Naive Bayes (NB): Implemented through the e1071 R Library | *Naive Bayes (NB): Implemented through the e1071 R Library | ||

| Line 271: | Line 292: | ||

<br /> | <br /> | ||

===Cleaning the data=== | ===Cleaning the data=== | ||

| − | We need to take everything that doesn't contribute to the analysis out of the data before | + | We need to take everything that doesn't contribute to the analysis out of the data before running the algorithm. The cleaning of the data used in this project includes: |

*Convert to lower-case letters. | *Convert to lower-case letters. | ||

| Line 277: | Line 298: | ||

*Remove numbers. | *Remove numbers. | ||

*Remove blank space | *Remove blank space | ||

| − | *Remove stopwords: Stop words are commonly used words (a, an, and, the, this, those). The reason why stop words should be removed is that we can focus on the words that really differentiate the articles and not | + | *Remove stopwords: Stop words are commonly used words (a, an, and, the, this, those). The reason why stop words should be removed is that we can focus on the words that really differentiate the articles and not the words that are in all the articles. |

| − | *Stemming Words: Trimming words | + | *Stemming Words: Trimming words such as calling, called and calls to call. |

<syntaxhighlight lang="R"> | <syntaxhighlight lang="R"> | ||

| Line 298: | Line 319: | ||

<br /> | <br /> | ||

===Building the Document-Term Matrix=== | ===Building the Document-Term Matrix=== | ||

| − | To be able to run a Machine Learning algorithm, we first need to transform each news article into a numerical representation in the form of a vector. In <xr id="fig:DocumentTermMatrix" />, we show how to build the Document-Term Matrix. This matrix will be the numerical representation that a Machine Learning algorithm is able to understand. As you can see, each | + | To be able to run a Machine Learning algorithm, we first need to transform each news article into a numerical representation in the form of a vector. In <xr id="fig:DocumentTermMatrix" />, we show how to build the Document-Term Matrix. This matrix will be the numerical representation that a Machine Learning algorithm is able to understand. As you can see, each column of this matrix represents a word in the training data. Thus, each document is defined by the frequency of the words that are in the dictionary composed for all the terms in our data. |

<div style="text-align: center;"> | <div style="text-align: center;"> | ||

| Line 304: | Line 325: | ||

<!-- <pdf width="2000" height="630">File:Document-Term Matrix.pdf</pdf> --> | <!-- <pdf width="2000" height="630">File:Document-Term Matrix.pdf</pdf> --> | ||

[[File:Document-Term Matrix.png|750px|thumb|center| | [[File:Document-Term Matrix.png|750px|thumb|center| | ||

| − | <caption>The Document-Term Matrix</caption> | + | <caption> |

| + | The Document-Term Matrix <br/> | ||

| + | [[File:Document-Term Matrix.pdf]] [[File:Document-Term Matrix.ods]] | ||

| + | </caption> | ||

]] | ]] | ||

| − | |||

| − | |||

</figure> | </figure> | ||

</div> | </div> | ||

| Line 358: | Line 380: | ||

*First we need to add the labels to our DTM to create the Matrix that we pass to the xgboost function. | *First we need to add the labels to our DTM to create the Matrix that we pass to the xgboost function. | ||

| − | *We implement a decision trees based XGBoosting using the | + | *We implement a decision trees based XGBoosting using the following parameters: |

:*objective = "binary:logistic" : To train a binary classification model (this is the case of fake news detection). | :*objective = "binary:logistic" : To train a binary classification model (this is the case of fake news detection). | ||

| Line 395: | Line 417: | ||

<br /> | <br /> | ||

===Using the model_XGB to classify news articles and calculating the accuracy of the model=== | ===Using the model_XGB to classify news articles and calculating the accuracy of the model=== | ||

| − | Now that we have a Model, we can use it to classify new data. Remember that we count | + | Now that we have a Model, we can use it to classify new data. Remember that we count on the labels of the data (fake (1) or reliable (0)). So, the goal is to verify the accuracy of the model by comparing the results returned for the model_XGB with the true labels. |

<syntaxhighlight lang="R"> | <syntaxhighlight lang="R"> | ||

| Line 443: | Line 465: | ||

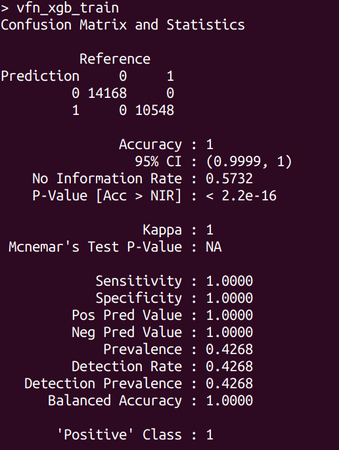

This accuracy doesn't really give a good measure of the efficiency of the model to classify new data, but it is used to validate that the algorithm has been correctly implemented. It is expected to get an accuracy close to 100% when using the classifying the Train data. | This accuracy doesn't really give a good measure of the efficiency of the model to classify new data, but it is used to validate that the algorithm has been correctly implemented. It is expected to get an accuracy close to 100% when using the classifying the Train data. | ||

| − | In <xr id="fig:Accuracy" />, we show the accuracy and | + | In <xr id="fig:Accuracy" />, we show the accuracy and Confusion matrix we got over the Training data. We got 100% accuracy over the Training data. |

| Line 451: | Line 473: | ||

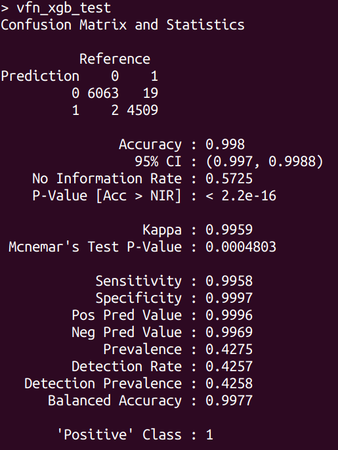

| − | A second measure of the accuracy of the model is calculated by classifying the news articles of our Test Data. This data was not used to build the Model. So, the | + | A second measure of the accuracy of the model is calculated by classifying the news articles of our Test Data. This data was not used to build the Model. So, the results of the classification over the Test Data give us a good measure of the accuracy of the model. |

| − | When we used the Test data to calculate the | + | When we used the Test data to calculate the accuracy, it must be noticed that, even if the Test data was not used to build our model, it comes from the same dataset of our Training data. It is therefore very likely that the news articles come from similar sources and have a similar writing style. That is why, when calculating the accuracy using the Test data (from the same dataset), it is also expected to get a good accuracy. |

We got an accuracy of 99% when classifying the 30% of the Victoria University dataset that was reserved as Test data. See the results and Confusion Matrix is shown in <xr id="fig:Accuracy" />. | We got an accuracy of 99% when classifying the 30% of the Victoria University dataset that was reserved as Test data. See the results and Confusion Matrix is shown in <xr id="fig:Accuracy" />. | ||

| Line 464: | Line 486: | ||

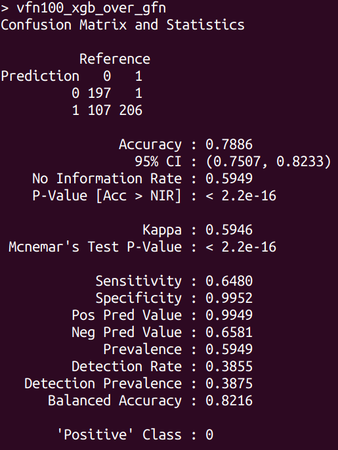

| − | The best measure of the | + | The best measure of the accuracy of a model is the one calculated by classifying data that is not related at all with the dataset used to build the model. In this project, we use the Gofaaas Dataset as our final Test data. |

We will later discuss carefully the results of the accuracy we got. For now, notice that we got an accuracy of 78% when using the model built with the Victoria University Dataset to classify the articles of our Gofaaas Dataset. | We will later discuss carefully the results of the accuracy we got. For now, notice that we got an accuracy of 78% when using the model built with the Victoria University Dataset to classify the articles of our Gofaaas Dataset. | ||

| Line 490: | Line 512: | ||

==Summary of results and Analysis== | ==Summary of results and Analysis== | ||

| − | In <xr id="fig:Results" />, we have | + | In <xr id="fig:Results" />, we have summarised the results of all the models built in the project. |

| Line 498: | Line 520: | ||

:*We got very good accuracies for almost all the models built. | :*We got very good accuracies for almost all the models built. | ||

| − | :*The best accuracies | + | :*The best accuracies were obtained with the XGBoost algorithm. |

::*We got above 97% using xgboost for the 3 external datasets and 83.66% for the Gofaaas Dataset. It was expected a lower accuracy for the Gofaaas dataset because it is composed only for 511 News articles. | ::*We got above 97% using xgboost for the 3 external datasets and 83.66% for the Gofaaas Dataset. It was expected a lower accuracy for the Gofaaas dataset because it is composed only for 511 News articles. | ||

| − | :*We got a bad accuracy only in the Naive Bayes Model created with the | + | :*We got a bad accuracy only in the Naive Bayes Model created with the Kaggle Fake News Dataset 1 and 2. |

*'''Regarding the Test accuracy when classifying data from another dataset:''' | *'''Regarding the Test accuracy when classifying data from another dataset:''' | ||

| − | :*This is the | + | :*This is the most important result of our project. As we already mentioned, the best measure of the accuracy of a model is the one calculated by classifying data that is not related to the dataset used to build the model. |

| − | :*In this project we used the Gofaaas Dataset as our final Test data. This is an important aspect of our work | + | :*In this project we used the Gofaaas Dataset as our final Test data. This is an important aspect of our work because the Gofaaas Dataset was built by our team in order to ensure that we have reliable data to validate the models built with other datasets. |

:*We created 2 final models that have been using to classify the data of the Gofaaas Dataset: | :*We created 2 final models that have been using to classify the data of the Gofaaas Dataset: | ||

::*Final Model 1: This model was built by using 3 datasets combined: Kaggle Fake News Datasets 1 and 2 and the Victoria University Dataset. | ::*Final Model 1: This model was built by using 3 datasets combined: Kaggle Fake News Datasets 1 and 2 and the Victoria University Dataset. | ||

| − | ::*Fianal Model 2: This model was built | + | ::*Fianal Model 2: This model was built using the Victoria University Dataset (Training data: 100%). |

| − | :*We got 73.97% and 78.86% accuracy using Final Model 1 and Final Model 2, respectively. We can not say that they are excellent accuracies (we generally look for values above 80); but we can say that | + | :*We got 73.97% and 78.86% accuracy using Final Model 1 and Final Model 2, respectively. We can not say that they are excellent accuracies (we generally look for values above 80); but we can say that especially the 78.87% accuracy, it is a value that we think is very acceptable for a first Version 1.0 of a Fake News Detector Project. |

| Line 637: | Line 659: | ||

==Fake News Detector R Library== | ==Fake News Detector R Library== | ||

| − | After all the work done training the models and | + | After all the work done training the models and analyze the results obtained, we needed to create an R function that takes a News article as argument and (using the Models created) return the authenticity tag («fake (1)» or «reliable (0)»). |

Latest revision as of 13:46, 5 February 2023

In this project, we have created a Supervised Machine Learning Model for Fake News Detection based on three different algorithms:

- Naive Bayes, Support Vector Machine and Gradient Boosting (XGBoost).

The final results of this project are:

- http://fakenewsdetector.sinfronteras.ws This is the link to a Web Application created to make it easy to interact with the Machine Learning Models. It determines whether a News Article is Fake or Reliable from text entered into an input field. The input text is processed by the Machine Learning Models at the back-end and the result is sent back to the client. This Web App was created using Shiny, an R package for building interactive web apps straight from R.

- https://github.com/adeloaleman/RFakeNewsDetector This is the link to a GitHub repository that contains an R Library we created to package the Machine Learning Models. The package contains essentially three functions: modelNB(), modelSVM() and modelXGBoost(). These functions take a news article as argument and, using the Models created, return the authenticity tag («fake (1)» or «reliable (0)»):

  - modelNB(): Based on the Naive Bayes Model.

  - modelSVM(): Based on the Support Vector Machine Model.

  - modelXGBoost(): Based on the Extreme Gradient Boosting Model.

The accuracy of the model:

- The Machine Learning Model created (using the Gradient Boosting algorithm) was able to determine the reliability of News Articles with an accuracy of 78.86%. In Figure 5 we show the accuracy we got for all the models created.

What we call Fake News in this project:

- Deliberately distorted information that secretly leaked into the communication process in order to deceive and manipulate (Vladimir Bitman).

- Therefore, our Machine Learning Models are only able to detect this kind of disinformation: Fake News Articles that were deliberately created in order to deceive and manipulate.

Contents

- 1 Problem: Classify News articles as fake or reliable

- 2 Solution: Supervised Machine Learning

- 3 The training dataset

- 4 How to calculate the accuracy of the Model

- 5 Procedure to build the Supervised Machine Learning Model

- 6 Summary of results and Analysis

- 7 Fake News Detector R Library

Problem: Classify News articles as fake or reliable

We are facing a Text classification problem. We want to classify News articles as «fake (1)» or «reliable (0)».

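The function that we want to build can be expressed this way:

$$f(x) = \begin{cases} 1 & \text{if the article is fake} \\ 0 & \text{if the article is reliable} \end{cases}$$

Where $x$ is the text of the article whose authenticity we want to verify.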

Solution: Supervised Machine Learning

We are implementing a Supervised Machine Learning Algorithm to build a Model (function) that will take an Input Text (the news article) and will return an Output Tag: «fake (1)» or «reliable (0)».

Supervised Learning

- Supervised Learning is the task of learning a function (the model) from labeled training data. The training data consists of a set of input-output pairs. We call it labeled data because we know the output (the target variable).

- In our case, the input is the text of the news article and the output is the authenticity tag («fake (1)» or «reliable (0)»).

- The Function obtained will be used for mapping new inputs. In other words, the function will return the authenticity tag («fake (1)» or «reliable (0)») for new input data (not labeled news articles).

The training dataset

In this project we used 4 different labeled fake news datasets, which we call:

- Kaggle Fake News Dataset 1

- Kaggle Fake News Dataset 2

- Victoria University Dataset

- Gofaaas Dataset: This is a small (511 news articles) but very trustworthy dataset. It has been built by our team in order to have reliable data that will be used as Test data to validate the models built with other datasets.

The first 3 datasets were built for similar fake news detector projects and are available online.

To simplify the explanation of the process followed to train our model, consider the example data schema shown in Figure 2. This will be our training data, from which our Machine Learning Algorithm will build the Classification Function (the Model). Each input-output pair (News Article - Label) will be used by the algorithm to learn the writing styles of Fake or Reliable news articles.

Figure 2: Data example

File:Fake news dataset example.pdf File:Fake news dataset example.ods

Datasets used

.

Kaggle Fake News Dataset 1

https://www.kaggle.com/c/fake-news/data

Distribution of the data:

The distribution of Stance classes in train_stances.csv is as follows:

| rows | unrelated | discuss | agree | disagree |
|---|---|---|---|---|
| 49972 | 0.73131 | 0.17828 | 0.0736012 | 0.0168094 |

Kaggle Fake News Dataset 2

https://www.kaggle.com/jruvika/fake-news-detection

Victoria University Dataset

.

Gofaaas Fake News Dataset

.

How to calculate the accuracy of the Model

The accuracy of the model is a measure used to evaluate how well the model is working. In our case, our model is used to classify news articles as «fake (1)» or «reliable (0)». Therefore, to calculate the accuracy we have to compare the results returned by the Model with the labels of the news articles.

For example, consider the data shown in Figure 2:

- Suppose that we build a Machine Learning Model using 70% of this data. Our model has learned the writing style of fake and reliable news articles from the input-output pairs of the training data.

- Then we use the Model created to classify the articles in the remaining 30% of the data, which has been reserved as Test data.

- Because the data is labeled, we know that the authenticity tags of the 3 articles of our Test data example are: 0, 1, 1 (reliable, fake, fake).

- Now, suppose that our Machine Learning Model returns 0, 0, 1 when classifying these 3 news articles of the Test data.

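- So, two out of the three classifications that the model has performed were correct. We can, therefore, calculate the accuracy as:

$$\text{Accuracy} = \frac{\text{correct classifications}}{\text{total classifications}} = \frac{2}{3} \approx 66.7\%$$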
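The calculation described in the example above can be sketched in a few lines of base R. The vector names `labels_true` and `labels_pred` are illustrative assumptions, not names taken from the project code.

```r
# Worked example from the text: 3 test articles
labels_true <- c(0, 1, 1)   # known labels: reliable, fake, fake
labels_pred <- c(0, 0, 1)   # labels returned by the (hypothetical) model

# Accuracy = proportion of predictions that match the true labels
accuracy <- mean(labels_pred == labels_true)

print(round(accuracy * 100, 2))   # 66.67
```

Taking the mean of a logical vector counts the matches and divides by the number of articles, which is exactly "correct classifications / total classifications".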

Procedure to build the Supervised Machine Learning Model

Because one of the goals of this project is to evaluate the performance of different algorithms, we built models for each dataset using 3 algorithms:

- Naive Bayes (NB): Implemented through the e1071 R Library

- Support vector machine (SVM): We used the RTextTools R Library, which depends on e1071.

- Gradient Boosting (XGB): Implemented using the xgboost R Library.

So, we obtained 3 Models for each dataset:

- Kaggle Fake News Dataset 1:

  - Model_NB

  - Model_SVM

  - Model_XGB

- The same for the other datasets.

In this section, we explain the procedure followed to build the XGBoost Model using the Victoria University Dataset. In Figure 5, we summarize the results obtained for each dataset.

The Models were built following these steps:

- Splitting the Dataset into Training and Test data

- Cleaning the data

- Building the Document-Term Matrix

- Running the Machine Learning Algorithm to create the model

- Using the model_XGB to classify news articles and calculating the accuracy of the model

- Cross validation

Splitting the Dataset into Training and Test data

- 70% of the dataset will be used as Train data: The model will be built using this portion of the data.

- 30% of the dataset will be used as Test data: We will use the model built using 70% of the dataset to classify the 30% that hasn't been used to train the model. This will allow us to calculate the accuracy of the classification made with our model. We will explain later how the accuracy is calculated.

```r
# ================================================================================
# Splitting the data into Train and Test data ----
# ================================================================================
# Fraction of the rows used for training (70%, as described above)
traindata_percentage <- 0.70

# Randomly take 70% of the row indices, sampling without replacement.
# The remaining 30% of the rows will be used for testing.
samp_id <- sample(seq_len(nrow(data)),   # see ?sample for details
                  size = round(nrow(data) * traindata_percentage),
                  replace = FALSE)

train <- data[samp_id, ]    # 70% of the dataset: Train data
test  <- data[-samp_id, ]   # remaining 30% of the dataset: Test data
```
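As a quick sanity check of the splitting step, the following minimal sketch applies the same idea to a toy data frame. The toy `data` object, its column names and the seed are assumptions made only for this illustration.

```r
set.seed(42)  # assumed seed, only to make the sketch reproducible

# Hypothetical toy dataset: 10 articles with a text and a label column
data <- data.frame(
  text  = paste("article", 1:10),
  label = rep(c(0, 1), times = 5),
  stringsAsFactors = FALSE
)

traindata_percentage <- 0.70

# Row indices of the training set, sampled without replacement
samp_id <- sample(seq_len(nrow(data)),
                  size = round(nrow(data) * traindata_percentage),
                  replace = FALSE)

train <- data[samp_id, ]
test  <- data[-samp_id, ]

# The two subsets are disjoint and together cover the whole dataset
print(nrow(train))                                 # 7
print(nrow(test))                                  # 3
print(length(intersect(train$text, test$text)))    # 0
```

Because the negative index `-samp_id` selects exactly the rows that were not sampled, no article can appear in both the Train and the Test data.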

Cleaning the data

We need to take everything that doesn't contribute to the analysis out of the data before running the algorithm. The cleaning of the data used in this project includes:

- Convert to lower-case letters.

- Remove punctuation.

- Remove numbers.

- Remove extra blank space.

- Remove stopwords: Stop words are commonly used words (a, an, and, the, this, those). Stop words are removed so that we can focus on the words that really differentiate the articles and not on the words that appear in all the articles.

- Stemming Words: Trimming words such as calling, called and calls to call.

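```r
# ================================================================================
# Cleaning the data ----
# ================================================================================
library(tm)   # text-cleaning helpers; stemDocument() also needs SnowballC installed

text <- data$text
text <- tolower(text)                        # Convert to lower-case letters
text <- removePunctuation(text)              # Remove punctuation
text <- removeNumbers(text)                  # Remove numbers
text <- removeWords(text, stopwords("en"))   # Remove stopwords
text <- stripWhitespace(text)                # Remove blank space
# Stemming words: stemDocument() stems every word of each document.
# SnowballC::wordStem() expects a vector of single words, so applying it
# directly to whole articles would not stem the words inside them.
text <- stemDocument(text, language = "english")
```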
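To see the effect of these cleaning steps on a single sentence, here is a minimal sketch that uses only base R regular expressions instead of the tm functions. The helper `clean_text()` and its short stop-word list are hypothetical and exist only for this illustration; stemming is left out because base R has no stemmer.

```r
# Hypothetical helper: a base-R approximation of the cleaning steps
clean_text <- function(text,
                       stop_words = c("a", "an", "and", "the", "this", "those")) {
  text <- tolower(text)                              # lower-case letters
  text <- gsub("[[:punct:]]", "", text)              # remove punctuation
  text <- gsub("[[:digit:]]", "", text)              # remove numbers
  pattern <- paste0("\\b(", paste(stop_words, collapse = "|"), ")\\b")
  text <- gsub(pattern, "", text, perl = TRUE)       # remove stop words (whole words only)
  text <- gsub("\\s+", " ", text)                    # collapse repeated blank space
  trimws(text)                                       # drop leading/trailing blanks
}

cleaned <- clean_text("The 3 Senators called this report FAKE, and those calls continue!")
print(cleaned)   # "senators called report fake calls continue"
```

A stemmer would additionally reduce "called" and "calls" to "call", which is the last step of the list above.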

Building the Document-Term Matrix

To be able to run a Machine Learning algorithm, we first need to transform each news article into a numerical representation in the form of a vector. In Figure 3, we show how to build the Document-Term Matrix. This matrix is the numerical representation that a Machine Learning algorithm is able to understand. As you can see, each row of this matrix represents a document (a news article) and each column represents a word in the training data. Thus, each document is defined by the frequency of the words that are in the dictionary composed of all the terms in our data.

```r
# ================================================================================
# Building the Document-Term Matrix ----
# ================================================================================
library(text2vec)   # itoken(), create_vocabulary(), vocab_vectorizer(), create_dtm()

# ----------------------------------------------------------------
# * Train data ----
# ----------------------------------------------------------------
# Vocabulary of tokens for the train data
# Tokenize the text and create a vocabulary of tokens including document counts
vocab_train <- create_vocabulary(itoken(text_train,
                                        preprocessor = tolower,
                                        tokenizer = word_tokenizer))
saveRDS(vocab_train, file = vocab_train_filename)

# Document-term matrix for the train data
dtm_train_xgb <- create_dtm(itoken(text_train,
                                   preprocessor = tolower,
                                   tokenizer = word_tokenizer),
                            vocab_vectorizer(vocab_train))

# ----------------------------------------------------------------
# * Test data ----
# ----------------------------------------------------------------
# Vocabulary of tokens for the test data (for inspection only: not used below)
vocab_test <- create_vocabulary(itoken(text_test,
                                       preprocessor = tolower,
                                       tokenizer = word_tokenizer))

# Document-term matrix for the test data ----
# It is built with the TRAIN vocabulary so that its columns match the
# columns of the matrix the model is trained on.
dtm_test_xgb <- create_dtm(itoken(text_test,
                                  preprocessor = tolower,
                                  tokenizer = word_tokenizer),
                           vocab_vectorizer(vocab_train))
```
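The idea behind the Document-Term Matrix can be illustrated with a small base R sketch that does not rely on text2vec. The three toy documents and all variable names are assumptions for illustration only; the project itself uses the text2vec functions of the code in this section.

```r
# Hypothetical toy corpus (already cleaned)
docs <- c(doc1 = "fake news spreads fast",
          doc2 = "reliable news spreads slowly",
          doc3 = "fake fake claims")

# Split each document into tokens (words)
tokens <- strsplit(docs, " ", fixed = TRUE)

# The vocabulary is the sorted set of all distinct terms
vocab <- sort(unique(unlist(tokens)))

# Count how often each vocabulary term occurs in each document
counts <- vapply(tokens,
                 function(tok) as.integer(table(factor(tok, levels = vocab))),
                 integer(length(vocab)))

# One row per document, one column per term
dtm <- t(counts)
colnames(dtm) <- vocab
print(dtm)

# A new document is mapped onto the SAME vocabulary:
# words that are not in the vocabulary ("tonight") are simply ignored
new_tok <- strsplit("fake news tonight", " ", fixed = TRUE)[[1]]
new_row <- as.integer(table(factor(new_tok, levels = vocab)))
names(new_row) <- vocab
print(new_row)
```

The last step mirrors why the Test data is vectorized with the training vocabulary: the model can only use the columns it has seen during training.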

Running the Machine Learning Algorithm to create the model

This is the point where we create the function using a Machine Learning Algorithm.

- First we need to add the labels to our DTM to create the Matrix that we pass to the xgboost function.

- We implement a decision-tree-based XGBoost model using the following parameters:

  - objective = "binary:logistic" : To train a binary classification model (this is the case of fake news detection).

  - max.depth = 7 : Maximum depth of a tree.

  - eta = 0.01 : Step size shrinkage used in each update to prevent overfitting.

  - nrounds = 10000 : The number of rounds for boosting.

- The model created with the xgboost function has been saved into the model_XGB variable. This model will be used later to classify new data.

```r
# ================================================================================
# Xgboost model building ----
# ================================================================================
library(xgboost)   # xgb.DMatrix(), xgboost()

# Turn the DTM into an XGB matrix using the labels that are to be learned ----
xgbMatrix_train <- xgb.DMatrix(dtm_train_xgb, label = labels_train)

# Parameters for xgboost
xgb_params <- list(
  objective   = "binary:logistic",   # binary classification: fake (1) / reliable (0)
  eta         = 0.01,                # step size shrinkage
  max.depth   = max_depth,           # maximum depth of a tree (7 in this project)
  eval_metric = "auc")

# Creating the «xgb model» obj ----
# setNrounds and setPrintEvery are set elsewhere (e.g. nrounds = 10000, print_every_n = 500)
system.time(
  model_XGB <- xgboost(data = xgbMatrix_train, params = xgb_params,
                       nrounds = setNrounds, print_every_n = setPrintEvery)
)

saveRDS(model_XGB, file = model_XGB_filename)
```

Using the model_XGB to classify news articles and calculating the accuracy of the model

Now that we have a Model, we can use it to classify new data. Remember that we have the labels of the data (fake (1) or reliable (0)). So, the goal is to verify the accuracy of the model by comparing the results returned by model_XGB with the true labels.

# =============================================================================== =

# Classifying data using the model created and displaying a Confusion matrix ----

# =============================================================================== =

# ---------------------------------------------------------------- -

# * Train data ----

# ---------------------------------------------------------------- -

# Create our prediction probabilities

pred_prob_train_XGB <- predict(model_XGB, dtm_train_xgb)

# Set our cutoff

pred_train_XGB <- ifelse(pred_prob_train_XGB >= 0.5, 1, 0)

saveRDS(pred_train_XGB, file = pred_train_XGB_filename)

# Create the confusion matrix

# https://rpubs.com/mharris/multiclass_xgboost

cm_train_XGB <- confusionMatrix(factor(pred_train_XGB), factor(labels_train), positive="1")

saveRDS(cm_train_XGB, file = cm_train_XGB_filename)

# ---------------------------------------------------------------- -

# * Test data ----

# ---------------------------------------------------------------- -

# Create our prediction probabilities

pred_prob_test_XGB <- predict(model_XGB, dtm_test_xgb)

# Set our cutoff

pred_test_XGB <- ifelse(pred_prob_test_XGB >= 0.5, 1, 0)

saveRDS(pred_test_XGB, file = pred_test_XGB_filename)

# Create the confusion matrix

cm_test_XGB <- confusionMatrix(factor(pred_test_XGB), factor(labels_test), positive="1")

saveRDS(cm_test_XGB, file = cm_test_XGB_filename)
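To make the cutoff and the Confusion matrix more concrete, here is a minimal sketch in base R that does by hand what the code above delegates to confusionMatrix. The probabilities and labels are made-up values used only for illustration; the layout (predictions in rows, true labels in columns) follows the convention used by caret.

```r
# Made-up probabilities and true labels (1 = fake, 0 = reliable)
pred_prob <- c(0.92, 0.10, 0.55, 0.49, 0.80, 0.30)
labels    <- c(1,    0,    0,    1,    1,    0)

# Same cutoff as above: probabilities >= 0.5 are tagged as fake (1)
pred <- ifelse(pred_prob >= 0.5, 1, 0)

# Confusion matrix: predictions in rows, true labels in columns
cm <- table(Prediction = factor(pred,   levels = c(0, 1)),
            Reference  = factor(labels, levels = c(0, 1)))

accuracy    <- sum(diag(cm)) / sum(cm)         # correct / total
sensitivity <- cm["1", "1"] / sum(cm[, "1"])   # fake articles detected
specificity <- cm["0", "0"] / sum(cm[, "0"])   # reliable articles detected

print(cm)
print(c(accuracy = accuracy, sensitivity = sensitivity, specificity = specificity))
```

In this toy example 4 of the 6 articles are classified correctly, with one false positive and one false negative.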

Train Accuracy

Train Accuracy: The accuracy of the Model over the Training data.

A first measure of the accuracy of the model can be calculated by classifying the same data that was used to build the model. Our Model should perform very well when classifying this data.

This accuracy doesn't really measure how well the model classifies new data, but it is used to validate that the algorithm has been correctly implemented. We expect an accuracy close to 100% when classifying the Train data.

In Figure 4, we show the accuracy and Confusion matrix we got over the Training data. We got 100% accuracy over the Training data.

Test Accuracy

Test Accuracy: The accuracy of the Model over the Test data.

A second measure of the accuracy of the model is calculated by classifying the news articles of our Test Data. This data was not used to build the Model. So, the results of the classification over the Test Data give us a good measure of the accuracy of the model.

Note that, even though the Test data was not used to build our model, it comes from the same dataset as our Training data. It is therefore very likely that the news articles come from similar sources and have a similar writing style. That is why a good accuracy is also expected when it is calculated using Test data from the same dataset.

We got an accuracy of 99% when classifying the 30% of the Victoria University dataset that was reserved as Test data. The results and Confusion Matrix are shown in Figure 4.
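As a reminder of what "reserved as Test data" means, a 70/30 split can be sketched in base R as follows. This is only an illustration under our own assumptions (a random split, a fixed seed and a tiny made-up data frame); the split code used in the project is not shown in this section.

```r
# Tiny made-up dataset: 20 articles with a label (1 = fake, 0 = reliable)
articles <- data.frame(
  text  = paste("article", 1:20),
  label = rep(c(1, 0), times = 10),
  stringsAsFactors = FALSE
)

set.seed(42)                                   # assumption: fixed seed for reproducibility
n_total   <- nrow(articles)
n_train   <- round(0.7 * n_total)              # 70% of the rows
train_idx <- sample(seq_len(n_total), size = n_train)
test_idx  <- setdiff(seq_len(n_total), train_idx)

train_data <- articles[train_idx, ]            # used to build the model
test_data  <- articles[test_idx, ]             # never seen during training

print(c(train = nrow(train_data), test = nrow(test_data)))
```

The two index sets do not overlap, so the Test accuracy is computed only on articles the model has never seen, even though they come from the same dataset.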

The accuracy of the Model over another dataset

The accuracy of the Model over the Gofaaas Dataset.

The best measure of the accuracy of a model is the one calculated by classifying data that is unrelated to the dataset used to build the model. In this project, we use the Gofaaas Dataset as our final Test data.

We will discuss these results carefully later. For now, notice that we got an accuracy of 78% when using the model built with the Victoria University Dataset to classify the articles of our Gofaaas Dataset.

Summary of results and Analysis

In Figure 5, we have summarised the results of all the models built in the project.

We built models for each dataset using three different algorithms: Naive Bayes, Support Vector Machine and Extreme Gradient Boosting (XGBoost).

- Regarding the Test accuracy when classifying the reserved Test data from the same dataset, notice that:

- We got very good accuracies for almost all the models built.

- The best accuracies were obtained with the XGBoost algorithm.

- We got above 97% using xgboost for the 3 external datasets and 83.66% for the Gofaaas Dataset. A lower accuracy was expected for the Gofaaas Dataset because it is composed of only 511 News articles.

- We got a bad accuracy only with the Naive Bayes Models created with the Kaggle Fake News Datasets 1 and 2 (72.02% and 78.80% Test accuracy, respectively).

- Regarding the Test accuracy when classifying data from another dataset:

- This is the most important result of our project. As we already mentioned, the best measure of the accuracy of a model is the one calculated by classifying data that is not related to the dataset used to build the model.

- In this project we used the Gofaaas Dataset as our final Test data. This is an important aspect of our work because the Gofaaas Dataset was built by our team in order to ensure that we have reliable data to validate the models built with other datasets.

- We created 2 final models that have been used to classify the data of the Gofaaas Dataset:

- Final Model 1: This model was built by using 3 datasets combined: Kaggle Fake News Datasets 1 and 2 and the Victoria University Dataset.

- Final Model 2: This model was built using the Victoria University Dataset (Training data: 100%).

- We got 73.97% and 78.86% accuracy using Final Model 1 and Final Model 2, respectively. We cannot say that these are excellent accuracies (we generally look for values above 80%), but we think that the 78.86% accuracy in particular is very acceptable for Version 1.0 of a Fake News Detector Project.
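To give a feeling for what these percentages mean on a dataset of 511 articles, the following sketch converts them back into approximate counts and estimates how much a Test accuracy can fluctuate on such a small test set. It assumes that all 511 Gofaaas articles were classified by the final models, that the reserved Test data is about 30% of 511 articles, and that a simple binomial standard error is an acceptable approximation.

```r
n_gofaaas <- 511

# Accuracies of the two final models over the whole Gofaaas Dataset
acc_final <- c(final_model_1 = 0.7397, final_model_2 = 0.7886)

# Approximate number of correctly classified articles (378 and 403)
correct_final <- round(acc_final * n_gofaaas)

# XGBoost model trained on Gofaaas itself: Test data is 30% of 511 articles
n_test_gofaaas  <- round(0.30 * n_gofaaas)
acc_xgb_gofaaas <- 0.8366

# Binomial standard error of an accuracy measured on n_test_gofaaas articles
se_xgb_gofaaas <- sqrt(acc_xgb_gofaaas * (1 - acc_xgb_gofaaas) / n_test_gofaaas)

print(correct_final)
print(c(n_test = n_test_gofaaas, std_error = round(se_xgb_gofaaas, 4)))
```

Under these assumptions the standard error is roughly 3 percentage points, so accuracies measured on the Gofaaas Dataset are less precise than those measured on the larger datasets.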

| Algorithm | R Package | Accuracy | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | Kaggle Fake News Dataset 1 | | Kaggle Fake News Dataset 2 | | Victoria University Dataset | | Gofaaas Fake News Dataset | | Final Model 1: Combination of the 3 Datasets | | Final Model 2: Victoria University Dataset | |
| | | 20,800 News articles | | 4,009 News articles | | 35,309 News articles | | 511 News articles | | 60,118 News articles | | 35,309 News articles | |
| | | Data split ratio: Train data 70%, Test data 30% | | Data split ratio: Train data 70%, Test data 30% | | Data split ratio: Train data 70%, Test data 30% | | Data split ratio: Train data 70%, Test data 30% | | Data split ratio: Train data 100% | | Data split ratio: Train data 100% | |
| | | Train Accuracy | Test Accuracy | Train Accuracy | Test Accuracy | Train Accuracy | Test Accuracy | Train Accuracy | Test Accuracy | Train Accuracy | Test Accuracy (tested over the Gofaaas Dataset) | Train Accuracy | Test Accuracy (tested over the Gofaaas Dataset) |
| Naive Bayes | e1071 | 72.03% | 72.02% | 80.90% | 78.80% | 97.93% | 97.95% | 92.74% | 81.05% | 86.06% | 67.12% | | |
| Support vector machine | We used RTextTools, which depends on e1071 | 99.74% | 94.33% | 99.57% | 93.43% | 99.97% | 99.17% | 100% | 75.16% | 99.57% | 59.69% | | |
| Extreme Gradient Boosting | xgboost | 99.97% | 97.13% | 100% | 98.25% | 100% | 99.80% | 100% | 83.66% | 99.99% | 73.97% | 100% | 78.86% |

Figure 5: Summary of results (accuracy for all the models created)

File:Fake news detection-Summary of results.pdf File:Fake news detection-Summary of results.ods File:Fake news detection-Summary of results 2.pdf File:Fake news detection-Summary of results 2.ods

Fake News Detector R Library

After all the work done training the models and analyzing the results obtained, we needed to create an R function that takes a News article as argument and (using the Models created) returns the authenticity tag («fake (1)» or «reliable (0)»).

This R function takes a news article as argument and, using the XGBoost model created from the combination of the 3 datasets, returns the authenticity tag («fake (1)» or «reliable (0)»):

#' modelXGBoost Function

#'

#' This function allows you to check if a news article is authentic or fake.

#' It was trained using the xgboost package and the Kaggle Fake News Dataset

#' @param fileName Path to a .txt or .csv file, or a character string containing the text of the article

#' @export

#' @examples

#' modelXGBoost(FileName)

#' @import xgboost

#' @import tm

#' @import text2vec

#' @import tools

#' @import SnowballC

#' @importFrom readr read_file

modelXGBoost <- function(fileName){

if(is.character(fileName) && length(fileName) == 1) {

if(file.exists(fileName)){

if(file_ext(fileName) == "txt"){

data <- data.frame(read_file(fileName))

names(data)[1] <- "text"

}else if(file_ext(fileName) == "csv"){

data <- data.frame(readLines(fileName))

names(data)[1] <- "text"

}else{

# Stop here: without a supported extension no text is read and «data» would be undefined below

stop("The extension of the file entered is not supported. Make sure the file you are trying to read has a supported extension (.txt or .csv)")

}

} else{

print("Warning!! The parameter you have entered is NOT a valid path to a text file.

The function is analysing the text contained in the «character» object you have entered as parameter.")

data <- data.frame(fileName)

names(data)[1] <- "text"

}

}else{

print("Warning!! The parameter you have entered is NOT a valid path to a file.

The function is analysing the text contained in the object you have entered as parameter.")

data <- data.frame(fileName)

names(data)[1] <- "text"

}

# Text pre-processing ----

text <- data$text

text <- tolower(text)

text <- removePunctuation(text)

text <- removeNumbers(text)

text <- removeWords(text, stopwords("en")) # stopwords

text <- stripWhitespace(text) # Remove blank space

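# Stemming. Note: wordStem() treats each element of the vector as a single word, so an article passed as one string is not stemmed word by word

text <- SnowballC::wordStem(text, language = "english")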

# Building the document-term matrix using the tokenized article text. This returns a dgCMatrix object ----

dtm <- create_dtm(itoken(text,

preprocessor = tolower,

tokenizer = word_tokenizer),

vocab_vectorizer(vocab_train))

pred_prob <- predict(model_XGB, dtm)

pred <- ifelse(pred_prob >= 0.5, 1, 0)

return(pred)

}
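The way the function decides between a file path and raw text can be isolated in a small base R helper. The sketch below is a simplified variant written only for illustration: read_article_input is a hypothetical name that is not part of the library, and it stops with an error on unsupported extensions.

```r
# Hypothetical helper (not part of the library): returns a data frame
# with a «text» column from either a file path or raw article text.
read_article_input <- function(x) {
  if (is.character(x) && length(x) == 1 && file.exists(x)) {
    ext <- tolower(tools::file_ext(x))
    if (ext == "txt") {
      # A .txt file is treated as one single article
      text <- paste(readLines(x, warn = FALSE), collapse = "\n")
    } else if (ext == "csv") {
      # A .csv file is treated as one article per line
      text <- readLines(x, warn = FALSE)
    } else {
      stop("Unsupported file extension: use .txt or .csv")
    }
  } else {
    # Not an existing file: analyse the object itself as text
    text <- as.character(x)
  }
  data.frame(text = text, stringsAsFactors = FALSE)
}

# Usage with a temporary file and with raw text
tmp_txt <- tempfile(fileext = ".txt")
writeLines(c("First line.", "Second line."), tmp_txt)

from_file <- read_article_input(tmp_txt)
from_text <- read_article_input("Some raw article text")

print(from_file$text)
print(from_text$text)
```

Keeping this step separate from the pre-processing and prediction steps would make each part of the function easier to test on its own.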